Nonlinear Systems of Equations: Newton-Raphson Method

Newton-Raphson Method

The Newton-Raphson method is the method of choice for solving nonlinear systems of equations. Many engineering software packages (especially finite element analysis software) that solve nonlinear systems of equations use the Newton-Raphson method. The derivation of the method for nonlinear systems is very similar to the one-dimensional version in the root finding section. Assume a nonlinear system of equations of the form:

![Rendered by QuickLaTeX.com \[\begin{split} f_1(x_1,x_2,\cdots,x_n)&=0\\ f_2(x_1,x_2,\cdots,x_n)&=0\\ &\vdots\\ f_n(x_1,x_2,\cdots,x_n)&=0 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-09564db9f5b122a6ef7dd81f8b73e427_l3.png)

If the components of one iteration ![]() are known as:

are known as: ![]() , then, the Taylor expansion of the first equation around these components is given by:

, then, the Taylor expansion of the first equation around these components is given by:

![Rendered by QuickLaTeX.com \[\begin{split} f_1\left(x_1^{(i+1)},x_2^{(i+1)},\cdots,x_n^{(i+1)}\right)\approx&f_1\left(x_1^{(i)},x_2^{(i)},\cdots,x_n^{(i)}\right)+\\ &\frac{\partial f_1}{\partial x_1}\bigg|_{x^{(i)}}\left(x_1^{(i+1)}-x_1^{(i)}\right)+\frac{\partial f_1}{\partial x_2}\bigg|_{x^{(i)}}\left(x_2^{(i+1)}-x_2^{(i)}\right)+\cdots+\\ &\frac{\partial f_1}{\partial x_n}\bigg|_{x^{(i)}}\left(x_n^{(i+1)}-x_n^{(i)}\right) \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-f24fbf8fb01b29027ce0d4a3e952a9c1_l3.png)

Applying the Taylor expansion in the same manner for ![]() , we obtained the following system of linear equations with the unknowns being the components of the vector

, we obtained the following system of linear equations with the unknowns being the components of the vector ![]() :

:

![Rendered by QuickLaTeX.com \[ \left(\begin{array}{c}f_1\left(x^{(i+1)}\right)\\f_2\left(x^{(i+1)}\right)\\\vdots \\ f_n\left(x^{(i+1)}\right)\end{array}\right) = \left(\begin{array}{c}f_1\left(x^{(i)}\right)\\f_2\left(x^{(i)}\right)\\\vdots \\ f_n\left(x^{(i)}\right)\end{array}\right)+ \left(\begin{matrix}\frac{\partial f_1}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_1}{\partial x_2}\big|_{x^{(i)}} &\cdots&\frac{\partial f_1}{\partial x_n}\big|_{x^{(i)}} \\\frac{\partial f_2}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_2}{\partial x_2}\big|_{x^{(i)}} & \cdots & \frac{\partial f_2}{\partial x_n}\big|_{x^{(i)}}\\\vdots&\vdots&\vdots&\vdots\\ \frac{\partial f_n}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_n}{\partial x_2}\big|_{x^{(i)}} &\cdots& \frac{\partial f_n}{\partial x_n}\big|_{x^{(i)}} \end{matrix}\right)\left(\begin{array}{c}\left(x_1^{(i+1)}-x_1^{(i)}\right)\\\left(x_2^{(i+1)}-x_2^{(i)}\right)\\\vdots\\\left(x_n^{(i+1)}-x_n^{(i)}\right)\end{array}\right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-3d7617d7ec3b6f0025bc5d7ff75ea76d_l3.png)

By setting the left hand side to zero (which is the desired value for the functions ![]() , then, the system can be written as:

, then, the system can be written as:

![Rendered by QuickLaTeX.com \[ \left(\begin{matrix}\frac{\partial f_1}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_1}{\partial x_2}\big|_{x^{(i)}} &\cdots&\frac{\partial f_1}{\partial x_n}\big|_{x^{(i)}} \\\frac{\partial f_2}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_2}{\partial x_2}\big|_{x^{(i)}} & \cdots & \frac{\partial f_2}{\partial x_n}\big|_{x^{(i)}}\\\vdots&\vdots&\vdots&\vdots\\ \frac{\partial f_n}{\partial x_1}\big|_{x^{(i)}} & \frac{\partial f_n}{\partial x_2}\big|_{x^{(i)}} &\cdots& \frac{\partial f_n}{\partial x_n}\big|_{x^{(i)}} \end{matrix}\right)\left(\begin{array}{c}\left(x_1^{(i+1)}-x_1^{(i)}\right)\\\left(x_2^{(i+1)}-x_2^{(i)}\right)\\\vdots\\\left(x_n^{(i+1)}-x_n^{(i)}\right)\end{array}\right)= -\left(\begin{array}{c}f_1\left(x^{(i)}\right)\\f_2\left(x^{(i)}\right)\\\vdots \\ f_n\left(x^{(i)}\right)\end{array}\right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-638c516ce27af32edb0bd2968e6d8776_l3.png)

Setting ![]() , the above equation can be written in matrix form as follows:

, the above equation can be written in matrix form as follows:

![]()

where ![]() is an

is an ![]() matrix,

matrix, ![]() is a vector of

is a vector of ![]() components and

components and ![]() is an

is an ![]() -dimensional vector with the components

-dimensional vector with the components ![]() . If

. If ![]() is invertible, then, the above system can be solved as follows:

is invertible, then, the above system can be solved as follows:

![]()

Example

Use the Newton-Raphson method with ![]() to find the solution to the following nonlinear system of equations:

to find the solution to the following nonlinear system of equations:

![]()

Solution

In addition to requiring an initial guess, the Newton-Raphson method requires evaluating the derivatives of the functions ![]() and

and ![]() . If

. If ![]() , then it has the following form:

, then it has the following form:

![]()

Assuming an initial guess of ![]() and

and ![]() , then the vector

, then the vector ![]() and the matrix

and the matrix ![]() have components:

have components:

![]()

The components of the vector ![]() can be computed as follows:

can be computed as follows:

![]()

Therefore, the new estimates for ![]() and

and ![]() are:

are:

![]()

The approximate relative error is given by:

![]()

For the second iteration the vector ![]() and the matrix

and the matrix ![]() have components:

have components:

![]()

The components of the vector ![]() can be computed as follows:

can be computed as follows:

![]()

Therefore, the new estimates for ![]() and

and ![]() are:

are:

![]()

The approximate relative error is given by:

![]()

For the third iteration the vector ![]() and the matrix

and the matrix ![]() have components:

have components:

![]()

The components of the vector ![]() can be computed as follows:

can be computed as follows:

![]()

Therefore, the new estimates for ![]() and

and ![]() are:

are:

![]()

The approximate relative error is given by:

![]()

Finally, for the fourth iteration the vector ![]() and the matrix

and the matrix ![]() have components:

have components:

![]()

The components of the vector ![]() can be computed as follows:

can be computed as follows:

![]()

Therefore, the new estimates for ![]() and

and ![]() are:

are:

![]()

The approximate relative error is given by:

![]()

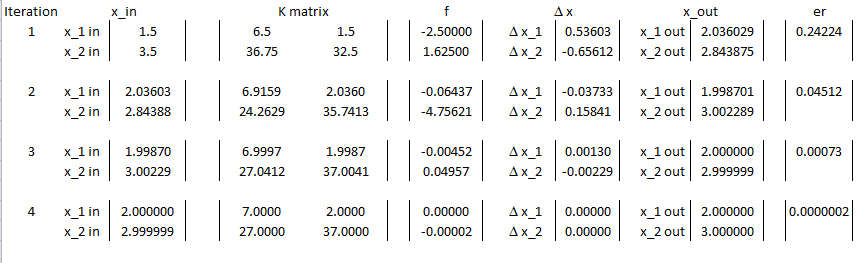

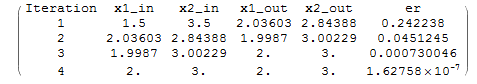

Therefore, convergence is achieved after 4 iterations which is much faster than the 9 iterations in the fixed-point iteration method. The following is the Microsoft Excel table showing the values generated in every iteration:

The Newton-Raphson method can be implemented directly in Mathematica using the “FindRoot” built-in function:

View Mathematica CodeFindRoot[{x1^2 + x1*x2 - 10 == 0, x2 + 3*x1*x2^2 == 57}, {{x1, 1.5}, {x2, 3.5}}]

# Using Sympy

import sympy as sp

x1, x2 = sp.symbols('x1 x2')

sol = sp.nsolve((x1**2 + x1*x2 - 10, x2 + 3*x1*x2**2 - 57), (x1,x2), (1.5,3.5))

print("Sympy: ",sol)

# Using Scipy's root

from scipy.optimize import root

def equations(x):

x1, x2 = x

return [x1**2 + x1*x2 - 10,

x2 + 3*x1*x2**2 - 57]

sol = root(equations, [1.5,3.5])

print("Scipy: ",sol.x)

MATLAB code for the Newton-Raphson example

MATLAB file 1 (nrsystem.m)

MATLAB file 2 (f.m)

MATLAB file 3 (K.m)

The following “While” loop in Mathematica provides an iterative procedure that implements the Newton-Raphson Method in Mathematica and produces the table shown:

View Mathematica Codex = {x1, x2};

f1 = x1^2 + x1*x2 - 10;

f2 = x2 + 3 x1*x2^2 - 57;

f = {f1, f2};

K = Table[D[f[[i]], x[[j]]], {i, 1, 2}, {j, 1, 2}];

K // MatrixForm

xtable = {{1.5, 3.5}};

xrule = {{x1 -> xtable[[1, 1]], x2 -> xtable[[1, 2]]}};

Ertable = {1}

NMax = 100;

i = 1;

eps = 0.000001

While[And[Ertable[[i]] > eps, i <= NMax],

Delta = -(Inverse[K].f) /. xrule[[i]];

xtable = Append[xtable, Delta + xtable[[Length[xtable]]]];

xrule = Append[

xrule, {x1 -> xtable[[Length[xtable], 1]],

x2 -> xtable[[Length[xtable], 2]]}];

er = Norm[Delta]/Norm[xtable[[i+1]]]; Ertable = Append[Ertable, er]; i++]

Title = {"Iteration", "x1_in", "x2_in", "x1_out", "x2_out", "er"};

T2 = Table[{i, xtable[[i, 1]], xtable[[i, 2]], xtable[[i + 1, 1]], xtable[[i + 1, 2]], Ertable[[i + 1]]}, {i, 1, Length[xtable] - 1}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

import numpy as np

import sympy as sp

import pandas as pd

x1, x2 = sp.symbols('x1 x2')

x = [x1, x2]

f1 = x1**2 + x1*x2 - 10

f2 = x2 + 3*x1*x2**2 - 57

f = sp.Matrix([f1, f2])

K = sp.Matrix([[f[i].diff(x[j]) for j in range(2)] for i in range(2)])

xtable = [[1.5, 3.5]]

Ertable = [1]

NMax = 100

i = 0

eps = 0.000001

Delta = -(K.inv()*f)

while Ertable[i] > eps and i <= NMax:

xtable.append(np.add(list(Delta.subs({x1:xtable[-1][0], x2:xtable[-1][1]})), xtable[-1]))

er = np.linalg.norm(np.array(Delta.subs({x1:xtable[-2][0], x2:xtable[-2][1]})).astype(np.float64))/np.linalg.norm(np.array(xtable[i+1]).astype(np.float64))

Ertable.append(er)

i+=1

T2 = [[i + 1, xtable[i][0], xtable[i][1], xtable[i + 1][0], xtable[i + 1][1], Ertable[i + 1]] for i in range(len(xtable) - 1)]

T2 = pd.DataFrame(T2, columns=["Iteration", "x1_in", "x2_in", "x1_out", "x2_out", "er"])

display(T2)

Thank you Dr. Samer and Dr. Linsey and the entire team, for a great and very informative lectures notes, educational videos.

My best and sincere regards… Naim

Thank you very much for these good notes. Kindly send me the pdf of the entire notes if possible. Be blessed

Can we solve n equations with n unknowns using this method?

Is there any Mathematica code to solve system of n equations with n unknowns? Let us know.

numerical analysis