Open Methods: Fixed-Point Iteration Method

The Method

Let ![]() . A fixed point of

. A fixed point of ![]() is defined as

is defined as ![]() such that

such that ![]() . If

. If ![]() , then a fixed point of

, then a fixed point of ![]() is the intersection of the graphs of the two functions

is the intersection of the graphs of the two functions ![]() and

and ![]() .

.

The fixed-point iteration method relies on replacing the expression ![]() with the expression

with the expression ![]() . Then, an initial guess for the root

. Then, an initial guess for the root ![]() is assumed and input as an argument for the function

is assumed and input as an argument for the function ![]() . The output

. The output ![]() is then the estimate

is then the estimate ![]() . The process is then iterated until the output

. The process is then iterated until the output ![]() . The following is the algorithm for the fixed-point iteration method. Assuming

. The following is the algorithm for the fixed-point iteration method. Assuming ![]() ,

, ![]() , and maximum number of iterations

, and maximum number of iterations ![]() :

:

Set ![]() , and calculate

, and calculate ![]() and compare with

and compare with ![]() . If

. If ![]() or if

or if ![]() , then stop the procedure, otherwise, repeat.

, then stop the procedure, otherwise, repeat.

The Babylonian method for finding roots described in the introduction section is a prime example of the use of this method. If we seek to find the solution for the equation ![]() or

or ![]() , then a fixed-point iteration scheme can be implemented by writing this equation in the form:

, then a fixed-point iteration scheme can be implemented by writing this equation in the form:

![]()

Example

Consider the function ![]() . We wish to find the root of the equation

. We wish to find the root of the equation ![]() , i.e.,

, i.e., ![]() . The expression can be rearranged to the fixed-point iteration form

. The expression can be rearranged to the fixed-point iteration form ![]() and an initial guess

and an initial guess ![]() can be used. The tolerance

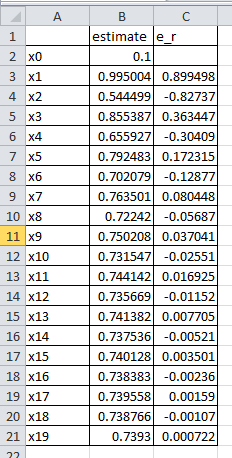

can be used. The tolerance ![]() is set to 0.001. The following is the Microsoft Excel table showing that the tolerance is achieved after 19 iterations:

is set to 0.001. The following is the Microsoft Excel table showing that the tolerance is achieved after 19 iterations:

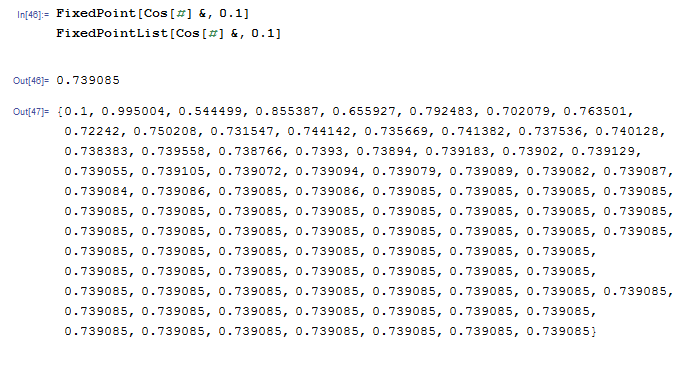

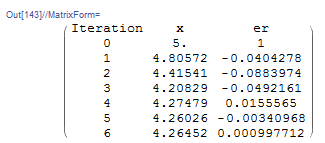

Mathematica has a built-in algorithm for the fixed-point iteration method. The function “FixedPoint[f,Expr,n]” applies the fixed-point iteration method ![]() with the initial guess being “Expr” with a maximum number of iterations “n”. As well, the function “FixedPointList[f,Expr,n]” returns the list of applying the function “n” times. If “n” is omitted, then the software applies the fixed-point iteration method until convergence is achieved. Here is a snapshot of the code and the output for the fixed-point iteration

with the initial guess being “Expr” with a maximum number of iterations “n”. As well, the function “FixedPointList[f,Expr,n]” returns the list of applying the function “n” times. If “n” is omitted, then the software applies the fixed-point iteration method until convergence is achieved. Here is a snapshot of the code and the output for the fixed-point iteration ![]() . The software finds the solution

. The software finds the solution ![]() . Compare the list below with the Microsoft Excel sheet above.

. Compare the list below with the Microsoft Excel sheet above.

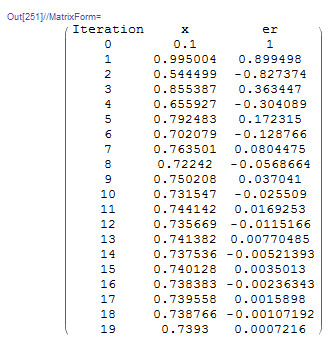

Alternatively, simple code can be written in Mathematica with the following output

x = {0.1};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = Cos[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

import numpy as np import pandas as pd x = [0.1] er = [1] es = 0.001 MaxIter = 100 i = 0 while i <= MaxIter and abs(er[i]) > es: xnew = np.cos(x[i]) x.append(xnew) ernew = (xnew - x[i])/xnew er.append(ernew) i+=1 SolutionTable = [[i, x[i], er[i]] for i in range(len(x))] pd.DataFrame(SolutionTable, columns=["Iteration", "x", "er"])

The following MATLAB code runs the fixed-point iteration method to find the root of a function ![]() with initial guess

with initial guess ![]() . The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. Your function should be written in the form

. The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. Your function should be written in the form ![]() . Then call the fixed point iteration function with fixedpointfun2(@(x) g(x), x0). For example, try fixedpointfun2(@(x) cos(x), 0.1)

. Then call the fixed point iteration function with fixedpointfun2(@(x) g(x), x0). For example, try fixedpointfun2(@(x) cos(x), 0.1)

Convergence

The fixed-point iteration method converges easily if in the region of interest we have ![]() . Otherwise, it does not converge. Here is an example where the fixed-point iteration method fails to converge.

. Otherwise, it does not converge. Here is an example where the fixed-point iteration method fails to converge.

Example

Consider the function ![]() . To find the root of the equation

. To find the root of the equation ![]() , the expression can be converted into the fixed-point iteration form as:

, the expression can be converted into the fixed-point iteration form as:![]() . Implementing the fixed-point iteration procedure shows that this expression almost never converges but oscillates:

. Implementing the fixed-point iteration procedure shows that this expression almost never converges but oscillates:

Clear[x, g]

x = {-0.9};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

g[x_] := (Sin[5 x] + Cos[2 x]) + x

While[And[i <= MaxIter, Abs[er[[i]]] > es],xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew;er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

import numpy as np

import pandas as pd

x = [-0.9]

er = [1]

es = 0.001

MaxIter = 100

i = 0

def g(x): return np.sin(5*x) + np.cos(2*x) + x

while i < MaxIter and abs(er[i]) > es:

xnew = g(x[i])

x.append(xnew)

ernew = (xnew - x[i])/xnew

er.append(ernew)

i+=1

SolutionTable = [[i, x[i], er[i]] for i in range(len(x))]

pd.set_option("display.max_rows", None, "display.max_columns", None)

pd.DataFrame(SolutionTable, columns=["Iteration", "x", "er"])

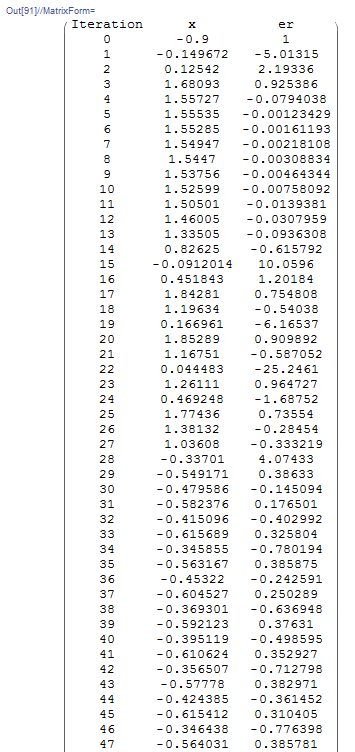

The following is the output table showing the first 45 iterations. The value of the error oscillates and never decreases:

The expression ![]() can be converted to different forms

can be converted to different forms ![]() . For example, assuming

. For example, assuming ![]() :

:

![]()

If this expression is used, the fixed-point iteration method does converge depending on the choice of ![]() . For example, setting

. For example, setting ![]() gives the estimate for the root

gives the estimate for the root ![]() with the required accuracy:

with the required accuracy:

View Mathematica Code

View Mathematica CodeClear[x, g]

x = {5.};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

g[x_] := (Sin[5 x] + Cos[2 x])/x + x

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

import numpy as np import pandas as pd x = [5] er = [1] es = 0.001 MaxIter = 100 i = 0 def g(x): return (np.sin(5*x) + np.cos(2*x))/x + x while i <= MaxIter and abs(er[i]) > es: xnew = g(x[i]) x.append(xnew) ernew = (xnew - x[i])/xnew er.append(ernew) i+=1 SolutionTable = [[i, x[i], er[i]] for i in range(len(x))] pd.DataFrame(SolutionTable, columns=["Iteration", "x", "er"])

Obviously, unlike the bracketing methods, this open method cannot find a root in a specific interval. The root is a function of the initial guess ![]() and the form

and the form ![]() , but the user has no other way of forcing the root to be within a specific interval. It is very difficult, for example, to use the fixed-point iteration method to find the roots of the expression

, but the user has no other way of forcing the root to be within a specific interval. It is very difficult, for example, to use the fixed-point iteration method to find the roots of the expression ![]() in the interval

in the interval ![]() .

.

Analysis of Convergence of the Fixed-Point Method

The objective of the fixed-point iteration method is to find the true value ![]() that satisfies

that satisfies ![]() . In each iteration we have the estimate

. In each iteration we have the estimate ![]() . Using the mean value theorem, we can write the following expression:

. Using the mean value theorem, we can write the following expression:

![]()

for some ![]() in the interval between

in the interval between ![]() and the true value

and the true value ![]() . Replacing

. Replacing ![]() and

and ![]() in the above expression yields:

in the above expression yields:

![]()

The error after iteration ![]() is equal to

is equal to ![]() while that after iteration

while that after iteration ![]() is equal to

is equal to ![]() . Therefore, the above expression yields:

. Therefore, the above expression yields:

![]()

For the error to reduce after each iteration, the first derivative of ![]() , namely

, namely ![]() , should be bounded by 1 in the region of interest (around the required root):

, should be bounded by 1 in the region of interest (around the required root):

![]()

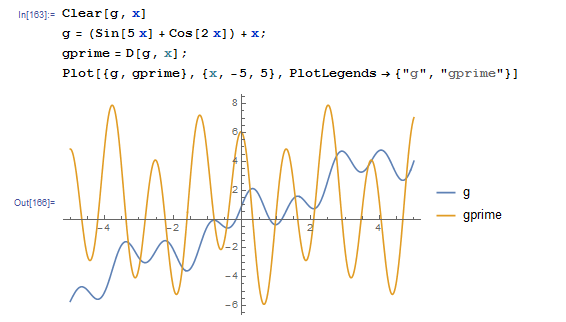

We can now try to understand why, in the previous example, the expression ![]() does not converge. When we plot

does not converge. When we plot ![]() and

and ![]() we see that

we see that ![]() oscillates rapidly with values higher than 1:

oscillates rapidly with values higher than 1:

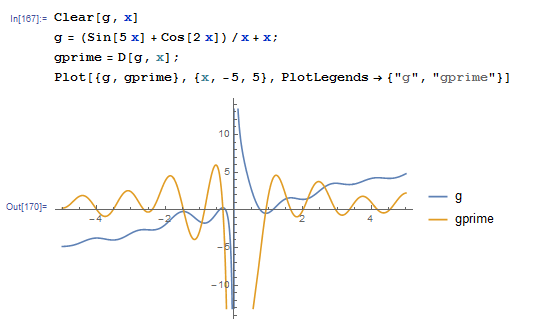

On the other hand, the expression ![]() converges for roots that are away from zero. When we plot

converges for roots that are away from zero. When we plot ![]() and

and ![]() we see that the oscillations in

we see that the oscillations in ![]() decrease when

decrease when ![]() is away from zero and

is away from zero and ![]() is bounded by 1 in some regions:

is bounded by 1 in some regions:

Example

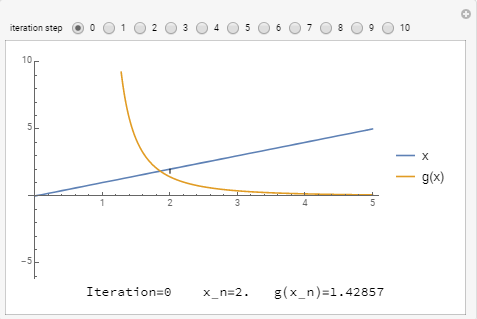

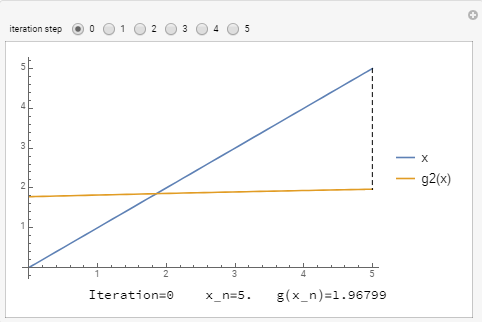

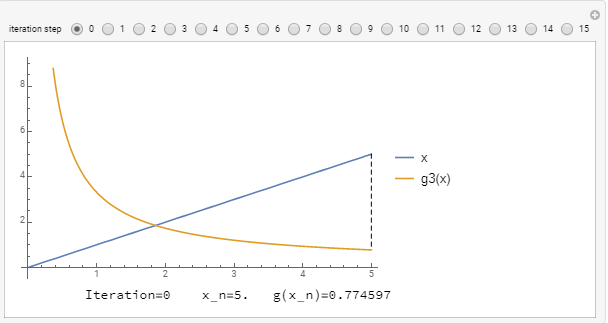

In this example, we will visualize the example of finding the root of the expression ![]() . There are three different forms for the fixed-point iteration scheme:

. There are three different forms for the fixed-point iteration scheme:

![Rendered by QuickLaTeX.com \[\begin{split}x&=g_1(x)=\frac{10}{x^3-1}\\x&=g_2(x)=(x+10)^{1/4}\\x&=g_3(x)=\frac{(x+10)^{1/2}}{x}\end{split}\]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-46733bd4fcce39e4469af21028475e5f_l3.png)

To visualize the convergence, notice that if we plot the separate graphs of the function ![]() and the function

and the function ![]() , then, the root is the point of intersection when

, then, the root is the point of intersection when ![]() . For

. For ![]() , the slope

, the slope ![]() is not bounded by 1 and so, the scheme diverges no matter what

is not bounded by 1 and so, the scheme diverges no matter what ![]() is. Here we start with

is. Here we start with ![]() :

:

For ![]() , the slope

, the slope ![]() is bounded by 1 and so, the scheme converges really fast no matter what

is bounded by 1 and so, the scheme converges really fast no matter what ![]() is. Here we start with

is. Here we start with ![]() :

:

For ![]() , the slope

, the slope ![]() is bounded by 1 and so, the scheme converges but slowly. Here we start with

is bounded by 1 and so, the scheme converges but slowly. Here we start with ![]() :

:

The following is the Mathematica code used to generate one of the tools above:

View Mathematica CodeManipulate[

x = {5.};

er = {1};

es = 0.001;

MaxIter = 20;

i = 1;

g[x_] := (x + 10)^(1/4);

While[And[i <= MaxIter, Abs[er[[i]]] > es],xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew;er = Append[er, ernew]; i++];

T1 = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T1}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

LineTable1 = Table[{{T[[i, 2]], T[[i, 2]]}, {T[[i, 2]], g[T[[i, 2]]]}}, {i, 2, n}];

LineTable2 = Table[{{T[[i + 1, 2]], T[[i + 1, 2]]}, {T[[i, 2]], g[T[[i, 2]]]}}, {i, 2, n - 1}];

Grid[{{Plot[{x, g[x]}, {x, 0, 5}, PlotLegends -> {"x", "g3(x)"},ImageSize -> Medium, Epilog -> {Dashed, Line[LineTable1], Line[LineTable2]}]}, {Row[{"Iteration=", n - 2, " x_n=", T[[n, 2]], " g(x_n)=", g[T[[n, 2]]]}]}}], {n, 2, Length[T], 1}]

import numpy as np

import matplotlib.pyplot as plt

from ipywidgets.widgets import interact

def g(x): return (x + 10)**(1/4)

@interact(n=(2,6,1))

def update(n=1):

x = [5]

er = [1]

es = 0.001

MaxIter = 20

i = 0

while i <= MaxIter and abs(er[i]) > es:

xnew = g(x[i])

x.append(xnew)

ernew = (xnew - x[i])/xnew

er.append(ernew)

i+=1

SolutionTable = [[i, x[i], er[i]] for i in range(len(x))]

LineTable = [[],[]]

for i in range(n-1):

LineTable[0].extend([SolutionTable[i][1], SolutionTable[i][1]])

LineTable[1].extend([SolutionTable[i][1], g(SolutionTable[i][1])])

x_val = np.arange(0,5.1,0.1)

plt.plot(x_val,x_val, label="x")

plt.plot(x_val,g(x_val), label="g3(x)")

plt.plot(LineTable[0],LineTable[1],'k--')

plt.legend(); plt.grid(); plt.show()

print("Iteration =",n-2," x_n =",round(SolutionTable[n-2][1],5)," g(x_n) =",round(g(SolutionTable[n-2][1]),5))

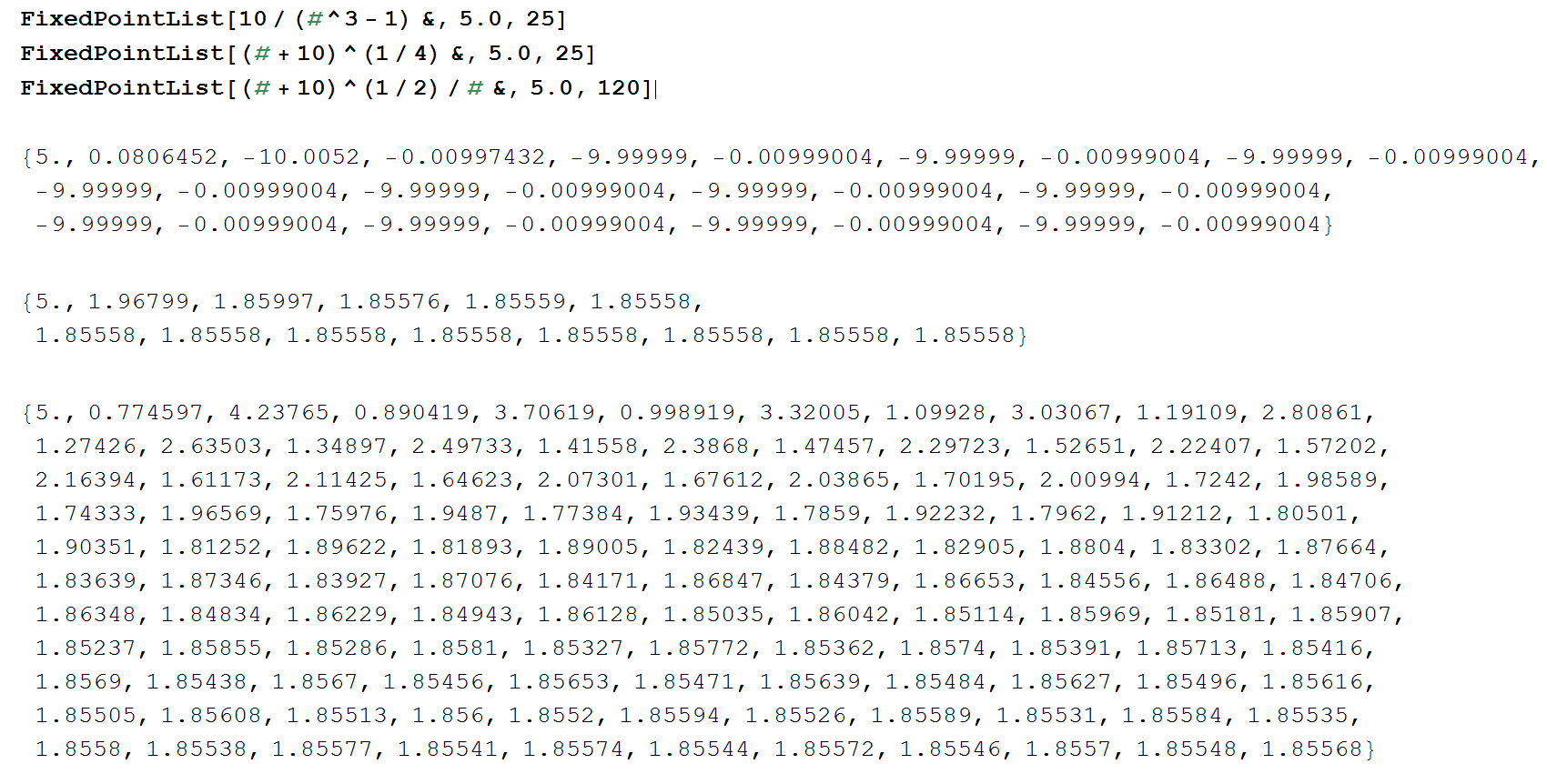

The following shows the output if we use the built-in fixed-point iteration function for each of ![]() ,

, ![]() , and

, and ![]() .

. ![]() oscillates and so, it will never converge.

oscillates and so, it will never converge. ![]() converges really fast (3 to 4 iterations).

converges really fast (3 to 4 iterations). ![]() converges really slow, taking up to 120 iterations to converge.

converges really slow, taking up to 120 iterations to converge.

Lecture video

Very interesting.