Numerical Integration: Romberg’s Method

Romberg’s Method

Richardson Extrapolation

Romberg’s method applied a technique called the Richardson extrapolation to the trapezoidal integration rule (and can be applied to any of the rules above). The general Richardson extrapolation technique is a powerful method that combines two or more less accurate solutions to obtain a highly accurate one. The essential ingredient of the method is the knowledge of the order of the truncation error. Assuming a numerical technique approximates the value of ![]() by choosing the value of

by choosing the value of ![]() , and calculating an estimate

, and calculating an estimate ![]() according to the equation:

according to the equation:

![]()

Where ![]() is a constant whose value does not need to be known and

is a constant whose value does not need to be known and ![]() . If a smaller

. If a smaller ![]() is chosen with

is chosen with ![]() , then the new estimate for

, then the new estimate for ![]() is

is ![]() and the equation becomes:

and the equation becomes:

![]()

Multiplying the second equation by ![]() and subtracting the first equation yields:

and subtracting the first equation yields:

![]()

Therefore:

![]()

In other words, if the first error term in a method is directly proportional to ![]() , then, by combining two values of

, then, by combining two values of ![]() , we can get an estimate whose error term is directly proportional to

, we can get an estimate whose error term is directly proportional to ![]() . The above equation can also be written as:

. The above equation can also be written as:

![Rendered by QuickLaTeX.com \[ A=\frac{(t^{k_1}A^*\left(\frac{h_1}{t}\right)-A^*(h_1))}{(t^{k_1}-1)}+\mathcal{O}(h_1^{k_2}) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-92d5a28f8ad10e885da9a0c1eab3209c_l3.png)

Romberg’s Method Using the Trapezoidal Rule

As shown above the truncation error in the trapezoidal rule is ![]() . If the trapezoidal numerical integration scheme is applied for a particular value of

. If the trapezoidal numerical integration scheme is applied for a particular value of ![]() and then applied again for half that value (i.e.,

and then applied again for half that value (i.e., ![]() ), then, substituting in the equation above yields:

), then, substituting in the equation above yields:

![]()

It should be noted that for the trapezoidal rule, ![]() is equal to 4, i.e., the error term using this method is

is equal to 4, i.e., the error term using this method is ![]() . To explicitly observe this, consider the error analysis for the trapezoidal rule. As proved in the section of Trapezoidal Rule, the error analysis led to the following expression,

. To explicitly observe this, consider the error analysis for the trapezoidal rule. As proved in the section of Trapezoidal Rule, the error analysis led to the following expression,

![Rendered by QuickLaTeX.com \[ \begin{split} \int_a^b f(x)\mathrm dx &=\frac{h}{2}\Big ( f(a) + f(b) +2\sum_{j=1}^{n-1} f(x_j)\Big )\\ & - (b-a)\frac{h^2}{12}f''(\xi)- (b-a)\frac{h^4}{480}f^{(4)}(\eta) + \cdots \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-c15dc6b4ea432fc50d693e8286bb8908_l3.png)

Letting ![]() ,

, ![]() ,

, ![]() and

and ![]() , we can write:

, we can write:

![]()

showing that the error term is ![]() .

.

This method can be applied successively by halving the value of ![]() to obtain an error estimate that is

to obtain an error estimate that is ![]() . Assuming that the trapezoidal integration is available for

. Assuming that the trapezoidal integration is available for ![]() ,

, ![]() ,

, ![]() , and

, and ![]() , then the first two can be used to find an estimate

, then the first two can be used to find an estimate ![]() that is

that is ![]() , and the last two can be used to find an estimate

, and the last two can be used to find an estimate ![]() that is

that is ![]() . Applying the Richardson extrapolation equation to

. Applying the Richardson extrapolation equation to ![]() and

and ![]() and noticing that

and noticing that ![]() in this case produce the following estimate for

in this case produce the following estimate for ![]() :

:

![]()

The process can be extended even further to find an estimate that is ![]() . In general, the process can be written as follows:

. In general, the process can be written as follows:

![]()

where ![]() indicates the more accurate integral, while

indicates the more accurate integral, while ![]() indicates the less accurate integral,

indicates the less accurate integral, ![]() correspond to the calculations with error terms that correspond to

correspond to the calculations with error terms that correspond to ![]() , respectively. The above equation is applied for

, respectively. The above equation is applied for ![]() . For example, setting

. For example, setting ![]() and

and ![]() yields:

yields:

![]()

Similarly, setting ![]() and

and ![]() yields:

yields:

![]()

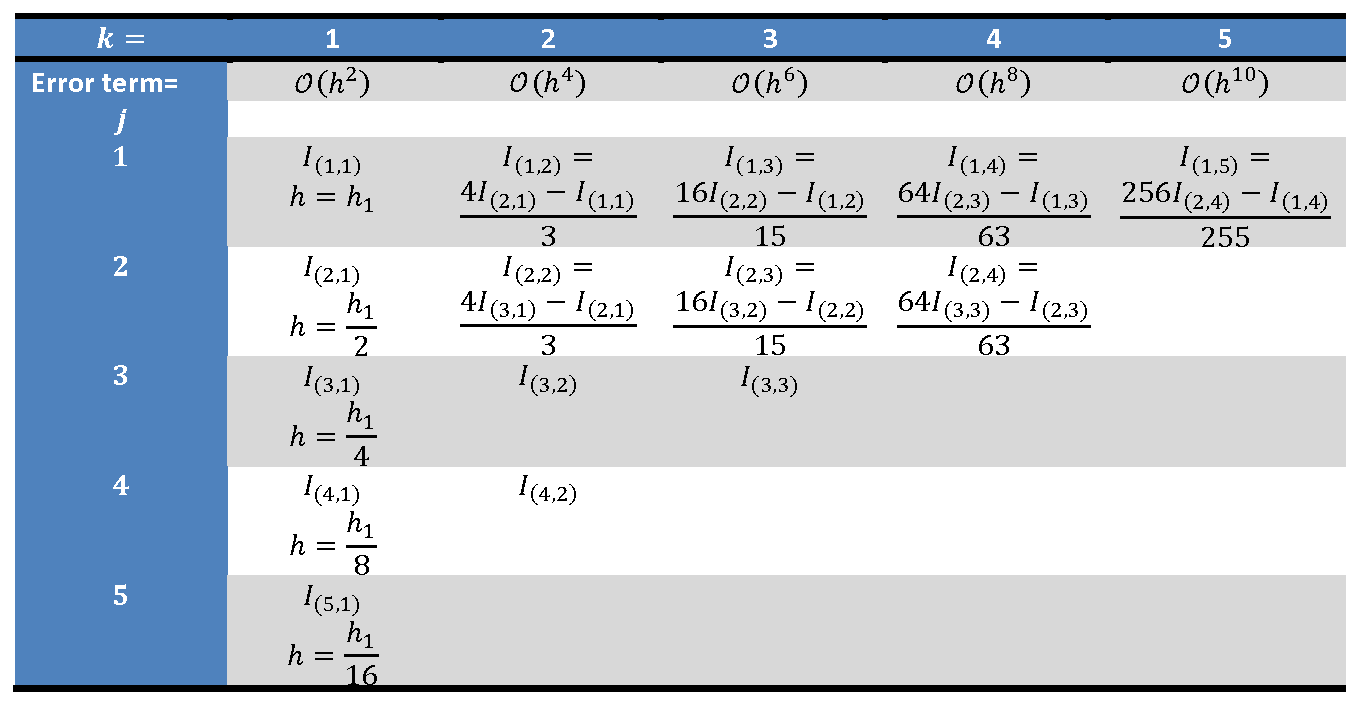

The following table sketches how the process is applied to obtain an estimate that is ![]() using this algorithm. The first column corresponds to evaluating the integral for the values of

using this algorithm. The first column corresponds to evaluating the integral for the values of ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() . These correspond to

. These correspond to ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() trapezoids, respectively. The equation above is then used to fill the remaining values in the table.

trapezoids, respectively. The equation above is then used to fill the remaining values in the table.

A stopping criterion for this algorithm can be set as:

![]()

The following Mathematica code provides a procedural implementation of the Romberg’s method using the trapezoidal rule. The first procedure “IT[f,a,b,n]” provides the numerical estimate for the integral of ![]() from

from ![]() to

to ![]() with

with ![]() being the number of trapezoids. The “RI[f,a,b,k,n1]” procedure builds the Romberg’s method table shown above up to

being the number of trapezoids. The “RI[f,a,b,k,n1]” procedure builds the Romberg’s method table shown above up to ![]() columns.

columns. ![]() provides the number of subdivisions (number of trapezoids) in the first entry in the table

provides the number of subdivisions (number of trapezoids) in the first entry in the table ![]() .

.

IT[f_, a_, b_, n_] := (h = (b - a)/n; Sum[(f[a + (i - 1)*h] + f[a + i*h])*h/2, {i, 1, n}])

RI[f_, a_, b_, k_,n1_] := (RItable = Table["N/A", {i, 1, k}, {j, 1, k}];

Do[RItable[[i, 1]] = IT[f, a, b, 2^(i - 1)*n1], {i, 1, k}];

Do[RItable[[j, ik]] = (2^(2 (ik - 1)) RItable[[j + 1, ik - 1]] - RItable[[j, ik - 1]])/(2^(2 (ik - 1)) - 1), {ik, 2, k}, {j, 1,k - ik + 1}];

ERtable = Table[(RItable[[1, i + 1]] - RItable[[1, i]])/RItable[[1, i + 1]], {i, 1, k - 1}];

{RItable, ERtable})

a = 0.0;

b = 2;

k=3;

n1=1;

f[x_] := E^x

Integrate[f[x], {x, a, b}]

s = RI[f, a, b, k,n1];

s[[1]] // MatrixForm

s[[2]] // MatrixForm

import numpy as np

from scipy import integrate

def IT(f, a, b, n):

h = (b - a)/n

return sum([(f(a + i*h) + f(a + (i + 1)*h))*h/2 for i in range(n)])

def RI(f, a, b, k, n1):

RItable = [[None for i in range(k)] for j in range(k)]

for i in range(k):

RItable[i][0] = IT(f, a, b, 2**i*n1)

for ik in range(1, k):

for j in range(k - ik):

RItable[j][ik] = (2**(2*ik)*RItable[j + 1][ik - 1] - RItable[j][ik - 1])/(2**(2*ik) - 1)

ERtable = [(RItable[0][i + 1] - RItable[0][i])/RItable[0][i + 1] for i in range(k - 1)]

return [RItable, ERtable]

a = 0.0

b = 2

k = 3

n1 = 1

def f(x): return np.exp(x)

y, error = integrate.quad(f, a, b)

print("Integrate: ",y)

s = RI(f, a, b, k,n1)

display("RItable:",s[0])

display("ERtable:",s[1])

Example 1

As shown in the example above in the trapezoidal rule, when 71 trapezoids were used, the estimate for the integral of ![]() from

from ![]() to

to ![]() was

was ![]() with an absolute error of

with an absolute error of ![]() . Using the Romberg’s method, find the depth

. Using the Romberg’s method, find the depth ![]() starting with

starting with ![]() so that the estimate for the same integral has the same or less absolute error

so that the estimate for the same integral has the same or less absolute error ![]() . Compare the number of computations required by the Romberg’s method to that required by the traditional trapezoidal rule to obtain an estimate with the same absolute error.

. Compare the number of computations required by the Romberg’s method to that required by the traditional trapezoidal rule to obtain an estimate with the same absolute error.

Solution

First, we will start with ![]() . For that, we will need to compute the integral numerically using the trapezoidal rule for a chosen

. For that, we will need to compute the integral numerically using the trapezoidal rule for a chosen ![]() and then for

and then for ![]() to fill in the entries

to fill in the entries ![]() and

and ![]() in the Romberg’s method table.

in the Romberg’s method table.

Assuming ![]() , i.e., 1 trapezoid, the value of

, i.e., 1 trapezoid, the value of ![]() . Assuming a trapezoid width of

. Assuming a trapezoid width of ![]() , i.e., two trapezoids on the interval, the value of

, i.e., two trapezoids on the interval, the value of ![]() . Using the Romberg table, the value of

. Using the Romberg table, the value of ![]() can be computed as:

can be computed as:

![]()

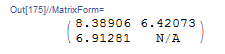

with a corresponding absolute error of ![]() .

.

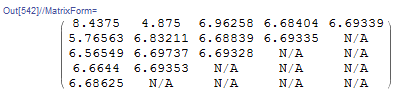

The output Romberg table with depth ![]() has the following form:

has the following form:

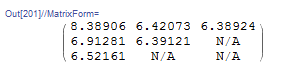

Next, to fill in the table up to depth ![]() , the value of

, the value of ![]() in the table needs to be calculated. This value corresponds to the calculation of the trapezoidal rule with a trapezoid width of

in the table needs to be calculated. This value corresponds to the calculation of the trapezoidal rule with a trapezoid width of ![]() , i.e., 4 trapezoids on the whole interval. Using the trapezoidal rule, we get

, i.e., 4 trapezoids on the whole interval. Using the trapezoidal rule, we get ![]() . The remaining values in the Romberg table can be calculated as follows:

. The remaining values in the Romberg table can be calculated as follows:

![Rendered by QuickLaTeX.com \[\begin{split} I_{2,2}&=\frac{4I_{3,1}-I_{2,1}}{3}=\frac{4\times 6.52161-6.91281}{3}=6.39121\\ I_{1,3}&=\frac{16I_{2,2}-I_{1,2}}{15}=\frac{16\times 6.39121-6.42073}{15}=6.38924\\ \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-877ec4749d165e0524a75a2e8bca58f6_l3.png)

with the absolute error in ![]() that is less than the specified error:

that is less than the specified error: ![]() . The output Romberg table with depth

. The output Romberg table with depth ![]() has the following form:

has the following form:

Number of Computations

For the same error, the traditional trapezoidal rule would have required 71 trapezoids. If we assume each trapezoid is one computation, the Romberg’s method requires computations of 1 trapezoid in ![]() , two trapezoids in

, two trapezoids in ![]() , and 4 trapezoids in

, and 4 trapezoids in ![]() with a total of 7 corresponding computations. I.e., almost one tenth of the computational resources is required by the Romberg’s method in this example to produce the same level of accuracy!

with a total of 7 corresponding computations. I.e., almost one tenth of the computational resources is required by the Romberg’s method in this example to produce the same level of accuracy!

The following Mathematica code was used to produce the above calculations.

View Mathematica CodeIT[f_, a_, b_, n_] := (h = (b - a)/n; Sum[(f[a + (i - 1)*h] + f[a + i*h])*h/2, {i, 1, n}])

RI[f_, a_, b_, k_, n1_] := (RItable = Table["N/A", {i, 1, k}, {j, 1, k}];

Do[RItable[[i, 1]] = IT[f, a, b, 2^(i - 1)*n1], {i, 1, k}];

Do[RItable[[j,ik]] = (2^(2 (ik - 1)) RItable[[j + 1, ik - 1]] - RItable[[j, ik - 1]])/(2^(2 (ik - 1)) - 1), {ik, 2, k}, {j, 1, k - ik + 1}];

ERtable = Table[(RItable[[1, i + 1]] - RItable[[1, i]])/RItable[[1, i + 1]], {i, 1, k - 1}];

{RItable, ERtable})

a = 0.0;

b = 2;

(*Depth of Romberg Table*)

k = 3;

n1 = 1;

f[x_] := E^x

Itrue = Integrate[f[x], {x, a, b}]

s = RI[f, a, b, k, n1];

s[[1]] // MatrixForm

s[[2]] // MatrixForm

ErrorTable = Table[If[RItable[[i, j]] == "N/A", "N/A", Abs[Itrue - RItable[[i, j]]]], {i, 1, Length[RItable]}, {j, 1, Length[RItable]}];

ErrorTable // MatrixForm

import numpy as np

from scipy import integrate

def IT(f, a, b, n):

h = (b - a)/n

return sum([(f(a + i*h) + f(a + (i + 1)*h))*h/2 for i in range(int(n))])

def RI(f, a, b, k, n1):

RItable = [[None for i in range(k)] for j in range(k)]

for i in range(k):

RItable[i][0] = IT(f, a, b, 2**i*n1)

for ik in range(1, k):

for j in range(k - ik):

RItable[j][ik] = (2**(2*ik)*RItable[j + 1][ik - 1] - RItable[j][ik - 1])/(2**(2*ik) - 1)

ERtable = [(RItable[0][i + 1] - RItable[0][i])/RItable[0][i + 1] for i in range(k - 1)]

return [RItable, ERtable]

a = 0.0

b = 2

# Depth of Romberg Table

k = 3

n1 = 1

def f(x): return np.exp(x)

Itrue, error = integrate.quad(f, a, b)

print("Itrue: ",Itrue)

s = RI(f, a, b, k, n1)

RItable = s[0]

display("RItable:",s[0])

display("ERtable:",s[1])

ErrorTable = [[None if RItable[i][j] == None else abs(Itrue - RItable[i][j]) for j in range(len(RItable))] for i in range(len(RItable))]

display("ErrorTable",ErrorTable)

Example 2

Consider the integral:

![]()

Using the trapezoidal rule, draw a table with the following columns: ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() , where

, where ![]() is the number of trapezoids,

is the number of trapezoids, ![]() is the width of each trapezoid,

is the width of each trapezoid, ![]() is the estimate using the trapezoidal rule,

is the estimate using the trapezoidal rule, ![]() is the true value of the integral, and

is the true value of the integral, and ![]() is the absolute value of the error. Use

is the absolute value of the error. Use ![]() . Similarly, provide the Romberg table with depth

. Similarly, provide the Romberg table with depth ![]() . Compare the number of computations in each and the level of accuracy.

. Compare the number of computations in each and the level of accuracy.

Solution

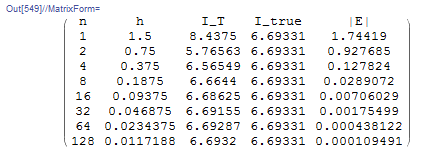

The true value of the integral can be computed using Mathematica as ![]() . For the trapezoidal rule, the following Mathematica code is used to produce the required table for the specified values of

. For the trapezoidal rule, the following Mathematica code is used to produce the required table for the specified values of ![]() :

:

IT[f_, a_, b_, n_] := (h = (b - a)/n; Sum[(f[a + (i - 1)*h] + f[a + i*h])*h/2, {i, 1, n}])

a = 0.0;

b = 1.5;

f[x_] := 2 + 2 x + x^2 + Sin[2 Pi*x] + Cos[2 Pi*x/0.5]

Title = {"n", "h", "I_T", "I_true", "|E|"};

Ii = Table[{2^(i - 1), (b - a)/2^(i - 1), ss = IT[f, a, b, 2^(i - 1)], Itrue, Abs[Itrue - ss]}, {i, 1, 8}];

Ii = Prepend[Ii, Title];

Ii // MatrixForm

import numpy as np import pandas as pd from scipy import integrate def IT(f, a, b, n): h = (b - a)/n return sum([(f(a + i*h) + f(a + (i + 1)*h))*h/2 for i in range(int(n))]) a = 0.0 b = 1.5 def f(x): return 2 + 2*x + x**2 + np.sin(2*np.pi*x) + np.cos(2*np.pi*x/0.5) Itrue, error = integrate.quad(f, a, b) Ii = [] for i in range(8): ss = IT(f, a, b, 2**i) Ii.append([2**i, (b - a)/2**i, ss, Itrue, abs(Itrue - ss)]) Ii = pd.DataFrame(Ii, columns=["n", "h", "I_T", "I_true", "|E|"]) display(Ii)

The table shows that with 128 subdivisions, the value of the absolute error is 0.00011.

For the Romberg table, the code developed above is used to produce the following table:

View Mathematica CodeIT[f_, a_, b_, n_] := (h = (b - a)/n; Sum[(f[a + (i - 1)*h] + f[a + i*h])*h/2, {i, 1, n}])

RI[f_, a_, b_, k_, n1_] := (RItable = Table["N/A", {i, 1, k}, {j, 1, k}];

Do[RItable[[i, 1]] = IT[f, a, b, 2^(i - 1)*n1], {i, 1, k}];

Do[RItable[[j, ik]] = (2^(2 (ik - 1)) RItable[[j + 1, ik - 1]] - RItable[[j, ik - 1]])/(2^(2 (ik - 1)) - 1), {ik, 2, k}, {j, 1, k - ik + 1}];

ERtable = Table[(RItable[[1, i + 1]] - RItable[[1, i]])/ RItable[[1, i + 1]], {i, 1, k - 1}];

{RItable, ERtable})

a = 0.0;

b = 1.5;

k = 5;

n1 = 1;

f[x_] := 2 + 2 x + x^2 + Sin[2 Pi*x] + Cos[2 Pi*x/0.5]

Itrue = Integrate[f[x], {x, a, b}]

s = RI[f, a, b, k, n1];

s[[1]] // MatrixForm

s[[2]] // MatrixForm

ErrorTable = Table[If[RItable[[i, j]] == "N/A", "N/A", Abs[Itrue - RItable[[i, j]]]], {i, 1, Length[RItable]}, {j, 1, Length[RItable]}];

ErrorTable // MatrixForm

import numpy as np

from scipy import integrate

def IT(f, a, b, n):

h = (b - a)/n

return sum([(f(a + i*h) + f(a + (i + 1)*h))*h/2 for i in range(int(n))])

def RI(f, a, b, k, n1):

RItable = [[None for i in range(k)] for j in range(k)]

for i in range(k):

RItable[i][0] = IT(f, a, b, 2**i*n1)

for ik in range(1, k):

for j in range(k - ik):

RItable[j][ik] = (2**(2*ik)*RItable[j + 1][ik - 1] - RItable[j][ik - 1])/(2**(2*ik) - 1)

ERtable = [(RItable[0][i + 1] - RItable[0][i])/RItable[0][i + 1] for i in range(k - 1)]

return [RItable, ERtable]

a = 0.0

b = 1.5

k = 5

n1 = 1

def f(x): return 2 + 2*x + x**2 + np.sin(2*np.pi*x) + np.cos(2*np.pi*x/0.5)

Itrue, error = integrate.quad(f, a, b)

print("Itrue: ",Itrue)

s = RI(f, a, b, k, n1)

RItable = s[0]

display("RItable:",s[0])

display("ERtable:",s[1])

ErrorTable = [[None if RItable[i][j] == None else round(abs(Itrue - RItable[i][j]),5) for j in range(len(RItable))] for i in range(len(RItable))]

display("ErrorTable",ErrorTable)

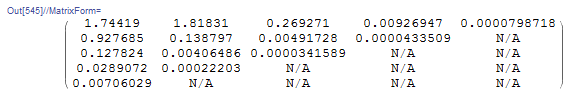

The corresponding errors are given in the following table:

Comparing the table produced for the traditional trapezoidal method and that produced by the Romberg’s method reveals how powerful the Romberg’s method is. The Romberg table utilizes only the first 5 entries (up to ![]() ) in the traditional trapezoidal method table and then using a few calculations according to the Romberg’s method equation, produces a value with an absolute error of 0.0000799 which is less than that with traditional trapezoidal rule with

) in the traditional trapezoidal method table and then using a few calculations according to the Romberg’s method equation, produces a value with an absolute error of 0.0000799 which is less than that with traditional trapezoidal rule with ![]() .

.

Lecture Video

Please note that the numbering of this lecture video is based on an old numbering system.