Introduction to Numerical Analysis: Finding Roots of Equations

Let ![]() be a function. One of the most basic problems in numerical analysis is to find the value

be a function. One of the most basic problems in numerical analysis is to find the value ![]() that would render

that would render ![]() . This value

. This value ![]() is called a root of the equation

is called a root of the equation ![]() . As an example, consider the function

. As an example, consider the function ![]() . Consider the equation

. Consider the equation ![]() . Clearly, there is only one root to this equation which is

. Clearly, there is only one root to this equation which is ![]() . Alternatively, consider

. Alternatively, consider ![]() defined as

defined as ![]() with

with ![]() . There are two analytical roots for the equation

. There are two analytical roots for the equation ![]() given by

given by

![]()

In this section we are going to present three classes of methods: graphical, bracketing, and open methods for finding roots of equations.

Graphical Methods

Graphical methods rely on a computational device that calculates the values of the function along an interval at specific steps, and then draws the graph of the function. By visual inspection, the analyst can identify the points at which the function crosses the ![]() axis. Graphical methods are very useful for one-dimensional problems, but for multi-dimensional problems it is not possible to visualize a graph of a function of multi-variables.

axis. Graphical methods are very useful for one-dimensional problems, but for multi-dimensional problems it is not possible to visualize a graph of a function of multi-variables.

Example

As an example, consider ![]() . We wish to find the values of

. We wish to find the values of ![]() (i.e., the roots) that would satisfy the equation

(i.e., the roots) that would satisfy the equation ![]() for

for ![]() . The fastest way is to plot the graph (even Microsoft Excel would serve as an appropriate tool here) and then visually inspect the locations where the graph crosses the

. The fastest way is to plot the graph (even Microsoft Excel would serve as an appropriate tool here) and then visually inspect the locations where the graph crosses the ![]() axis:

axis:

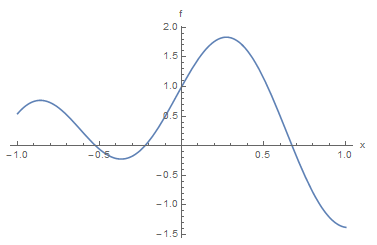

As shown in the above graph, the equation has three roots and just by visual inspection, the roots are around ![]() ,

, ![]() , and

, and ![]() . Of course these estimates are not that accurate for

. Of course these estimates are not that accurate for ![]() ,

, ![]() , and

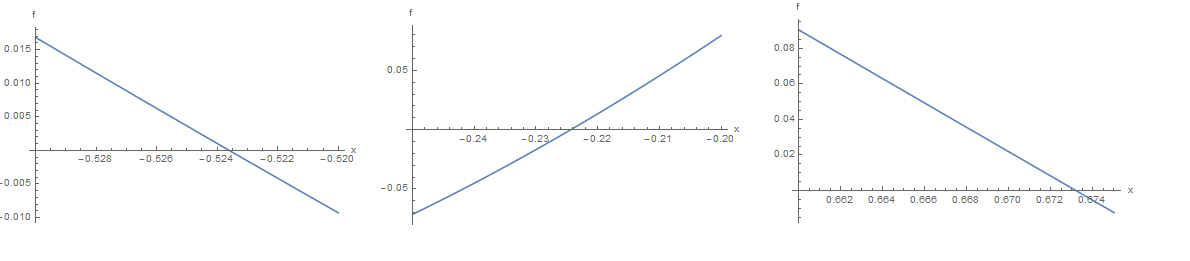

, and ![]() . Whether these estimates are acceptable or not, depends on the requirements of the problem. We can further zoom in using the software to try and get better estimates:

. Whether these estimates are acceptable or not, depends on the requirements of the problem. We can further zoom in using the software to try and get better estimates:

Visual inspection of the zoomed-in graphs provides the following estimates: ![]() ,

, ![]() , and

, and ![]() which are much better estimates since:

which are much better estimates since: ![]() ,

, ![]() , and

, and ![]()

Clear[x]

f = Sin[5 x] + Cos[2 x]

Plot[f, {x, -1, 1}, AxesLabel -> {"x", "f"}]

f /. x -> -0.525

f /. x -> -0.21

f /. x -> 0.675

Plot[f, {x, -0.53, -0.52}, AxesLabel -> {"x", "f"}]

Plot[f, {x, -0.25, -0.2}, AxesLabel -> {"x", "f"}]

Plot[f, {x, 0.66, 0.675}, AxesLabel -> {"x", "f"}]

f /. x -> -0.5235

f /. x -> -0.225

f /. x -> 0.6731

Bracketing Methods

An elementary observation from the previous method is that in most cases, the function changes signs around the roots of an equation. The bracketing methods rely on the intermediate value theorem.

Intermediate Value Theorem

Statement: Let ![]() be continuous and

be continuous and ![]() . Then,

. Then, ![]() such that

such that ![]() . The same applies if

. The same applies if ![]() .

.

The proof for the intermediate value theorem relies on the assumption of continuity of the function ![]() . Intuitively, because the function

. Intuitively, because the function ![]() is continuous, then for the values of

is continuous, then for the values of ![]() to change from

to change from ![]() to

to ![]() it has to pass by every possible value between

it has to pass by every possible value between ![]() and

and ![]() . The consequence of the theorem is that if the function

. The consequence of the theorem is that if the function ![]() is such that

is such that ![]() , then, there is

, then, there is ![]() such that

such that ![]() . We can proceed to find

. We can proceed to find ![]() iteratively using either the bisection method or the false position method.

iteratively using either the bisection method or the false position method.

Bisection Method

In the bisection method, if ![]() , an estimate for the root of the equation

, an estimate for the root of the equation ![]() can be found as the average of

can be found as the average of ![]() and

and ![]() :

:

![]()

Upon evaluating ![]() , the next iteration would be to set either

, the next iteration would be to set either ![]() or

or ![]() such that for the next iteration the root

such that for the next iteration the root ![]() is between

is between ![]() and

and ![]() . The following describes an algorithm for the bisection method given

. The following describes an algorithm for the bisection method given ![]() ,

, ![]() ,

, ![]() , and maximum number of iterations:

, and maximum number of iterations:

Step 1: Evaluate ![]() and

and ![]() to ensure that

to ensure that ![]() . Otherwise, exit with an error.

. Otherwise, exit with an error.

Step 2: Calculate the value of the root in iteration ![]() as

as ![]() . Check which of the following applies:

. Check which of the following applies:

- If

, then the root has been found, the value of the error

, then the root has been found, the value of the error  . Exit.

. Exit. - If

, then for the next iteration,

, then for the next iteration,  is bracketed between

is bracketed between  and

and  . The value of

. The value of  .

. - If

, then for the next iteration,

, then for the next iteration,  is bracketed between

is bracketed between  and

and  . The value of

. The value of  .

.

Step 3: Set ![]() . If

. If ![]() reaches the maximum number of iterations or if

reaches the maximum number of iterations or if ![]() , then the iterations are stopped. Otherwise, return to step 2 with the new interval

, then the iterations are stopped. Otherwise, return to step 2 with the new interval ![]() and

and ![]() .

.

Example

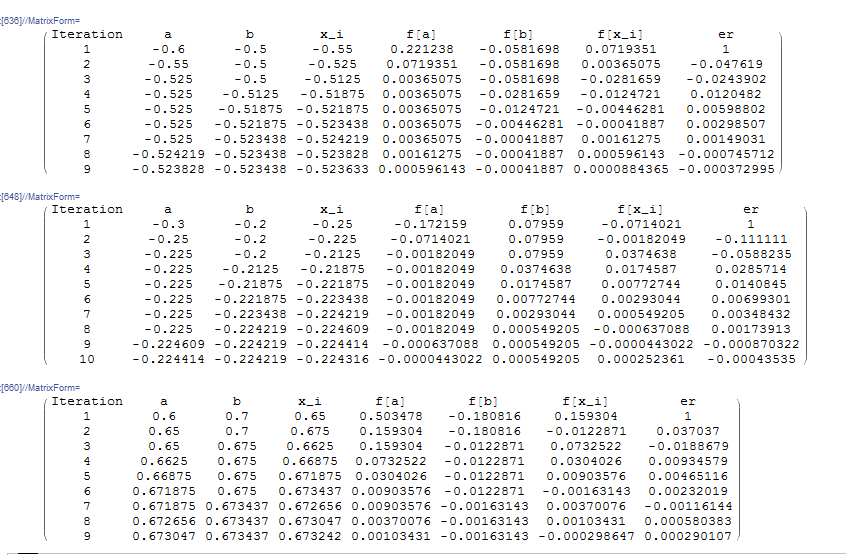

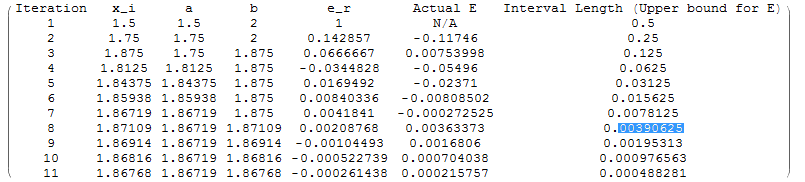

Setting ![]() and applying this process to

and applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 9 iterations with

after 9 iterations with ![]() as shown below. Similarly, applying this process to

as shown below. Similarly, applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 10 iterations with

after 10 iterations with ![]() while applying this process to

while applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 9 iterations with

after 9 iterations with ![]() :

:

Clear[f, x, ErrorTable, ei]

f[x_] := Sin[5 x] + Cos[2 x]

(*The following function returns x_i and the new interval (a,b) along with an error note. The order of the output is {xi,new a, new b, note}*)

bisec2[f_, a_, b_] := (xi = (a + b)/2; Which[

f[a]*f[b] > 0, {xi, a, b, "Root Not Bracketed"},

f[xi] == 0, {xi, a, b, "Root Found"},

f[xi]*f[a] < 0, {xi, a, xi, "Root between a and xi"},

f[xi]*f[b] < 0, {xi, xi, b, "Root between xi and b"}])

(*Problem Setup*)

MaxIter = 20;

eps = 0.0005;

(*First root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {-0.6};

btable = {-0.5};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = bisec2[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

(*Second Root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {-0.3};

btable = {-0.2};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = bisec2[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

(*Third Root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {0.6};

btable = {0.7};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = bisec2[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

The following code can be used to implement the bisection method in MATLAB. For the sake of demonstration, it finds the roots of the function in above example. The example function is defined in another file

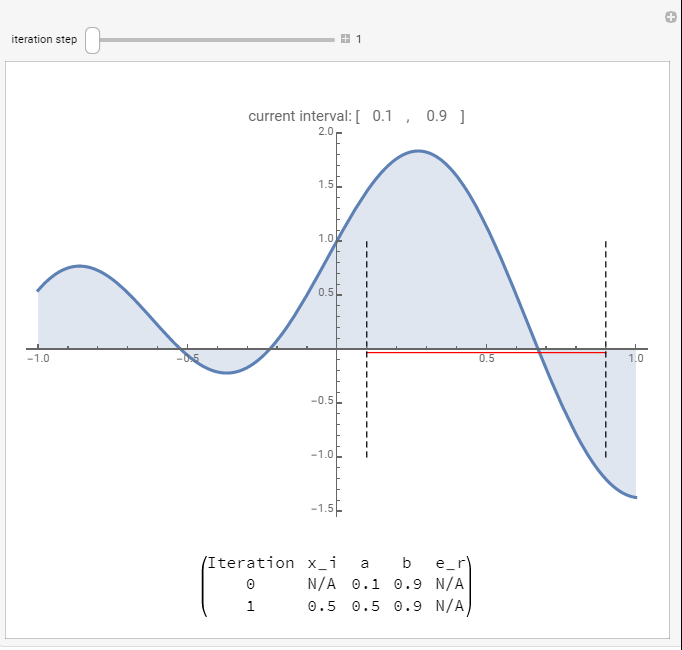

The following tool illustrates this process for ![]() and

and ![]() . Use the slider to view the process after each iteration. In the first iteration, the interval

. Use the slider to view the process after each iteration. In the first iteration, the interval ![]() .

. ![]() , so,

, so, ![]() . In the second iteration, the interval becomes

. In the second iteration, the interval becomes ![]() and the new estimate

and the new estimate ![]() . The relative approximate error in the estimate

. The relative approximate error in the estimate ![]() . You can view the process to see how it converges after 12 iterations.

. You can view the process to see how it converges after 12 iterations.

Error Estimation

If ![]() is the true value of the root and

is the true value of the root and ![]() is the estimate, then, the number of iterations

is the estimate, then, the number of iterations ![]() needed to ensure that the absolute value of the error

needed to ensure that the absolute value of the error ![]() is less than or equal to a certain value can be easily obtained. Let

is less than or equal to a certain value can be easily obtained. Let ![]() be the length of the original interval used. The first estimate

be the length of the original interval used. The first estimate ![]() and so, in the next iteration, the interval where the root is contained has a length of

and so, in the next iteration, the interval where the root is contained has a length of ![]() . As the process evolves, the interval for the iteration number

. As the process evolves, the interval for the iteration number ![]() has a length of

has a length of ![]() . Since the true value exists in an interval of length

. Since the true value exists in an interval of length ![]() , the absolute value of the error is such that:

, the absolute value of the error is such that:

![]()

Therefore, for a desired estimate of the absolute value of the error, say ![]() , the number of iterations required is:

, the number of iterations required is:

![]()

Example

As an example, consider ![]() , if we wish to find the root of the equation

, if we wish to find the root of the equation ![]() in the interval

in the interval ![]() with an absolute error less than or equal to 0.004, the number of iterations required is 8:

with an absolute error less than or equal to 0.004, the number of iterations required is 8:

![]()

The actual root with 10 significant digits is ![]() .

.

Using the process above, after the first iteration, ![]() and so, the root lies between

and so, the root lies between ![]() and

and ![]() . So, the length of the interval is equal to 0.5 and the error in the estimate is less than 0.5. The length of the interval after iteration 8 is equal to 0.0039 and so the error in the estimate is less than 0.0039. It should be noted however that the actual error was less than this upper bound after the seventh iteration.

. So, the length of the interval is equal to 0.5 and the error in the estimate is less than 0.5. The length of the interval after iteration 8 is equal to 0.0039 and so the error in the estimate is less than 0.0039. It should be noted however that the actual error was less than this upper bound after the seventh iteration.

Clear[f, x, tt, ErrorTable, nn];

f[x_] := x^3 + x^2 - 10;

bisec[f_, a_, b_] := If[f[a] f[b] <= 0, If[f[(a + b)/2] f[a] <= 0, {(a + b)/2, a, (a + b)/2}, If[f[(a + b)/2] f[b] <= 0, {(a + b)/2, (a + b)/2, b}]], "Error, root is not bracketed"];

(*Exact Solution*)

t = Solve[x^3 + x^2 - 10 == 0, x]

Vt = N[x /. t[[1]], 10]

(*Problem Setup*)

MaxIter = 20;

eps = 0.0005;

(*First root*)

x = {bisec[f, 1., 2]};

ErrorTable = {1};

ErrorTable2 = {"N/A"};

IntervalLength = {0.5};

i = 2;

While[And[i <= MaxIter, Abs[ErrorTable[[i - 1]]] > eps],

ri = bisec[f, x[[i - 1, 2]], x[[i - 1, 3]]];

If[ri=="Error, root is not bracketed",ErrorTable[[1]]="Error, root is not bracketed";Break[]];

x = Append[x, ri]; ei = (x[[i, 1]] - x[[i - 1, 1]])/x[[i, 1]]; e2i = x[[i, 1]] - Vt; ErrorTable = Append[ErrorTable, ei]; IntervalLength = Append[IntervalLength, (x[[i - 1, 3]] - x[[i - 1, 2]])/2]; ErrorTable2 = Append[ErrorTable2, e2i]; i++]

x // MatrixForm

ErrorTable // MatrixForm

Title = {"Iteration", "x_i", "a", "b", "e_r", "Actual E", "Interval Length (Upper bound for E)"};

T2 = Table[{i, x[[i, 1]], x[[i, 2]], x[[i, 3]], ErrorTable[[i]], ErrorTable2[[i]], IntervalLength[[i]]}, {i, 1, Length[x]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

The procedure for solving the above example in MATLAB is available in the following files. The polynomial function is defined in a separate file.

False Position Method

In the false position method, the new estimate ![]() at iteration

at iteration ![]() is obtained by considering the linear function passing through the two points

is obtained by considering the linear function passing through the two points ![]() and

and ![]() . The point of intersection of this line with the

. The point of intersection of this line with the ![]() axis can be obtained using one of the following formulas:

axis can be obtained using one of the following formulas:

![]()

Upon evaluating ![]() , the next iteration would be to set either

, the next iteration would be to set either ![]() or

or ![]() such that for the next iteration the root

such that for the next iteration the root ![]() is between

is between ![]() and

and ![]() . The following describes an algorithm for the false position method method given

. The following describes an algorithm for the false position method method given ![]() ,

, ![]() , “varepsilon_2”, and maximum number of iterations:

, “varepsilon_2”, and maximum number of iterations:

Step 1: Evaluate ![]() and

and ![]() to ensure that

to ensure that ![]() . Otherwise exit with an error.

. Otherwise exit with an error.

Step 2: Calculate the value of the root in iteration ![]() as

as ![]() . Check which of the following applies:

. Check which of the following applies:

- If

, then the root has been found, the value of the error

, then the root has been found, the value of the error  . Exit.

. Exit. - If

, then for the next iteration,

, then for the next iteration,  is bracketed between

is bracketed between  and

and  . The value of

. The value of  .

. - If

, then for the next iteration,

, then for the next iteration,  is bracketed between

is bracketed between  and

and  . The value of

. The value of  .

.

Step 3: If ![]() reaches the maximum number of iterations or if

reaches the maximum number of iterations or if ![]() , then the iterations are stopped. Otherwise, return to step 2.

, then the iterations are stopped. Otherwise, return to step 2.

Example

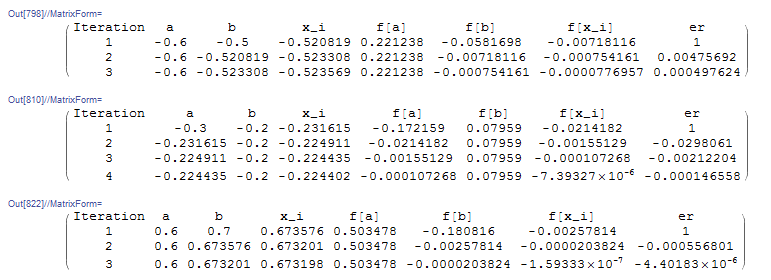

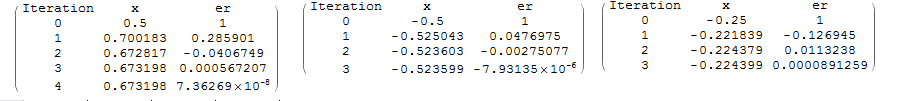

Setting ![]() and applying this process to

and applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 3 iterations with

after 3 iterations with ![]() as shown below. Similarly, applying this process to

as shown below. Similarly, applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 4 iterations with

after 4 iterations with ![]() while applying this process to

while applying this process to ![]() with

with ![]() and

and ![]() yields the estimate

yields the estimate ![]() after 3 iterations with

after 3 iterations with ![]() :

:

Clear[f, x, ErrorTable, ei]

f[x_] := Sin[5 x] + Cos[2 x]

(*The following function returns x_i and the new interval (a,b) along with an error note. The order of the output is {xi,new a, new b, note}*)

falseposition[f_, a_, b_] := (xi = (a*f[b]-b*f[a])/(f[b]-f[a]); Which[

f[a]*f[b] > 0, {xi, a, b, "Root Not Bracketed"},

f[xi] == 0, {xi, a, b, "Root Found"},

f[xi]*f[a] < 0, {xi, a, xi, "Root between a and xi"},

f[xi]*f[b] < 0, {xi, xi, b, "Root between xi and b"}])

(*Problem Setup*)

MaxIter = 20;

eps = 0.0005;

(*First root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {-0.6};

btable = {-0.5};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = falseposition[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

(*Second Root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {-0.3};

btable = {-0.2};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = falseposition[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

(*Third Root*)

ErrorTable = {1};

ErrorNoteTable = {};

atable = {0.6};

btable = {0.7};

xtable = {};

i = 1;

Stopcode = "NoStopping";

While[And[i <= MaxIter, Stopcode == "NoStopping"],

ri = falseposition[f, atable[[i]], btable[[i]]];

If[ri[[4]] == "Root Not Bracketed", xtable = Append[xtable, ri[[1]]];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

If[ri[[4]] == "Root Found", xtable = Append[xtable, ri[[1]]]; ErrorTable = Append[ErrorTable, 0];ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]]; Break[]];

atable = Append[atable, ri[[2]]];

btable = Append[btable, ri[[3]]];

xtable = Append[xtable, ri[[1]]];

ErrorNoteTable = Append[ErrorNoteTable, ri[[4]]];

If[i != 1,

ei = (xtable[[i]] - xtable[[i - 1]])/xtable[[i]];

ErrorTable = Append[ErrorTable, ei]];

If[Abs[ErrorTable[[i]]] > eps, Stopcode = "NoStopping", Stopcode = "Stop"];

i++]

Title = {"Iteration", "a", "b", "x_i", "f[a]", "f[b]", "f[x_i]", "er", "ErrorNote"};

T2 = Table[{i, atable[[i]], btable[[i]], xtable[[i]], f[atable[[i]]], f[btable[[i]]], f[xtable[[i]]], ErrorTable[[i]], ErrorNoteTable[[i]]}, {i, Length[xtable]}];

T2 = Prepend[T2, Title];

T2 // MatrixForm

The procedure for implementing the false position root finding algorithm in MATLAB is available in the following files. The example function is defined in a separate file.

The following tool illustrates this process for ![]() and

and ![]() . Use the slider to view the process after each iteration. In the first iteration, the interval

. Use the slider to view the process after each iteration. In the first iteration, the interval ![]() .

. ![]() , so,

, so, ![]() . In the second iteration, the interval becomes

. In the second iteration, the interval becomes ![]() and the new estimate

and the new estimate ![]() . The relative approximate error in the estimate

. The relative approximate error in the estimate ![]() . You can view the process to see how it converges after very few iterations.

. You can view the process to see how it converges after very few iterations.

Open Methods

Open methods do not rely on having the root squeezed between two values, but rather rely on an initial guess and then apply an iterative process to get better estimates for the root. Open methods are usually faster in convergence if they converge, but they don’t always converge. For multi-dimensions, i.e., for solving multiple nonlinear equations, open methods are easier to implement than bracketing methods which cannot be easily extended to multi-dimensions.

Fixed-Point Iteration Method

Let ![]() . A fixed point of

. A fixed point of ![]() is defined as

is defined as ![]() such that

such that ![]() . If

. If ![]() , then a fixed point of

, then a fixed point of ![]() is the intersection of the graphs of the two functions

is the intersection of the graphs of the two functions ![]() and

and ![]() .

.

The fixed-point iteration method relies on replacing the expression ![]() with the expression

with the expression ![]() . Then, an initial guess for the root

. Then, an initial guess for the root ![]() is assumed and input as an argument for the function

is assumed and input as an argument for the function ![]() . The output

. The output ![]() is then the estimate

is then the estimate ![]() . The process is then iterated until the output

. The process is then iterated until the output ![]() . The following is the algorithm for the fixed-point iteration method. Assuming

. The following is the algorithm for the fixed-point iteration method. Assuming ![]() ,

, ![]() , and maximum number of iterations

, and maximum number of iterations ![]() :

:

Set ![]() , and calculate

, and calculate ![]() and compare with

and compare with ![]() . If

. If ![]() or if

or if ![]() , then stop the procedure, otherwise, repeat.

, then stop the procedure, otherwise, repeat.

The Babylonian method for finding roots described in the introduction section is a prime example of the use of this method. If we seek to find the solution for the equation ![]() or

or ![]() , then a fixed-point iteration scheme can be implemented by writing this equation in the form:

, then a fixed-point iteration scheme can be implemented by writing this equation in the form:

![]()

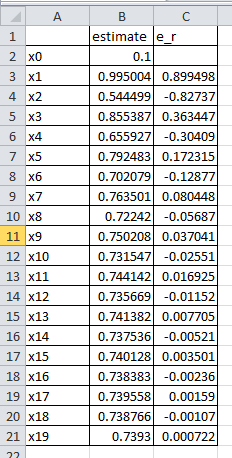

Example

Consider the function ![]() . We wish to find the root of the equation

. We wish to find the root of the equation ![]() , i.e.,

, i.e., ![]() . The expression can be rearranged to the fixed-point iteration form

. The expression can be rearranged to the fixed-point iteration form ![]() and an initial guess

and an initial guess ![]() can be used. The tolerance

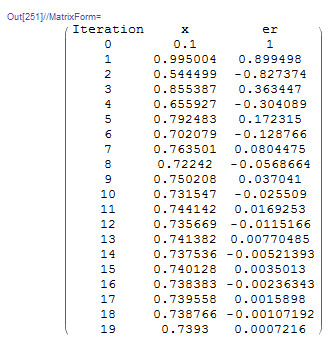

can be used. The tolerance ![]() is set to 0.001. The following is the Microsoft Excel table showing that the tolerance is achieved after 19 iterations:

is set to 0.001. The following is the Microsoft Excel table showing that the tolerance is achieved after 19 iterations:

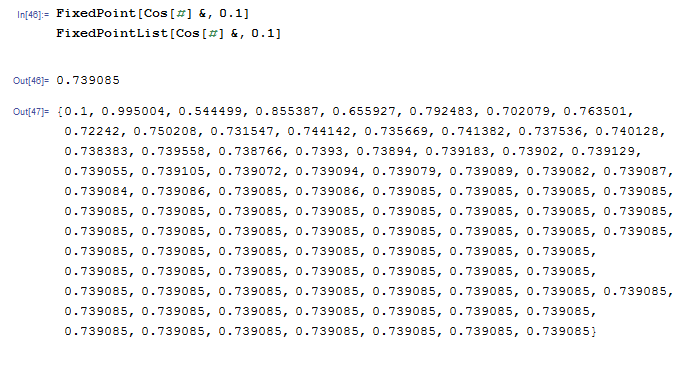

Mathematica has a built-in algorithm for the fixed-point iteration method. The function “FixedPoint[f,Expr,n]” applies the fixed-point iteration method ![]() with the initial guess being “Expr” with a maximum number of iterations “n”. As well, the function “FixedPointList[f,Expr,n]” returns the list of applying the function “n” times. If “n” is omitted, then the software applies the fixed-point iteration method until convergence is achieved. Here is a snapshot of the code and the output for the fixed-point iteration

with the initial guess being “Expr” with a maximum number of iterations “n”. As well, the function “FixedPointList[f,Expr,n]” returns the list of applying the function “n” times. If “n” is omitted, then the software applies the fixed-point iteration method until convergence is achieved. Here is a snapshot of the code and the output for the fixed-point iteration ![]() . The software finds the solution

. The software finds the solution ![]() . Compare the list below with the Microsoft Excel sheet above.

. Compare the list below with the Microsoft Excel sheet above.

Alternatively, simple code can be written in Mathematica with the following output

View Mathematica Code

x = {0.1};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = Cos[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

The following MATLAB code runs the fixed-point iteration method to find the root of a function ![]() with initial guess

with initial guess ![]() . The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. Your function should be written in the form

. The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. Your function should be written in the form ![]() . Then call the fixed point iteration function with fixedpointfun2(@(x) g(x), x0). For example, try fixedpointfun2(@(x) cos(x), 0.1)

. Then call the fixed point iteration function with fixedpointfun2(@(x) g(x), x0). For example, try fixedpointfun2(@(x) cos(x), 0.1)

Convergence

The fixed-point iteration method converges easily if in the region of interest we have ![]() . Otherwise, it does not converge. Here is an example where the fixed-point iteration method fails to converge.

. Otherwise, it does not converge. Here is an example where the fixed-point iteration method fails to converge.

Example

Consider the function ![]() . To find the root of the equation

. To find the root of the equation ![]() , the expression can be converted into the fixed-point iteration form as:

, the expression can be converted into the fixed-point iteration form as:

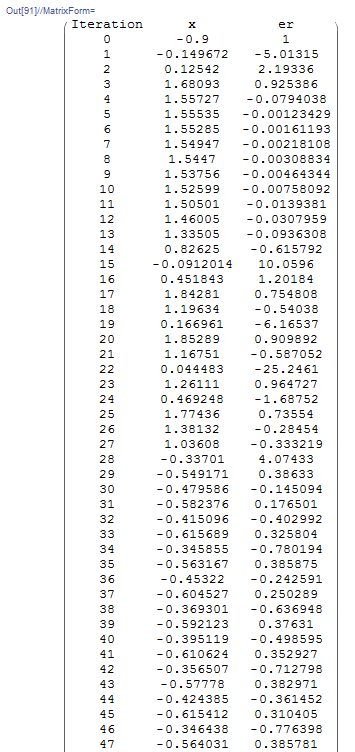

![]() . Implementing the fixed-point iteration procedure shows that this expression almost never converges but oscillates:

. Implementing the fixed-point iteration procedure shows that this expression almost never converges but oscillates:

Clear[x, g]

x = {-0.9};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

g[x_] := (Sin[5 x] + Cos[2 x]) + x

While[And[i <= MaxIter, Abs[er[[i]]] > es],xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew;er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

The following is the output table showing the first 45 iterations. The value of the error oscillates and never decreases:

The expression ![]() can be converted to different forms

can be converted to different forms ![]() . For example, assuming

. For example, assuming ![]() :

:

![]()

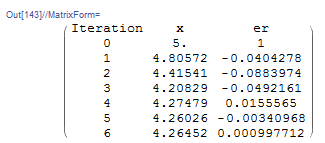

If this expression is used, the fixed-point iteration method does converge depending on the choice of ![]() . For example, setting

. For example, setting ![]() gives the estimate for the root

gives the estimate for the root ![]() with the required accuracy:

with the required accuracy:

Clear[x, g]

x = {5.};

er = {1};

es = 0.001;

MaxIter = 100;

i = 1;

g[x_] := (Sin[5 x] + Cos[2 x])/x + x

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

Obviously, unlike the bracketing methods, this open method cannot find a root in a specific interval. The root is a function of the initial guess ![]() and the form

and the form ![]() , but the user has no other way of forcing the root to be within a specific interval. It is very difficult, for example, to use the fixed-point iteration method to find the roots of the expression

, but the user has no other way of forcing the root to be within a specific interval. It is very difficult, for example, to use the fixed-point iteration method to find the roots of the expression ![]() in the interval

in the interval ![]() .

.

Analysis of Convergence of the Fixed-Point Method

The objective of the fixed-point iteration method is to find the true value ![]() that satisfies

that satisfies ![]() . In each iteration we have the estimate

. In each iteration we have the estimate ![]() . Using the mean value theorem, we can write the following expression:

. Using the mean value theorem, we can write the following expression:

![]()

for some ![]() in the interval between

in the interval between ![]() and the true value

and the true value ![]() . Replacing

. Replacing ![]() and

and ![]() in the above expression yields:

in the above expression yields:

![]()

The error after iteration ![]() is equal to

is equal to ![]() while that after iteration

while that after iteration ![]() is equal to

is equal to ![]() . Therefore, the above expression yields:

. Therefore, the above expression yields:

![]()

For the error to reduce after each iteration, the first derivative of ![]() , namely

, namely ![]() , should be bounded by 1 in the region of interest (around the required root):

, should be bounded by 1 in the region of interest (around the required root):

![]()

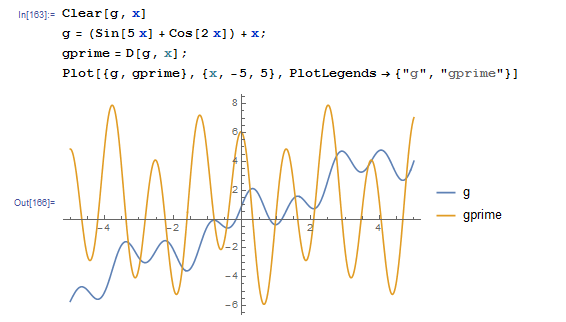

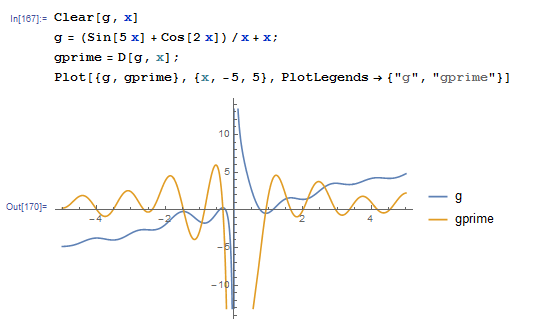

We can now try to understand why, in the previous example, the expression ![]() does not converge. When we plot

does not converge. When we plot ![]() and

and ![]() we see that

we see that ![]() oscillates rapidly with values higher than 1:

oscillates rapidly with values higher than 1:

On the other hand, the expression ![]() converges for roots that are away from zero. When we plot

converges for roots that are away from zero. When we plot ![]() and

and ![]() we see that the oscillations in

we see that the oscillations in ![]() decrease when

decrease when ![]() is away from zero and

is away from zero and ![]() is bounded by 1 in some regions:

is bounded by 1 in some regions:

Example

In this example, we will visualize the example of finding the root of the expression ![]() . There are three different forms for the fixed-point iteration scheme:

. There are three different forms for the fixed-point iteration scheme:

![Rendered by QuickLaTeX.com \[\begin{split} x&=g_1(x)=\frac{10}{x^3-1}\\ x&=g_2(x)=(x+10)^{1/4}\\ x&=g_3(x)=\frac{(x+10)^{1/2}}{x} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-f0ad81effc087eae88fc0e17dd551ffa_l3.png)

To visualize the convergence, notice that if we plot the separate graphs of the function ![]() and the function

and the function ![]() , then, the root is the point of intersection when

, then, the root is the point of intersection when ![]() . For

. For ![]() , the slope

, the slope ![]() is not bounded by 1 and so, the scheme diverges no matter what

is not bounded by 1 and so, the scheme diverges no matter what ![]() is. Here we start with

is. Here we start with ![]() :

:

For ![]() , the slope

, the slope ![]() is bounded by 1 and so, the scheme converges really fast no matter what

is bounded by 1 and so, the scheme converges really fast no matter what ![]() is. Here we start with

is. Here we start with ![]() :

:

For ![]() , the slope

, the slope ![]() is bounded by 1 and so, the scheme converges but slowly. Here we start with

is bounded by 1 and so, the scheme converges but slowly. Here we start with ![]() :

:

The following is the Mathematica code used to generate one of the tools above:

View Mathematica Code

Manipulate[

x = {5.};

er = {1};

es = 0.001;

MaxIter = 20;

i = 1;

g[x_] := (x + 10)^(1/4);

While[And[i <= MaxIter, Abs[er[[i]]] > es],xnew = g[x[[i]]]; x = Append[x, xnew]; ernew = (xnew - x[[i]])/xnew;er = Append[er, ernew]; i++];

T1 = Length[x];

SolutionTable = Table[{i - 1, x[[i]], er[[i]]}, {i, 1, T1}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

LineTable1 = Table[{{T[[i, 2]], T[[i, 2]]}, {T[[i, 2]], g[T[[i, 2]]]}}, {i, 2, n}];

LineTable2 = Table[{{T[[i + 1, 2]], T[[i + 1, 2]]}, {T[[i, 2]], g[T[[i, 2]]]}}, {i, 2, n - 1}];

Grid[{{Plot[{x, g[x]}, {x, 0, 5}, PlotLegends -> {"x", "g3(x)"},ImageSize -> Medium, Epilog -> {Dashed, Line[LineTable1], Line[LineTable2]}]}, {Row[{"Iteration=", n - 2, " x_n=", T[[n, 2]], " g(x_n)=", g[T[[n, 2]]]}]}}], {n, 2, Length[T], 1}]

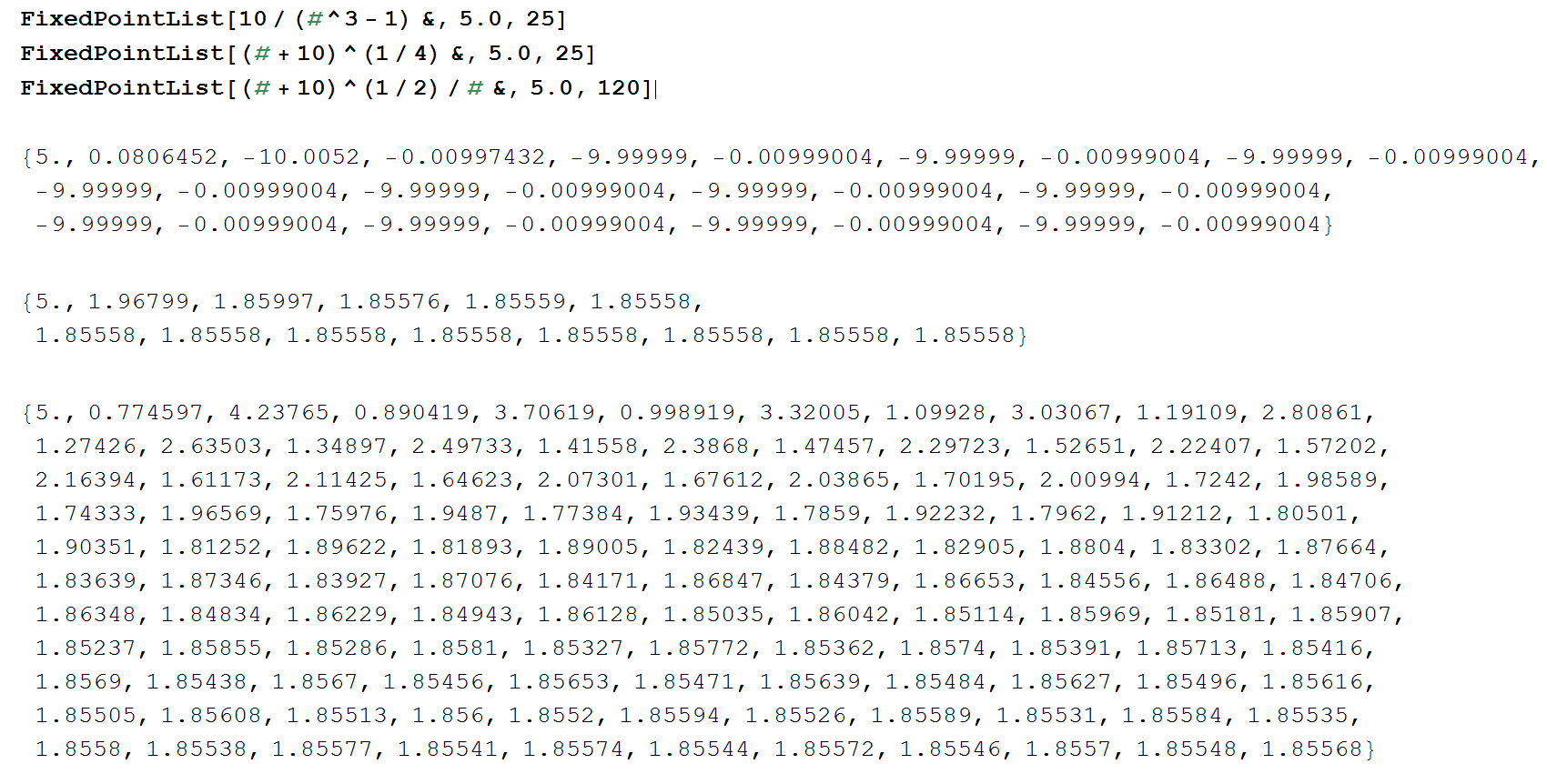

The following shows the output if we use the built-in fixed-point iteration function for each of ![]() ,

, ![]() , and

, and ![]() .

. ![]() oscillates and so, it will never converge.

oscillates and so, it will never converge. ![]() converges really fast (3 to 4 iterations).

converges really fast (3 to 4 iterations). ![]() converges really slow, taking up to 120 iterations to converge.

converges really slow, taking up to 120 iterations to converge.

Newton-Raphson Method

The Newton-Raphson method is one of the most used methods of all root-finding methods. The reason for its success is that it converges very fast in most cases. In addition, it can be extended quite easily to multi-variable equations. To find the root of the equation ![]() , the Newton-Raphson method depends on the Taylor Series Expansion of the function around the estimate

, the Newton-Raphson method depends on the Taylor Series Expansion of the function around the estimate ![]() to find a better estimate

to find a better estimate ![]() :

:

![]()

where ![]() is the estimate of the root after iteration

is the estimate of the root after iteration ![]() and

and ![]() is the estimate at iteration

is the estimate at iteration ![]() . Assuming

. Assuming ![]() and rearranging:

and rearranging:

![]()

The procedure is as follows. Setting an initial guess ![]() , tolerance

, tolerance ![]() , and maximum number of iterations

, and maximum number of iterations ![]() :

:

At iteration ![]() , calculate

, calculate ![]() and

and ![]() . If

. If ![]() or if

or if ![]() , stop the procedure. Otherwise repeat.

, stop the procedure. Otherwise repeat.

Note: unlike the previous methods, the Newton-Raphson method relies on calculating the first derivative of the function ![]() . This makes the procedure very fast, however, it has two disadvantages. The first is that this procedure doesn’t work if the function

. This makes the procedure very fast, however, it has two disadvantages. The first is that this procedure doesn’t work if the function ![]() is not differentiable. Second, the inverse

is not differentiable. Second, the inverse ![]() can be slow to calculate when dealing with multi-variable equations.

can be slow to calculate when dealing with multi-variable equations.

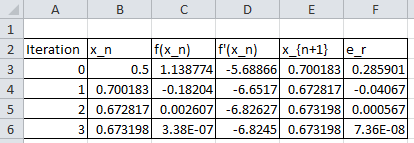

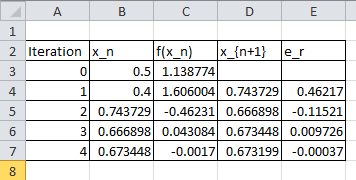

Example

As an example, let’s consider the function ![]() . The derivative of

. The derivative of ![]() is

is ![]() . Setting the maximum number of iterations

. Setting the maximum number of iterations ![]() ,

, ![]() ,

, ![]() , the following is the Microsoft Excel table produced:

, the following is the Microsoft Excel table produced:

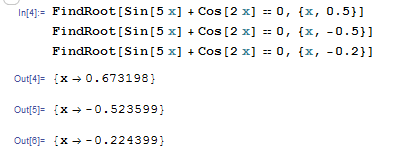

Mathematica has a built-in algorithm for the Newton-Raphson method. The function “FindRoot[lhs==rhs,{x,x0}]” applies the Newton-Raphson method with the initial guess being “x0”. The following is a screenshot of the input and output of the built-in function evaluating the roots based on three initial guesses.

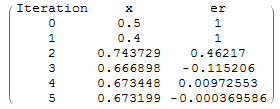

Alternatively, a Mathematica code can be written to implement the Newton-Raphson method with the following output for three different initial guesses:

View Mathematica Code

Clear[x]

f[x_] := Sin[5 x] + Cos[2 x]

fp = D[f[x], x]

xtable = {-0.25};

er = {1};

es = 0.0005;

MaxIter = 100;

i = 1;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = xtable[[i]] - (f[xtable[[i]]]/fp /. x -> xtable[[i]]); xtable = Append[xtable, xnew]; ernew = (xnew - xtable[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[xtable];

SolutionTable = Table[{i - 1, xtable[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

The following MATLAB code runs the Newton-Raphson method to find the root of a function ![]() with derivative

with derivative ![]() and initial guess

and initial guess ![]() . The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. You need to pass the function f(x) and its derivative df(x) to the newton-raphson method along with the initial guess as newtonraphson(@(x) f(x), @(x) df(x), x0). For example, try newtonraphson(@(x) sin(5.*x)+cos(2.*x), @(x) 5.*cos(5.*x)-2.*sin(2.*x),0.4)

. The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. You need to pass the function f(x) and its derivative df(x) to the newton-raphson method along with the initial guess as newtonraphson(@(x) f(x), @(x) df(x), x0). For example, try newtonraphson(@(x) sin(5.*x)+cos(2.*x), @(x) 5.*cos(5.*x)-2.*sin(2.*x),0.4)

Analysis of Convergence of the Newton-Raphson Method

The error in the Newton-Raphson Method can be roughly estimated as follows. The estimate ![]() is related to the previous estimate

is related to the previous estimate ![]() using the equation:

using the equation:

![]()

Additionally, using Taylor’s theorem, and if ![]() is the true root with

is the true root with ![]() we have:

we have:

![]()

for some ![]() in the interval between

in the interval between ![]() and

and ![]() . Subtracting the above two equations yields:

. Subtracting the above two equations yields:

![]()

(1) ![]()

If the method is converging, we have ![]() and

and ![]() therefore:

therefore:

![]()

Therefore, the error is squared after each iteration, i.e., the number of correct decimal places approximately doubles with each iteration. This behaviour is called quadratic convergence. Look at the tables in the Newton-Raphson example above and compare the relative error after each step!

The following tool can be used to visualize how the Newton-Raphson method works. Using the slope ![]() , the difference

, the difference ![]() can be calculated and thus, the value of the new estimate

can be calculated and thus, the value of the new estimate ![]() can be computed accordingly. Use the slider to see how fast the method converges to the true solution using

can be computed accordingly. Use the slider to see how fast the method converges to the true solution using ![]() ,

, ![]() , and solving for the root of

, and solving for the root of ![]() .

.

Manipulate[

f[x_] := Sin[5 x] + Cos[2 x];

fp = D[f[x], x];

xtable = {0.4};

er = {1};

es = 0.0005;

MaxIter = 100;

i = 1;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = xtable[[i]] - (f[xtable[[i]]]/fp /. x -> xtable[[i]]); xtable = Append[xtable, xnew]; ernew = (xnew - xtable[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[xtable];

SolutionTable = Table[{i - 1, xtable[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

LineTable1 = Table[{{T[[i, 2]], 0}, {T[[i, 2]], f[T[[i, 2]]]}, {T[[i + 1, 2]],0}}, {i, 2, n - 1}];

Grid[{{Plot[f[x], {x, 0, 1}, PlotLegends -> {"f(x)"}, ImageSize -> Medium, Epilog -> {Dashed, Line[LineTable1]}]}, {Row[{"Iteration=", n - 2, " x_n=", T[[n, 2]], " f(x_n)=", f[T[[n, 2]]]}]}}], {n, 2, 7, 1}]

Depending on the shape of the function and the initial guess, the Newton-Raphson method can get stuck around the locations of oscillations of the function. The tool below visualizes the algorithm when trying to find the root of ![]() with an initial guess of

with an initial guess of ![]() . It takes 33 iterations before reaching convergence.

. It takes 33 iterations before reaching convergence.

Depending on the shape of the function and the initial guess, the Newton-Raphson method can get stuck in a loop. The tool below visualizes the algorithm when trying to find the root of ![]() with an initial guess of

with an initial guess of ![]() . The algorithm goes into an infinite loop.

. The algorithm goes into an infinite loop.

In general, an initial guess that is close enough to the true root will guarantee quick convergence. The tool below visualizes the algorithm when trying to find the root of ![]() with an initial guess of

with an initial guess of ![]() . The algorithm quickly converges to the desired root.

. The algorithm quickly converges to the desired root.

The following is the Mathematica code used to generate one of the tools above:

View Mathematica Code

Manipulate[

f[x_] := x^3 - x + 3;

fp = D[f[x], x];

xtable = {-0.1};

er = {1.};

es = 0.0005;

MaxIter = 100;

i = 1;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = xtable[[i]] - (f[xtable[[i]]]/fp /. x -> xtable[[i]]); xtable = Append[xtable, xnew]; ernew = (xnew - xtable[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[xtable];

SolutionTable = Table[{i - 1, xtable[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

LineTable1 = Table[{{T[[i, 2]], 0}, {T[[i, 2]], f[T[[i, 2]]]}, {T[[i + 1, 2]], 0}}, {i, 2, n - 1}];

Grid[{{Plot[f[x], {x, -3, 2}, PlotLegends -> {"f(x)"}, ImageSize -> Medium, Epilog -> {Dashed, Line[LineTable1]}]}, {Row[{"Iteration=", n - 2, " x_n=", T[[n, 2]], " f(x_n)=", f[T[[n, 2]]]}]}}] , {n, 2, 33, 1}]

Secant Method

The secant method is an alternative to the Newton-Raphson method by replacing the derivative ![]() with its finite-difference approximation. The secant method thus does not require the use of derivatives especially when

with its finite-difference approximation. The secant method thus does not require the use of derivatives especially when ![]() is not explicitly defined. In certain situations, the secant method is preferable over the Newton-Raphson method even though its rate of convergence is slightly less than that of the Newton-Raphson method. Consider the problem of finding the root of the function

is not explicitly defined. In certain situations, the secant method is preferable over the Newton-Raphson method even though its rate of convergence is slightly less than that of the Newton-Raphson method. Consider the problem of finding the root of the function ![]() . Starting with the Newton-Raphson equation and utilizing the following approximation for the derivative

. Starting with the Newton-Raphson equation and utilizing the following approximation for the derivative ![]() :

:

![]()

the estimate for iteration ![]() can be computed as:

can be computed as:

![]()

Obviously, the secant method requires two initial guesses ![]() and

and ![]() .

.

Example

As an example, let’s consider the function ![]() . Setting the maximum number of iterations

. Setting the maximum number of iterations ![]() ,

, ![]() ,

, ![]() , and

, and ![]() , the following is the Microsoft Excel table produced:

, the following is the Microsoft Excel table produced:

The Mathematica code below can be used to program the secant method with the following output:

f[x_] := Sin[5 x] + Cos[2 x];

xtable = {0.5, 0.4};

er = {1,1};

es = 0.0005;

MaxIter = 100;

i = 2;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = xtable[[i]] - (f[xtable[[i]]]) (xtable[[i]] - xtable[[i - 1]])/(f[xtable[[i]]] -f[xtable[[i - 1]]]); xtable = Append[xtable, xnew]; ernew = (xnew - xtable[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[xtable];

SolutionTable = Table[{i - 1, xtable[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm

The following code runs the Secant method to find the root of a function ![]() with two initial guesses

with two initial guesses ![]() and

and ![]() . The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. The program waits for a keypress between each iteration to allow you to visualize the iterations in the figure. Call the function with secant(@(x) f(x), x0, x1). For example try secant(@(x) sin(5.*x)+cos(2.*x),0.5,0.4)

. The value of the estimate and approximate relative error at each iteration is displayed in the command window. Additionally, two plots are produced to visualize how the iterations and the errors progress. The program waits for a keypress between each iteration to allow you to visualize the iterations in the figure. Call the function with secant(@(x) f(x), x0, x1). For example try secant(@(x) sin(5.*x)+cos(2.*x),0.5,0.4)

Convergence Analysis of the Secant Method

The ![]() estimate in the secant method is obtained as follows:

estimate in the secant method is obtained as follows:

![]()

Multiplying both sides by -1 and adding the true value of the root ![]() where

where ![]() for both sides yields:

for both sides yields:

![]()

Using algebraic manipulations:

![Rendered by QuickLaTeX.com \[ \begin{split} x_t-x_{i+1}&=\frac{(x_t-x_i)(f(x_i)-f(x_{i-1}))+f(x_i)(x_i-x_{i-1})}{f(x_i)-f(x_{i-1})}\\ &=\frac{f(x_i)(x_t-x_{i-1})-f(x_{i-1})(x_t-x_i)}{f(x_i)-f(x_{i-1})}\\ &=(x_t-x_{i-1})(x_t-x_i)\frac{\frac{f(x_i)}{x_t-x_i}-\frac{f(x_{i-1})}{x_t-x_{i-1}}}{f(x_i)-f(x_{i-1})} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-e9e56f81563b88b0e12826549f008699_l3.png)

Using the Mean Value Theorem, the denominator on the right-hand side can be replaced with:

![]()

for some ![]() between

between ![]() and

and ![]() . Therefore,

. Therefore,

![]()

Using Taylor’s theorem for ![]() and

and ![]() around

around ![]() we get:

we get:

![Rendered by QuickLaTeX.com \[\begin{split} \frac{f(x_i)}{x_t-x_i}=f'(x_t)+\frac{f''(\xi_1)}{2}{(x_t-x_i)}\\ \frac{f(x_i)}{x_t-x_{i-1}}=f'(x_t)+\frac{f''(\xi_2)}{2}{(x_t-x_{i-1})} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-6775b5eb8381df36f43839b13b044c66_l3.png)

for some ![]() between

between ![]() and

and ![]() and some

and some ![]() between

between ![]() and

and ![]() . Using the above expressions we can reach the equation:

. Using the above expressions we can reach the equation:

![]()

![]() and

and ![]() can be assumed to be identical and equal to

can be assumed to be identical and equal to ![]() , therefore:

, therefore:

![Rendered by QuickLaTeX.com \[\begin{split} x_t-x_{i+1}&=(x_t-x_{i-1})(x_t-x_i)\frac{\frac{f''(\xi)}{2}{(x_t-x_i-x_t+x_{i-1})}}{f'(\zeta)(x_i-x_{i-1})}\\ &=(x_t-x_{i-1})(x_t-x_i)\frac{-f''(\xi)}{2f'(\zeta)} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-83b9edce2f74284e04719ee652fa6041_l3.png)

(2) ![]()

Comparing 1 with 2 shows that the convergence in the secant method is not quite quadratic. To find the order of convergence, we need to solve the following equation for a positive ![]() and

and ![]() :

:

![]()

Substituting into equation 2 yields:

![]()

But we also have:

![]()

Therefore: ![]() . This equation is called the golden ratio and has the positive solution for

. This equation is called the golden ratio and has the positive solution for ![]() :

:

![]()

while

![]()

implying that the error convergence is not quadratic but rather:

![]()

The following tool visualizes how the secant method converges to the true solution using two initial guesses. Using ![]() ,

, ![]() ,

, ![]() , and solving for the root of

, and solving for the root of ![]() yields

yields ![]() .

.

The following Mathematica Code was utilized to produce the above tool:

View Mathematica Code

Manipulate[

f[x_] := x^3 - x + 3;

f[x_] := Sin[5 x] + Cos[2 x];

xtable = {-0.5, -0.6};

xtable = {0.35, 0.4};

er = {1, 1};

es = 0.0005;

MaxIter = 100;

i = 2;

While[And[i <= MaxIter, Abs[er[[i]]] > es], xnew = xtable[[i]] - (f[xtable[[i]]]) (xtable[[i]] - xtable[[i-1]])/(f[xtable[[i]]] -f[xtable[[i - 1]]]); xtable = Append[xtable, xnew]; ernew = (xnew - xtable[[i]])/xnew; er = Append[er, ernew]; i++];

T = Length[xtable];

SolutionTable = Table[{i - 1, xtable[[i]], er[[i]]}, {i, 1, T}];

SolutionTable1 = {"Iteration", "x", "er"};

T = Prepend[SolutionTable, SolutionTable1];

T // MatrixForm;

If[n > 3,

(LineTable1 = {{T[[n - 2, 2]], 0}, {T[[n - 2, 2]], f[T[[n - 2, 2]]]}, {T[[n - 1, 2]], f[T[[n - 1, 2]]]}, {T[[n, 2]], 0}};

LineTable2 = {{T[[n - 1, 2]], 0}, {T[[n - 1, 2]], f[T[[n - 1, 2]]]}}), LineTable1 = {{}}; LineTable2 = {{}}];

Grid[{{Plot[f[x], {x, 0, 1}, PlotLegends -> {"f(x)"}, ImageSize -> Medium, Epilog -> {Dashed, Line[LineTable1], Line[LineTable2]}]}, {Row[{"Iteration=", n - 2, " x_n=", T[[n, 2]], " f(x_n)=", f[T[[n, 2]]]}]}}], {n, 2, 8, 1}]

Problems

- Let

![Rendered by QuickLaTeX.com f:[-5,5]\rightarrow \mathbb{R}](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-973fb8d83043d95cb49ed34364c43946_l3.png) be such that

be such that  . Use the graphical method to find the number and estimates of the roots of each of the equations

. Use the graphical method to find the number and estimates of the roots of each of the equations  and

and  .

. - Compare the bisection method and the false position method in finding the roots of the two equations

and

and  in the interval

in the interval ![Rendered by QuickLaTeX.com [-1,1]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-4a7430a5619e08a23c430423c1376967_l3.png) where

where  is:

is:

![Rendered by QuickLaTeX.com \[ f(x)=2x^3-4x^2+3x \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-c76ad1b9a66895a53e90bb9cf6b91e6f_l3.png)

Compare with the result using the Solve function and comment on the use of

as a measure of the relative error.

as a measure of the relative error. - Locate the first nontrivial root of

where

where  is in radians. Use the graphical method and the bisection method with

is in radians. Use the graphical method and the bisection method with  .

. - Water is flowing in a trapezoidal channel at a rate of

. The critical depth

. The critical depth  for such a channel must satisfy the equation:

for such a channel must satisfy the equation:

![Rendered by QuickLaTeX.com \[ 1-\frac{Q^2}{g A_c^3}B=0 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-eeabb71db66495ea6152fa6e402c89b7_l3.png)

where

,

,  is the cross sectional area (

is the cross sectional area ( ), and

), and  is the width of the channel at the surface (

is the width of the channel at the surface ( ). Assuming that the width and the cross‐sectional area can be related to the depth

). Assuming that the width and the cross‐sectional area can be related to the depth  by:

by:![Rendered by QuickLaTeX.com \[\begin{split} B&=3+y\\ A_c&=3y+\frac{y^2}{2} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-d34ef9428c58f24dcb1ff3dc3a9e1362_l3.png)

Solve for the critical depth using the graphical method, the bisection method, and the false position method to find the critical depth in the interval

![Rendered by QuickLaTeX.com [0,3]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-6abe4233622b81d2a4a66682455d476b_l3.png) . Use

. Use  . Discuss your results (difference in the methods, accuracy, number of iterations, etc.).

. Discuss your results (difference in the methods, accuracy, number of iterations, etc.).(Note: The critical depth is the depth below which the flow of the fluid becomes relatively fast and affected by the upstream conditions (supercritical flow). Above that depth, the flow is subcritical, relatively slow and is controlled by the downstream conditions)

- Use the graphical method to find all real roots of the equation

![Rendered by QuickLaTeX.com \[ f(x)=-14-20x+19x^2-3x^3 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-b57f446d63fbe067443facbf08f986c7_l3.png)

You are required to

- Plot the function several times by using smaller ranges for the

axis to obtain the roots up to 3 significant digits.

axis to obtain the roots up to 3 significant digits. - Explain the steps you have taken to identify the number and estimates of the roots.

- Explain the steps you have taken to obtain the roots to the required accuracy.

- Compare your results to the solution obtained using the “Solve” function in Mathematica.

- Plot the function several times by using smaller ranges for the

- Use the bisection method and the false position method to find all real roots of the equation

![Rendered by QuickLaTeX.com \[ f(x)=-14-20x+19x^2-3x^3 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-b57f446d63fbe067443facbf08f986c7_l3.png)

Use

.

. - Consider the function:

![Rendered by QuickLaTeX.com \[ f(x)= -0.9 x^2+1.7x+2.5 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-16e0d3edff8681c02c4457ca62342049_l3.png)

Determine a root for the expression

- using fixed-point iteration

- using the Newton-Raphson method

Use an initial guess of

and

and  . Compare your final answer with the Solve function in Mathematica.

. Compare your final answer with the Solve function in Mathematica. - Consider the function:

![Rendered by QuickLaTeX.com \[ f(x)=7\sin{(x)} e^{-x}-1 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-bb9950dec77cd3b8db689b8a5666b1ab_l3.png)

Find the lowest positive root for the expression

- graphically

- using the Newton-Raphson method

- using the secant method

- Use the Newton-Raphson method to find the root of:

![Rendered by QuickLaTeX.com \[ f(x)=e^{-0.5x}(4-x)-2=0 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-153363f520cae79fff15038f56dbefa1_l3.png)

Employ initial guesses of 2, 6, and 8. Explain your results.

- The Manning equation can be written for a rectangular open channel as:

![Rendered by QuickLaTeX.com \[ Q=\frac{\sqrt{S}(BH)^\frac{5}{3}}{n(B+2H)^{\frac{2}{3}}} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-b3932f346be59b2c4a726230af37f238_l3.png)

where

is the fluid flow in

is the fluid flow in  ,

,  is the slope in

is the slope in  ,

,  is the depth in

is the depth in  , and

, and  is the Manning roughness coefficient. Develop a fixed-point iteration scheme to solve this equation for

is the Manning roughness coefficient. Develop a fixed-point iteration scheme to solve this equation for  given

given  ,

,  ,

,  , and

, and  . Consider

. Consider  . Prove that your scheme converges for all initial guesses greater than or equal to zero.

. Prove that your scheme converges for all initial guesses greater than or equal to zero. - Why does the Babylonian method almost always converge?

- Consider the function

![Rendered by QuickLaTeX.com \[ f(x)=e^{-x}-x \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-b6a4b3a68bcf3bfdd2347e0b00ef5df8_l3.png)

Compare the secant method and the Newton-Raphson method in finding the root of the equation

.

. - Solve the previous question using the Fixed-Point Iteration method

- Use the Fixed-Point Iteration method to find the roots of the following functions:

![Rendered by QuickLaTeX.com \[ f_1(x)=1-\frac{x^2}{4} \quad f_2(x)=2\sqrt{x-1}-x \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-e9ef3508a7c9601303c2f4b7118dd5e7_l3.png)