Displacement and Strain: The Deformation Gradient

Definitions:

For a general 3D deformation of an object, local strains can be measured by comparing the “length” between two neighbouring points before and after deformation. Thus, we are interested in tracking lines or curves in the reference and deformed configurations. The restrictions on the possible position functions $x$ (especially being differentiable) allow such comparisons and calculations as follows:

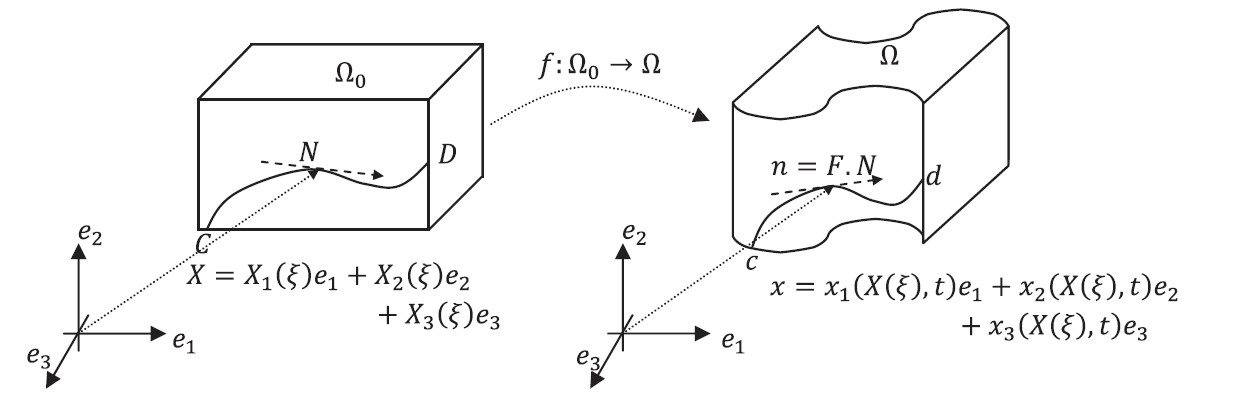

We first assume a material curve inside the reference and deformed configurations by assuming a parameter $\xi$ that defines the curve (Figure 1). The positions in the reference and deformed configurations are given by $X$ and $x$ respectively, where $t$ refers to time:

![Rendered by QuickLaTeX.com \[ X= \left(\begin{array}{c} X_1(\xi)\\ X_2(\xi)\\X_3(\xi) \end{array}\right)\qquad x= \left(\begin{array}{c} x_1(\xi,t)\\ x_2(\xi,t)\\x_3(\xi,t) \end{array}\right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-1b3ba6e6be89626c7356bc6879883c47_l3.png)

The tangents to the material curves at a point given by a particular value of the parameter $\xi$ in the reference and deformed configurations are denoted $N$ and $n$ respectively (Figure 1) and are given by:

![Rendered by QuickLaTeX.com \[ N=\frac{\partial X}{\partial \xi}= \left(\begin{array}{c} \frac{\partial X_1}{\partial \xi}\\ \frac{\partial X_2}{\partial \xi}\\\frac{\partial X_3}{\partial \xi} \end{array}\right)\qquad n=\frac{\partial x}{\partial \xi}=\frac{\partial x}{\partial X}\frac{\partial X}{\partial \xi}= \left(\begin{array}{ccc} \frac{\partial x_1}{\partial X_1} & \frac{\partial x_1}{\partial X_2} & \frac{\partial x_1}{\partial X_3}\\ \frac{\partial x_2}{\partial X_1} & \frac{\partial x_2}{\partial X_2} & \frac{\partial x_2}{\partial X_3}\\ \frac{\partial x_3}{\partial X_1} & \frac{\partial x_3}{\partial X_2} & \frac{\partial x_3}{\partial X_3} \end{array}\right) \left(\begin{array}{c} \frac{\partial X_1}{\partial \xi}\\ \frac{\partial X_2}{\partial \xi}\\\frac{\partial X_3}{\partial \xi} \end{array}\right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-17891048c24bbae3d932859d8825afef_l3.png)

The matrix $F$:

![Rendered by QuickLaTeX.com \[ F=\left(\begin{array}{ccc} \frac{\partial x_1}{\partial X_1} & \frac{\partial x_1}{\partial X_2} & \frac{\partial x_1}{\partial X_3}\\ \frac{\partial x_2}{\partial X_1} & \frac{\partial x_2}{\partial X_2} & \frac{\partial x_2}{\partial X_3}\\ \frac{\partial x_3}{\partial X_1} & \frac{\partial x_3}{\partial X_2} & \frac{\partial x_3}{\partial X_3} \end{array}\right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-abc4f0bd603602a27f326017985ec020_l3.png)

is denoted the “Deformation Gradient” and contains all the required local information about the changes in length, volumes and angles due to the deformation as follows:

- A tangent vector $N$ in the reference configuration is deformed into the tangent vector $n$. The two vectors are related using the deformation gradient tensor $F$:

![Rendered by QuickLaTeX.com \[ n = FN \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-186f3b7b451b2889fba3378b735d1512_l3.png)

- The ratio of the local volume in the deformed configuration to the local volume in the reference configuration is equal to the determinant of $F$.

- If an infinitesimal area vector is termed $(da)n$ and $(dA)N$ in the deformed and reference configurations respectively, with $da$ and $dA$ in $\mathbb{R}$ being the magnitudes of the areas while $n$ and $N$ are the unit vectors perpendicular to the corresponding areas, then using Nanson’s formula shown in the section on the determinant of matrices, the relationship between them is given by:

![Rendered by QuickLaTeX.com \[ (da) n = \det(F)(dA)F^{-T}N \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-1e0699f4c0ada8419ec0f39d1088f320_l3.png)

- An isochoric deformation is a deformation preserving local volume, i.e., $\det F = 1$ at every point.

- A deformation is called homogeneous if $F$ is constant at every point, i.e., $F$ is not a function of position. Otherwise, the deformation is called non-homogeneous.

- The physical restrictions of possible deformations force $\det F$ to be always positive. (why?)
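The relations listed above can be checked numerically for a homogeneous deformation. The following is a minimal sketch in plain Python; the matrix `F`, the vectors `A`, `B`, `C` and all helper names are illustrative assumptions and do not come from the original text. It relies on the identity $\det(F)F^{-T} = \mathrm{cof}(F)$, so Nanson's formula can be evaluated without inverting $F$.

```python
def matvec(F, v):
    """Product of a 3x3 matrix (list of rows) with a 3-vector."""
    return [sum(F[i][j] * v[j] for j in range(3)) for i in range(3)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def det3(F):
    """Determinant as the triple product of the rows."""
    return dot(F[0], cross(F[1], F[2]))

def cofactor(F):
    """Cofactor matrix cof(F) = det(F) F^{-T}; row i is the cross
    product of the other two rows taken in cyclic order."""
    return [cross(F[1], F[2]), cross(F[2], F[0]), cross(F[0], F[1])]

# Hypothetical homogeneous deformation gradient (stretch plus shear).
F = [[1.2, 0.5, 0.0],
     [0.0, 0.9, 0.3],
     [0.0, 0.0, 1.1]]

# Three reference tangent vectors spanning a small parallelepiped.
A, B, C = [1.0, 0.0, 0.0], [1.0, 2.0, 0.0], [0.5, 0.0, 1.0]
a, b, c = matvec(F, A), matvec(F, B), matvec(F, C)   # n = F N

J = det3(F)                                   # local volume ratio
vol_ref = dot(cross(A, B), C)                 # reference volume
vol_def = dot(cross(a, b), c)                 # deformed volume

area_ref = cross(A, B)                        # (dA) N
area_def = cross(a, b)                        # (da) n, computed directly
area_nanson = matvec(cofactor(F), area_ref)   # det(F) F^{-T} (dA) N

print("det F =", J, " volume ratio =", vol_def / vol_ref)
print("deformed area vector:", area_def, " Nanson:", area_nanson)
```

The two printed volume measures should agree, as should the two area vectors, up to floating-point round-off. A positive `J` is consistent with the last item in the list.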

Figure 1. Tangents to material curves in the reference and deformed configurations. Two neighbouring points on the curve in the reference configuration correspond to two points on the curve in the deformed configuration.

The Polar Decomposition of the Deformation Gradient:

One of the general results of linear algebra is the Polar Decomposition of matrices, which states the following. Any matrix of real numbers $F$ can be decomposed into two matrices multiplied by each other, $F = QU$, such that $Q$ is an orthogonal matrix and $U$ is a positive semi-definite symmetric matrix. In particular, if $\det F > 0$, then we can find a rotation matrix $R$ and two positive-definite symmetric matrices $U$ and $V$ such that

\[ F = RU = VR \]

For continuum mechanics applications, $U$ and $V$ are termed the right and left stretch tensors respectively. The first equality is termed the “right” polar decomposition while the second is called the “left”. The proof of the above statement presented here is based on the two books:

- Ciarlet, P.G. (2004). Mathematical Elasticity. Volume 1: Three Dimensional Elasticity. Elsevier Ltd.

- Chadwick, P. (1999). Continuum Mechanics. Concise Theory and Problems. Dover Publications Inc.

and it will be presented using several statements.

Statement 1:

Let $F \in \mathbb{R}^{3\times 3}$ be such that $\det F > 0$. Then, the matrices $F^TF$ and $FF^T$ are positive definite symmetric matrices.

Proof:

The symmetry of $F^TF$ and $FF^T$ is straightforward as follows:

\[ (F^TF)^T = F^T(F^T)^T = F^TF \qquad (FF^T)^T = (F^T)^TF^T = FF^T \]

Also, since $\det F \neq 0$, therefore: $\forall a \neq 0$ (i.e., $\|a\| > 0$) we have $Fa \neq 0$. Therefore, $a \cdot F^TFa = Fa \cdot Fa = \|Fa\|^2 > 0$. Therefore, $F^TF$ is positive definite.

Similarly, it can be shown that $FF^T$ is positive definite.

$\blacksquare$

Statement 2:

Let $M \in \mathbb{R}^{3\times 3}$ be a positive definite symmetric matrix. Show that there exists a unique positive-definite symmetric square root of $M$ (denoted by $M^{1/2}$) such that $M = M^{1/2}M^{1/2}$.

Proof:

The existence of a square root is straightforward. As per the results in the symmetric tensors section, we can choose a coordinate system such that $M$ is diagonal with three positive real numbers $M_1$, $M_2$ and $M_3$ in the diagonal:

![Rendered by QuickLaTeX.com \[ M=\left(\begin{array}{ccc} M_1 & 0 & 0\\ 0 & M_2 & 0\\ 0 & 0 & M_3 \end{array} \right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-aaf9497241ab17bcbcf3c1ae2f621c27_l3.png)

By setting $\lambda_1 = \sqrt{M_1}$, $\lambda_2 = \sqrt{M_2}$ and $\lambda_3 = \sqrt{M_3}$, then:

![Rendered by QuickLaTeX.com \[ M^{1/2}=\left(\begin{array}{ccc} \lambda_1 & 0 & 0\\ 0 & \lambda_2 & 0\\ 0 & 0 & \lambda_3 \end{array} \right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-0a69f61c75dc05fbc94a0913482e36b1_l3.png)

and it is straightforward to show that $M = M^{1/2}M^{1/2}$ in any coordinate system.

The uniqueness of $M^{1/2}$ can be shown by contradiction, by assuming that there are two different positive definite square roots $S_1$ and $S_2$ such that

\[ M = S_1S_1 = S_2S_2 \]

$M$ is a positive-definite symmetric matrix with positive eigenvalues $M_1$, $M_2$ and $M_3$. Let $p_i$ be the eigenvector associated with $M_i$, therefore:

\[ S_1S_1p_i = M_ip_i \quad\Rightarrow\quad \left(S_1 + \sqrt{M_i}\,I\right)\left(S_1 - \sqrt{M_i}\,I\right)p_i = 0 \]

Since, by assumption, $S_1$ is positive definite, $\sqrt{M_i}$ is an eigenvalue of $S_1$ associated with the eigenvector $p_i$; otherwise, the nonzero vector $\left(S_1 - \sqrt{M_i}\,I\right)p_i$ would make $-\sqrt{M_i}$ an eigenvalue of $S_1$, which contradicts that it is positive definite. This applies to $S_2$ as well and therefore $S_1$ and $S_2$ are positive definite symmetric matrices that share the same eigenvalues and eigenvectors; therefore, they are identical (why?).

$\blacksquare$

Notice that a positive definite symmetric matrix can have various “square roots”; however, only one of them is also a positive definite symmetric matrix. For example, consider the matrix:

![Rendered by QuickLaTeX.com \[ M=\left(\begin{array}{ccc} 4 & 0 & 0\\ 0 & 4 & 0\\ 0 & 0 & 1 \end{array} \right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-28c953a29212a0ead0250d3561ea5871_l3.png)

The unique positive definite symmetric square root of $M$ is:

![Rendered by QuickLaTeX.com \[ M^{1/2}=\left(\begin{array}{ccc} 2 & 0 & 0\\ 0 & 2 & 0\\ 0 & 0 & 1 \end{array} \right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-1e070536437d1a3b75d3b8c9bee6702c_l3.png)

However, the following symmetric matrix $A$ is also a square root of $M$; nevertheless, it is NOT positive-definite:

![Rendered by QuickLaTeX.com \[ A=\sqrt{2}\left(\begin{array}{ccc} 1 & 1 & 0\\ 1 & -1 & 0\\ 0 & 0 & 1/\sqrt{2} \end{array} \right) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-0d495d0f7b9fdb9873769e0407d5fb1a_l3.png)

Verify that $AA = M$ and that $A$ is NOT positive-definite.
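The verification requested above can be sketched in a few lines of plain Python. The helper names `matmul` and `quad_form` and the test vector `e2` are illustrative choices, not part of the original text. The idea is that a single vector $v$ with $v \cdot Av < 0$ is enough to show that $A$ is not positive definite.

```python
import math

def matmul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def quad_form(A, v):
    """Quadratic form v . (A v)."""
    Av = [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
    return sum(x * y for x, y in zip(v, Av))

s = math.sqrt(2.0)
M = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 1.0]]
M_half = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
A = [[s, s, 0.0], [s, -s, 0.0], [0.0, 0.0, 1.0]]   # sqrt(2) * (...) written out

print(matmul(M_half, M_half))   # reproduces M
print(matmul(A, A))             # also reproduces M (up to round-off)

e2 = [0.0, 1.0, 0.0]
print(quad_form(A, e2))         # negative, so A is not positive definite
print(quad_form(M_half, e2))    # positive, as expected for M^(1/2)
```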

Statement 3:

Let $F \in \mathbb{R}^{3\times 3}$ be such that $\det F > 0$. Show that $F$ can be uniquely decomposed into:

\[ F = RU = VR \]

where $U$ and $V$ are positive-definite symmetric matrices while $R$ is a rotation matrix.

Proof:

From statements 1 and 2 above, $U = (F^TF)^{1/2}$ and $V = (FF^T)^{1/2}$ are unique positive definite symmetric matrices; therefore, they are invertible (why?). Let

\[ R = FU^{-1} \qquad \tilde{R} = V^{-1}F \]

Both $R$ and $\tilde{R}$ are invertible (why?). In addition:

\[ R^TR = U^{-T}F^TFU^{-1} = U^{-1}UUU^{-1} = I \]

and

\[ \tilde{R}\tilde{R}^T = V^{-1}FF^TV^{-T} = V^{-1}VVV^{-1} = I \]

We also have $\det F > 0$ and $\det U > 0$, therefore $\det R = \det F / \det U > 0$. Therefore, $\det R = 1$. Similarly, $\det \tilde{R} = 1$. Therefore, both $R$ and $\tilde{R}$ are rotation matrices.

Since $U$ and $V$ are invertible and unique, $R$ and $\tilde{R}$ are also unique. We can show this by contradiction, by assuming that:

\[ F = R_1U = R_2U \qquad R_1 \neq R_2 \]

Therefore:

\[ (R_1 - R_2)U = 0 \quad\Rightarrow\quad R_1 - R_2 = 0 \]

where the last step follows from multiplying by $U^{-1}$ on the right, which contradicts $R_1 \neq R_2$. The same argument applies for $\tilde{R}$.

Finally, it is required to show that $\tilde{R} = R$; indeed:

\[ F = V\tilde{R} = \tilde{R}\left(\tilde{R}^TV\tilde{R}\right) \]

However, $\tilde{R}^TV\tilde{R}$ is a positive definite symmetric matrix (why?), and since the decomposition $F = RU$ is unique, therefore,

\[ \tilde{R} = R \qquad \tilde{R}^TV\tilde{R} = U \quad\Rightarrow\quad F = RU = VR \]

$\blacksquare$

Statement 4:

$U$ and $V$ share the same eigenvalues and their eigenvectors differ by the rotation $R$.

Proof:

Assuming that $\lambda$ is an eigenvalue of $U$ with the corresponding eigenvector $N$, then:

\[ UN = \lambda N \quad\Rightarrow\quad RUN = \lambda RN \quad\Rightarrow\quad V(RN) = \lambda (RN) \]

Therefore, $\lambda$ is an eigenvalue of $V$ while $RN$ is the associated eigenvector.

Similarly, if $\lambda$ is an eigenvalue of $V$ with the corresponding eigenvector $n$, then:

\[ Vn = \lambda n \quad\Rightarrow\quad R^TVn = \lambda R^Tn \quad\Rightarrow\quad U(R^Tn) = \lambda (R^Tn) \]

Therefore, $\lambda$ is an eigenvalue of $U$ while $R^Tn$ is the associated eigenvector.

Assuming that $\lambda_1$, $\lambda_2$ and $\lambda_3$ are the eigenvalues of $U$ and $V$, that $N_1$, $N_2$, and $N_3$ are the associated normalized eigenvectors of $U$, while $n_1$, $n_2$, and $n_3$ are the associated normalized eigenvectors of $V$, then $U$ and $V$ admit the representations (see the section about the representation of symmetric matrices):

\[ U = \sum_{i=1}^3 \lambda_i\, N_i \otimes N_i \]

\[ V = \sum_{i=1}^3 \lambda_i\, n_i \otimes n_i \]

and, for $i = 1, 2, 3$:

\[ n_i = RN_i \]

$\blacksquare$

Physical Interpretation:

The unique decomposition of the deformation gradient $F$ into a rotation and a stretch indicates that any smooth deformation can be decomposed at any point inside the continuum body into a unique stretch described by $U$ followed by a unique rotation described by $R$. For example, a circle representing the directions of all the vectors in $\mathbb{R}^2$ is deformed into an ellipse under the action of $F$, where $F$ is a $2 \times 2$ matrix with $\det F > 0$. The decomposition $F = RU$ is schematically shown by first stretching the circle into an ellipse whose principal axes are the eigenvectors of $U$, followed by a rotation of the ellipse through the matrix $R$. The decomposition $F = VR$ represents rotating the circle through the matrix $R$ and then stretching the circle into an ellipse whose principal axes are the eigenvectors of $V$. Notice that the eigenvectors of $V$ and the eigenvectors of $U$ differ by a mere rotation.

In the following tool, change the values of the four components of the matrix $F$. The code first ensures that $\det F > 0$. Once this condition is satisfied, the tool draws the two steps of the right polar decomposition in the first row and then the steps of the left polar decomposition in the second row. In the first image on the first row, the arrows indicate the eigenvectors of $U$. The arrows are shown to deform but keep their direction in the second image of the first row. Then, after applying the rotation, the arrows rotate in the third image of the first row. In the second image of the second row, the arrows are rotated with the matrix $R$ without any change in length. Then, they are deformed using the matrix $V$ in the third image of the second row.
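The construction used in the proof ($U = (F^TF)^{1/2}$, $V = (FF^T)^{1/2}$, $R = FU^{-1}$) can be sketched for the $2 \times 2$ case in plain Python. This is not the code behind the interactive tool; the function names and the example matrix are assumptions made for illustration. The square root is computed with a closed form that holds only for $2 \times 2$ positive definite symmetric matrices (it follows from the Cayley-Hamilton theorem), which replaces the eigenvector-based construction of statement 2.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def transpose(A):
    return [[A[j][i] for j in range(2)] for i in range(2)]

def det(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

def inverse(A):
    d = det(A)
    return [[A[1][1] / d, -A[0][1] / d], [-A[1][0] / d, A[0][0] / d]]

def sqrt_spd(C):
    """Positive definite square root of a 2x2 positive definite symmetric C:
    sqrt(C) = (C + sqrt(det C) I) / sqrt(tr C + 2 sqrt(det C))  (2D only)."""
    s = math.sqrt(det(C))
    t = math.sqrt(C[0][0] + C[1][1] + 2.0 * s)
    return [[(C[0][0] + s) / t, C[0][1] / t],
            [C[1][0] / t, (C[1][1] + s) / t]]

def polar(F):
    """Return (R, U, V) such that F = R U = V R, assuming det F > 0."""
    if det(F) <= 0:
        raise ValueError("det F must be positive")
    U = sqrt_spd(matmul(transpose(F), F))   # right stretch tensor
    V = sqrt_spd(matmul(F, transpose(F)))   # left stretch tensor
    R = matmul(F, inverse(U))               # rotation
    return R, U, V

F = [[2.0, 1.0], [0.0, 1.0]]                # hypothetical example
R, U, V = polar(F)
print("R =", R)
print("U =", U)
print("V =", V)
```

Multiplying `R` by `U` and `V` by `R` should both reproduce `F` up to round-off, which mirrors the two rows of images described above.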

The Singular-Value Decomposition of the Deformation Gradient:

One of the general results of linear algebra is the Singular-Value Decomposition of real or complex matrices. When the statement is applied to a matrix $F$ with $\det F > 0$, it states that $F$ can be decomposed as follows:

\[ F = PDQ^T \]

where $P$ and $Q$ are rotation matrices while the matrix $D$ is a diagonal matrix with positive diagonal entries. The singular-value decomposition follows immediately from the previous section on the polar decomposition of the deformation gradient. By setting $F = RU$ and realizing that $U$ is a positive definite symmetric matrix, then, using the spectral form of symmetric tensors, $U$ can be decomposed such that $U = QDQ^T$ where $D$ is a diagonal matrix whose diagonal entries are positive and $Q$ is a rotation matrix whose columns are the normalized eigenvectors of $U$ (the rows of $Q^T$ are the normalized eigenvectors of $U$). In particular, the diagonal entries of $D$ are the square roots of the eigenvalues of the positive definite symmetric matrix $F^TF$.

Therefore:

\[ F = RU = RQDQ^T \]

By setting $P = RQ$ we get the required result:

\[ F = PDQ^T \]

The following tool calculates the polar decomposition and the singular value decomposition of a matrix $F$. Enter the values for the components of $F$ and the tool calculates all the required matrices after checking that $\det F > 0$.
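As a rough illustration of the argument above (and not the implementation of the tool), the following plain-Python sketch builds $P$, $D$ and $Q$ for a $2 \times 2$ matrix. The function name `svd_2x2` and the example matrix are assumptions. The eigenvectors of $F^TF$ are obtained from a single rotation angle, which is a shortcut available only in two dimensions, and $P$ is computed as $FQD^{-1}$, which equals $RQ$ because $F = RQDQ^T$.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def transpose(A):
    return [[A[j][i] for j in range(2)] for i in range(2)]

def det(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

def svd_2x2(F):
    """Return (P, D, Q) with F = P D Q^T, assuming det F > 0 (2x2 only)."""
    if det(F) <= 0:
        raise ValueError("det F must be positive")
    C = matmul(transpose(F), F)                      # F^T F = U U
    # Rotation angle that diagonalises the symmetric matrix C.
    phi = 0.5 * math.atan2(2.0 * C[0][1], C[0][0] - C[1][1])
    c, s = math.cos(phi), math.sin(phi)
    Q = [[c, -s], [s, c]]                            # columns: eigenvectors of U
    L = matmul(transpose(Q), matmul(C, Q))           # diagonal, eigenvalues of F^T F
    lam = [math.sqrt(L[0][0]), math.sqrt(L[1][1])]   # eigenvalues of U
    D = [[lam[0], 0.0], [0.0, lam[1]]]
    D_inv = [[1.0 / lam[0], 0.0], [0.0, 1.0 / lam[1]]]
    P = matmul(F, matmul(Q, D_inv))                  # P = F Q D^{-1} = R Q
    return P, D, Q

F = [[2.0, 1.0], [0.0, 1.0]]                         # hypothetical example
P, D, Q = svd_2x2(F)
print("P =", P)
print("D =", D)
print("Q =", Q)
```

Both `P` and `Q` come out as rotations (orthogonal with determinant one), and the product `P D Q^T` should reproduce `F` up to round-off.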

The Right Cauchy-Green Deformation Tensor:

The tensor $C = F^TF$ is termed the right Cauchy-Green deformation tensor. As shown above, it is a positive definite symmetric matrix; thus, it has three positive real eigenvalues and three perpendicular eigenvectors. It also has a unique positive definite square root $U$ (see statement 2 above) such that $U$ has the same eigenvectors, while the eigenvalues of $U$ are the positive square roots of the eigenvalues of $C$. Denote $\lambda_1^2$, $\lambda_2^2$, and $\lambda_3^2$ as the eigenvalues of $C$ with the corresponding normalized eigenvectors $N_1$, $N_2$ and $N_3$, then $C$, $U$ and $F$ admit the representations (see the section about the representation of symmetric matrices):

\[ C = \sum_{i=1}^3 \lambda_i^2\, N_i \otimes N_i \]

\[ U = \sum_{i=1}^3 \lambda_i\, N_i \otimes N_i \]

\[ F = RU = \sum_{i=1}^3 \lambda_i\, (RN_i) \otimes N_i \]

It is worth noting that the last expression for $F$ is equivalent to the singular-value decomposition of $F$ described above. The singular-value decomposition, $F = PDQ^T$ where $Q$ is a rotation matrix whose columns are the eigenvectors of $U$, is more convenient for component calculations, while the last expression with the tensor product is much more useful for formula manipulation.

The Left Cauchy-Green Deformation Tensor:

The tensor $B = FF^T$ is termed the left Cauchy-Green deformation tensor. As shown above, it is a positive definite symmetric matrix; thus, it has three positive real eigenvalues and three perpendicular eigenvectors. It also has a unique positive definite square root $V$ (see statement 2 above) such that $V$ has the same eigenvectors, while the eigenvalues of $V$ are the positive square roots of the eigenvalues of $B$. From statement 2 and statement 4 above, the eigenvalues of $C = F^TF$ and $B$ are the same while the eigenvectors differ by the rotation $R$ (why?). Denote $\lambda_1^2$, $\lambda_2^2$, and $\lambda_3^2$ as the eigenvalues of $B$ with the corresponding normalized eigenvectors $n_1$, $n_2$ and $n_3$, then $B$, $V$ and $F$ admit the representations (see the section about the representation of symmetric matrices):

\[ B = \sum_{i=1}^3 \lambda_i^2\, n_i \otimes n_i \]

\[ V = \sum_{i=1}^3 \lambda_i\, n_i \otimes n_i \]

\[ F = VR = \sum_{i=1}^3 \lambda_i\, (n_i \otimes n_i)R \]

By utilizing the properties of the tensor product, and with $N_i = R^Tn_i$ denoting the eigenvectors of $U$, the following alternative representation of $F$ can be obtained:

\[ F = \sum_{i=1}^3 \lambda_i\, n_i \otimes (R^Tn_i) = \sum_{i=1}^3 \lambda_i\, n_i \otimes N_i \]

Also, since $N_1$, $N_2$, and $N_3$ are orthonormal, then $I = \sum_{i=1}^3 N_i \otimes N_i$. Therefore:

\[ R = RI = R\sum_{i=1}^3 N_i \otimes N_i \]

By utilizing the properties of the tensor product:

\[ R = \sum_{i=1}^3 (RN_i) \otimes N_i \]

Therefore:

\[ R = \sum_{i=1}^3 n_i \otimes N_i \]
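For reference, the tensor-product properties invoked in this section can be written out explicitly. The vectors $a$, $b$, $c$ and the matrix $A$ below are generic symbols introduced only for this sketch, with the usual definition $(a \otimes b)c = (b \cdot c)\,a$ assumed:

\[ A(a \otimes b) = (Aa) \otimes b \qquad (a \otimes b)A = a \otimes (A^Tb) \]

The second identity follows by applying both sides to an arbitrary vector $c$:

\[ \left[(a \otimes b)A\right]c = (b \cdot Ac)\,a = (A^Tb \cdot c)\,a = \left[a \otimes (A^Tb)\right]c \]

The first identity is obtained in the same way from $A\left[(a \otimes b)c\right] = (b \cdot c)\,Aa$.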