Introduction to Numerical Analysis: Taylor Series

Definitions

Let $f:\mathbb{R}\rightarrow\mathbb{R}$ be a smooth (infinitely differentiable) function, and let $a\in\mathbb{R}$. Then the Taylor series of the function $f$ around the point $a$ is given by:

\[ f(x)=f(a)+f'(a)(x-a)+\frac{f''(a)}{2!}(x-a)^2+\cdots+\frac{f^{(n)}(a)}{n!}(x-a)^n+\cdots=\sum_{n=0}^\infty \frac{f^{(n)}(a)}{n!}(x-a)^n \]

In particular, if $a=0$, then the expansion is known as the Maclaurin series and is given by:

\[ f(x)=f(0)+f'(0)x+\frac{f''(0)}{2!}x^2+\cdots+\frac{f^{(n)}(0)}{n!}x^n+\cdots=\sum_{n=0}^\infty \frac{f^{(n)}(0)}{n!}x^n \]

Taylor’s Theorem

Many of the numerical analysis methods rely on Taylor’s theorem. In this section, a few mathematical facts are presented (mostly without proof) which serve as the basis for Taylor’s theorem.

Extreme Values of Smooth Functions

Definition: Local Maximum and Local Minimum

Let $f:\mathbb{R}\rightarrow\mathbb{R}$. $f$ is said to have a local maximum at a point $a\in\mathbb{R}$ if there exists an open interval $I\subset\mathbb{R}$ such that $a\in I$ and $\forall x\in I: f(a)\geq f(x)$. On the other hand, $f$ is said to have a local minimum at a point $a\in\mathbb{R}$ if there exists an open interval $I\subset\mathbb{R}$ such that $a\in I$ and $\forall x\in I: f(a)\leq f(x)$. If $f$ has either a local maximum or a local minimum at $a$, then $f$ is said to have a local extremum at $a$.

Proposition 1

Let $f:\mathbb{R}\rightarrow\mathbb{R}$ be smooth (differentiable). Assume that $f$ has a local extremum (maximum or minimum) at a point $a\in\mathbb{R}$, then $f'(a)=0$.

This proposition simply means that if a smooth function attains a local maximum or minimum at a particular point, then the slope of the function is equal to zero at this point.

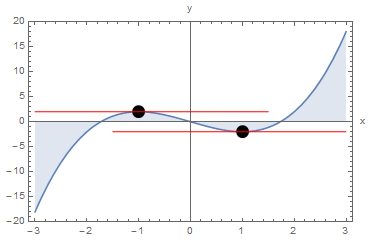

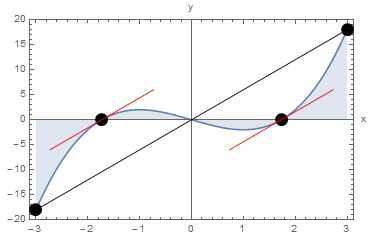

As an example, consider the function $f:\mathbb{R}\rightarrow\mathbb{R}$ with the relationship $f(x)=x^3-3x$. In this case, $f(-1)=2$ is a local maximum value for $f$ attained at $x=-1$ and $f(1)=-2$ is a local minimum value of $f$ attained at $x=1$. These local extrema values are associated with a zero slope for the function $f$ since

\[ f'(x)=3x^2-3 \]

$x=-1$ and $x=1$ are locations of local extrema and for both we have $f'(-1)=f'(1)=0$. The red lines in the next figure show the slope of the function $f$ at the extremum values.

View Mathematica Code that Generated the Above Figure

Clear[x]

y = x^3 - 3 x;

Plot[y, {x, -3, 3}, Epilog -> {PointSize[0.04], Point[{-1, 2}], Point[{1, -2}], Red, Line[{{-3, 2}, {1.5, 2}}], Line[{{3, -2}, {-1.5, -2}}]}, Filling -> Axis, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]

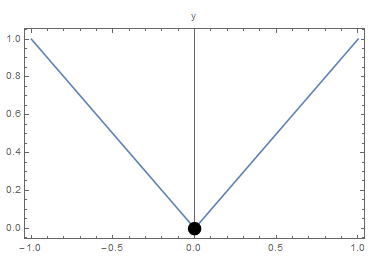

“Smoothness” or “Differentiability” is a very important requirement for the proposition to work. As an example, consider the function $f:\mathbb{R}\rightarrow\mathbb{R}$ defined as $f(x)=|x|$. The function $f$ has a local minimum at $x=0$; however, $f'(0)$ is not defined, since the slope as $x\rightarrow 0$ from the right is different from the slope as $x\rightarrow 0$ from the left, as shown in the next figure.

Clear[x]

y = Abs[x];

Plot[y, {x, -1, 1}, Epilog -> {PointSize[0.04], Point[{0, 0}]}, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]

Proposition 2

Let $f:\mathbb{R}\rightarrow\mathbb{R}$ be smooth (twice differentiable). Assume $a\in\mathbb{R}$ such that $f'(a)=0$. Then,

- If $f''(x)=0$ for every $x$ in an open interval around $a$, then $f$ is constant around $a$. If only $f''(a)=0$ is known, the test is inconclusive.
- If $f''(a)>0$, then $a$ is a local minimum.
- If $f''(a)<0$, then $a$ is a local maximum.

This proposition is very important for optimization problems when a local maximum or minimum is to be obtained for a particular function. In order to identify whether the solution corresponds to a local maximum or minimum, the second derivative of the function has to be evaluated. Considering the same example given above,

\[ f(x)=x^3-3x \Rightarrow f'(x)=3x^2-3 \Rightarrow f''(x)=6x \]

We have already identified $x=-1$ and $x=1$ as locations of the local extremum values. To know whether they are local maxima or local minima, we can evaluate the second derivative at these points. $f''(1)=6>0$. Therefore, $x=1$ is the location of a local minimum, while $f''(-1)=-6<0$, implying that $x=-1$ is the location of a local maximum.
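The second-derivative test described above can be sketched in a few lines of Mathematica. This is only an illustration for the same function $f(x)=x^3-3x$; the names `crit` and `classify` are hypothetical helpers and do not appear in the original text.

```mathematica
ClearAll[x, y, crit, classify]
y = x^3 - 3 x;
(* Critical points: the solutions of y'(x) == 0 *)
crit = x /. Solve[D[y, x] == 0, x];
(* Classify a critical point by the sign of the second derivative there *)
classify[x0_] := Module[{d2 = D[y, {x, 2}] /. x -> x0},
  Which[d2 > 0, "local minimum", d2 < 0, "local maximum", True, "inconclusive"]]
Table[{x0, classify[x0]}, {x0, crit}]
```

For this function the table should label $x=-1$ as a local maximum and $x=1$ as a local minimum, matching the hand calculation.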

Extreme Value Theorem

Statement: Let $f:[a,b]\rightarrow\mathbb{R}$ be continuous. Then, $f$ attains its maximum and its minimum value at some points $x_{\max}$ and $x_{\min}$ in the interval $[a,b]$.

The theorem simply states that if we have a continuous function on a closed interval $[a,b]$, then the image of $f$ contains a maximum value and a minimum value, both attained at points within the interval $[a,b]$. The theorem is very intuitive. However, the proof is highly technical and relies on the definitions of real numbers and on continuous functions. For now, we will just illustrate the meaning of the theorem using an example. Consider the function $f:[-1.5,1.5]\rightarrow\mathbb{R}$ defined as:

\[ f(x)=x^3-3x \]

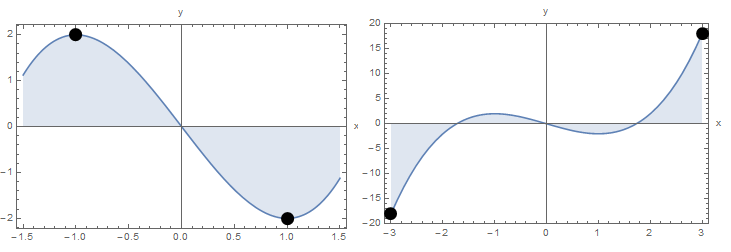

The theorem states that $f$ has to attain a maximum value and a minimum value at a point within the interval. In this case, $f(-1)=2$ is the maximum value of $f$ attained at $x=-1$ and $f(1)=-2$ is the minimum value of $f$ attained at $x=1$. Alternatively, if $f:[-3,3]\rightarrow\mathbb{R}$ with the same relationship as above, $f(-3)=-18$ is the minimum value of $f$ attained at $x=-3$ and $f(3)=18$ is the maximum value of $f$ attained at $x=3$.

The following figure shows the graph of the function on the specified intervals.

View Mathematica Code that Generated the Above Figures

Clear[x]

y = x^3 - 3 x;

Plot[y, {x, -1.5, 1.5}, Epilog -> {PointSize[0.04], Point[{-1, 2}], Point[{1, -2}]}, Filling -> Axis, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]

Plot[y, {x, -3, 3}, Epilog -> {PointSize[0.04], Point[{-3, y /. x -> -3}], Point[{3, y /. x -> 3}]}, Filling -> Axis, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]
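For a differentiable function, the maximum and minimum guaranteed by the Extreme Value Theorem can only occur at the endpoints or at interior points with zero slope. The following sketch uses that observation for the same cubic; the helper names `candidates` and `extremes` are illustrative assumptions, not part of the original code.

```mathematica
ClearAll[x, y, candidates, extremes]
y = x^3 - 3 x;
(* Candidate points on [lo, hi]: the endpoints plus interior critical points *)
candidates[lo_, hi_] := Join[{lo, hi},
  Select[x /. Solve[D[y, x] == 0, x], lo < # < hi &]]
(* Return {maximum value, minimum value} of y on [lo, hi] *)
extremes[lo_, hi_] := Module[{vals},
  vals = (y /. x -> #) & /@ candidates[lo, hi];
  {Max[vals], Min[vals]}]
extremes[-3/2, 3/2]
extremes[-3, 3]
```

On $[-1.5,1.5]$ the extreme values come from the interior critical points, while on $[-3,3]$ they come from the endpoints, as in the two plots above.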

Rolle’s Theorem

Statement: Let $f:[a,b]\rightarrow\mathbb{R}$ be differentiable. Assume that $f(a)=f(b)$, then there is at least one point $c\in(a,b)$ where $f'(c)=0$.

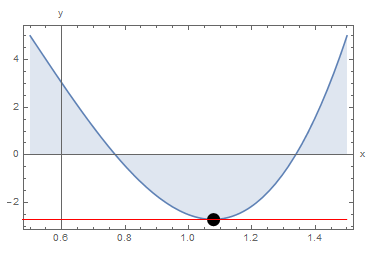

The proof of Rolle’s theorem is straightforward from Proposition 1 and the Extreme Value Theorem above. If $f$ is constant, its slope is zero everywhere. Otherwise, the Extreme Value Theorem ensures that $f$ attains a maximum and a minimum on the closed interval, and since $f(a)=f(b)$, at least one of them is attained at a point inside the interval, where it is a local extremum. Proposition 1 then ensures that at this local extremum, the slope of the function is equal to zero. As an example, consider the function $f:[0.5,1.5]\rightarrow\mathbb{R}$ defined as $f(x)=20(x-1/2)^3-20(x-1/2)+5$. $f(0.5)=f(1.5)=5$. This ensures that there is a point $c\in(0.5,1.5)$ with $f'(c)=0$. Indeed, $f'(1/2+1/\sqrt{3})=0$ and the point $c=1/2+1/\sqrt{3}$ is the location of the local minimum. The following figure shows the graph of the function on the specified interval along with the point $c$.

Clear[x]

y = 20 (x - 1/2)^3 - 20 (x - 1/2) + 5;

Expand[y]

y /. x -> 1.5

y /. x -> 0.5

y /. x -> (1/2 + 1/Sqrt[3])

D[y, x] /. x -> (1/2 + 1/Sqrt[3])

Plot[y, {x, 0.5, 1.5}, Epilog -> {PointSize[0.04], Point[{1/2 + 1/Sqrt[3], y /. x -> 1/2 + 1/Sqrt[3]}], Red, Line[{{-3, y /. x -> 1/2 + 1/Sqrt[3]}, {1.5, y /. x -> 1/2 + 1/Sqrt[3]}}]}, Filling -> Axis, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]

Mean Value Theorem

Statement: Let $f:[a,b]\rightarrow\mathbb{R}$ be differentiable. Then, there is at least one point $c\in(a,b)$ such that $f'(c)=\frac{f(b)-f(a)}{b-a}$.

The proof of the mean value theorem is straightforward by applying Rolle’s theorem to the function $g:[a,b]\rightarrow\mathbb{R}$ defined as:

\[ g(x)=f(x)-f(a)-\frac{f(b)-f(a)}{b-a}(x-a) \]

Clearly, $g$ satisfies the conditions of Rolle’s theorem (differentiable and $g(a)=g(b)=0$). We also have:

\[ g'(x)=f'(x)-\frac{f(b)-f(a)}{b-a} \]

Therefore, by Rolle’s theorem, there is a point $c\in(a,b)$ such that $g'(c)=0$, i.e., $f'(c)=\frac{f(b)-f(a)}{b-a}$.

The mean value theorem states that there is a point $c$ inside the interval such that the slope of the function at $c$ is equal to the average slope along the interval. The following example will serve to illustrate the main concept of the mean value theorem. Consider the function $f:[-3,3]\rightarrow\mathbb{R}$ defined as:

\[ f(x)=x^3-3x \]

The slope or first derivative of $f$ is given by:

\[ f'(x)=3x^2-3 \]

The average slope of $f$ on the interval is given by:

\[ \frac{f(3)-f(-3)}{3-(-3)}=\frac{18-(-18)}{6}=6 \]

The two points $x=-\sqrt{3}$ and $x=\sqrt{3}$ have a slope equal to the average slope:

\[ f'(-\sqrt{3})=f'(\sqrt{3})=3(3)-3=6 \]

The figure below shows the function $f$ on the specified interval. The line representing the average slope is shown in black connecting the points $(-3,f(-3))$ and $(3,f(3))$. The red lines show the slopes at the points $x=-\sqrt{3}$ and $x=\sqrt{3}$.

Clear[x]

y = x^3 - 3 x;

averageslope = ((y /. x -> 3) - (y /. x -> -3))/(3 + 3)

dydx = D[y, x];

a = Solve[D[y, x] == averageslope, x]

Point1 = {x /. a[[1, 1]], y /. a[[1, 1]]}

Point2 = {x /. a[[2, 1]], y /. a[[2, 1]]}

Plot[y, {x, -3, 3}, Epilog -> {PointSize[0.04], Point[{-3, y /. x -> -3}], Point[{3, y /. x -> 3}], Line[{{-3, y /. x -> -3}, {3, y /. x -> 3}}], Point[Point1],Point[Point2], Red, Line[{Point1 + {-1, -averageslope}, Point1, Point1 + {1, averageslope}}], Line[{Point2 + {-1, -averageslope}, Point2, Point2 + {1, averageslope}}]}, Filling -> Axis, PlotRange -> All, Frame -> True, AxesLabel -> {"x", "y"}]

Taylor’s Theorem

As an introduction to Taylor’s Theorem, let’s assume that we have a function $f:\mathbb{R}\rightarrow\mathbb{R}$ that can be represented as a polynomial function in the following form:

\[ f(x)=b_0+b_1(x-a)+b_2(x-a)^2+b_3(x-a)^3+\cdots+b_n(x-a)^n+\cdots \]

where $a$ is a fixed point and each $b_i$ is a constant. The best way to find these constants is to find $f$ and its derivatives when $x=a$. So, when $x=a$ we have:

\[ f(a)=b_0+b_1(a-a)+b_2(a-a)^2+\cdots+b_n(a-a)^n+\cdots=b_0 \]

Therefore, $b_0=f(a)$. The derivatives of $f$ have the form:

![Rendered by QuickLaTeX.com \[\begin{split} f'(x)&=b_1 +2b_2(x-a)+3b_3(x-a)^2+4b_4(x-a)^3+\cdots + nb_n(x-a)^{(n-1)}+\cdots\\ f''(x)&=2b_2+2\times 3b_3(x-a)+3\times 4b_4(x-a)^2+\cdots + (n-1)nb_n(x-a)^{(n-2)}+\cdots\\ \cdots\\ f^{(n)}(x)&=n!b_n+\cdots\\ \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-aef1e256eb73ba98e49d14c32698df72_l3.png)

The derivatives of $f$ when $x=a$ have the form:

![Rendered by QuickLaTeX.com \[\begin{split} f'(a)&=b_1 +2b_2(a-a)+3b_3(a-a)^2+4b_4(a-a)^3+\cdots + nb_n(a-a)^{(n-1)}+\cdots\\ f''(a)&=2b_2+2\times 3b_3(a-a)+3\times 4b_4(a-a)^2+\cdots + (n-1)nb_n(a-a)^{(n-2)}+\cdots\\ \cdots\\ f^{(n)}(a)&=n!b_n+\cdots \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-5c78de56db548af2ba738db42b0b465a_l3.png)

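Therefore, $b_n=\frac{f^{(n)}(a)}{n!}$ for every $n$:

\[ f(x)=f(a)+f'(a)(x-a)+\frac{f''(a)}{2!}(x-a)^2+\cdots+\frac{f^{(n)}(a)}{n!}(x-a)^n+\cdots \]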

The above does not really serve as a rigorous proof for Taylor’s Theorem but rather an illustration that if an infinitely differentiable function can be represented as the sum of an infinite number of polynomial terms, then, the Taylor series form of a function defined at the beginning of this section is obtained. The following is the exact statement of Taylor’s Theorem:

Statement of Taylor’s Theorem: Let $f:[c,d]\rightarrow\mathbb{R}$ be $n+1$ times differentiable on an open interval $(c,d)$. Let $a\in(c,d)$. Then, $\forall x\in(c,d)$, $\exists\xi$ between $a$ and $x$ such that:

\[ f(x)=f(a)+f'(a)(x-a)+\frac{f''(a)}{2!}(x-a)^2+\cdots+\frac{f^{(n)}(a)}{n!}(x-a)^n+\frac{f^{(n+1)}(\xi)}{(n+1)!}(x-a)^{n+1} \]

Explanation and Importance: Taylor’s Theorem has numerous implications in analysis in engineering. In the following we will discuss the meaning of the theorem and some of its implications:

- Simply put, Taylor’s Theorem states the following: if the function $f$ and its $n$ derivatives are known at a point $a$, then the function at a point $x$ away from $a$ can be approximated by the value of the Taylor approximation $P_n(x)$:

![Rendered by QuickLaTeX.com \[ f(x)\approx P_n(x)=f(a)+f'(a) (x-a)+\frac{f''(a)}{2!}(x-a)^2+\frac{f'''(a)}{3!}(x-a)^3+\cdots+\frac{f^{(n)}(a)}{n!}(x-a)^n \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-3e6963f7993050f672196bfc4d89a595_l3.png)

  The error (difference between the approximation $P_n(x)$ and the exact $f(x)$) is given by:

![Rendered by QuickLaTeX.com \[ E=f(x)-P_n(x)=\frac{f^{(n+1)}(\xi)}{(n+1)!}(x-a)^{n+1} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-6f724adb2dc1a728869c8daeb5dbc971_l3.png)

  The term $f^{(n+1)}(\xi)$ is bounded when $f^{(n+1)}$ is a continuous function on the interval from $a$ to $x$. Therefore, when $x>a$, the upper bound of the error can be given as:

![Rendered by QuickLaTeX.com \[ |E|\leq \max_{\xi\in[a,x]}\frac{|f^{(n+1)}(\xi)|}{(n+1)!}(x-a)^{n+1} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-53ac158a8b121ea4c8c733f03fc9d67d_l3.png)

  While, when $x<a$, the upper bound of the error can be given as:

![Rendered by QuickLaTeX.com \[ |E|\leq \max_{\xi\in[x,a]}\frac{|f^{(n+1)}(\xi)|}{(n+1)!}(a-x)^{n+1} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-cd230603849c1fab8ff4f1819210e9a5_l3.png)

  The above implies that the error is approximately proportional to $(x-a)^{n+1}$. This is traditionally written as follows:

![Rendered by QuickLaTeX.com \[ f(x)=P_n(x)+\mathcal{O} (h^{n+1}) \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-8c78a80f7e45aa18a6b1ff56c070cc36_l3.png)

  where $h=x-a$. In other words, as $h$ gets smaller and smaller, the error gets smaller in proportion to $h^{n+1}$. As an example, if we choose $h_1$ and then $h_2=h_1/2$, then $E_2\approx E_1/2^{n+1}$. I.e., if the step size is halved, the error is divided by approximately $2^{n+1}$.

- If the function $f:[c,d]\rightarrow \mathbb{R}$ is infinitely differentiable on an interval $(c,d)$, if $a,x\in(c,d)$, and if the error term above tends to zero as $n\rightarrow\infty$, then $f(x)$ is the limit of the sum of the Taylor series. The error which is the difference between the infinite sum and the approximation is called the truncation error as defined in the error section.

- There are many rigorous proofs available for Taylor’s Theorem and the majority rely on the mean value theorem above. Notice that if we choose $n=0$, then the mean value theorem is obtained. For a rigorous proof, you can check one of these links: link 1 or link 2. Note that these proofs rely on the mean value theorem. In particular, L’Hôpital’s rule was used in the Wikipedia proof which in turn relies on the mean value theorem.
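The statement that halving the step divides the error by roughly $2^{n+1}$ can be checked numerically. The following is a minimal sketch under assumed choices that are not in the original text: the function $e^x$, the point $a=0$, the order $n=2$, and the hypothetical names `fexp`, `p2`, and `err`.

```mathematica
ClearAll[x, fexp, p2, err]
fexp = Exp[x];
(* Second-order Taylor polynomial (n = 2) around a = 0 *)
p2 = Normal[Series[fexp, {x, 0, 2}]];
(* Truncation error E = f(h) - P_2(h) for a step h away from a = 0 *)
err[h_] := (fexp - p2) /. x -> h
(* Ratio of the errors when the step is halved; compare with 2^(n+1) = 8 *)
err[0.1]/err[0.05]
```

The ratio should come out close to 8 rather than exactly 8, because the higher-order terms of the series also contribute to the error.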

The following code illustrates the difference between the function $f(x)=\sin{x}+0.01x^2$ and the Taylor polynomial $P_n(x)$. You can download the code, change the function, the point $a$, and the range of the plot to see how the Taylor series of other functions behave.

Taylor[y_, x_, a_, n_] := (y /. x -> a) +

Sum[(D[y, {x, i}] /. x -> a)/i!*(x - a)^i, {i, 1, n}]

f = Sin[x] + 0.01 x^2;

Manipulate[

s = Taylor[f, x, 1, nn];

Grid[{{Plot[{f, s}, {x, -10, 10}, PlotLabel -> "f(x)=Sin[x]+0.01x^2",

PlotLegends -> {"f(x)", "P(x)"},

PlotRange -> {{-10, 10}, {-6, 30}},

ImageSize -> Medium]}, {Expand[s]}}], {nn, 1, 30, 1}]

The Mathematica function Series can also be used to generate the Taylor expansion of any function:

View Mathematica Code

Series[Tan[x],{x,0,7}]

Series[1/(1+x^2),{x,0,10}]

The following tool shows how the Taylor series expansion around a chosen point can be used to provide an approximation of different orders to a cubic polynomial. Use the slider to change the order of the series expansion. The tool provides the error of the approximation at a second point. What happens when the order reaches 3?
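The question above can also be explored without the interactive tool. The sketch below uses a hypothetical cubic, expansion point, and evaluation point chosen only for illustration (none of them come from the original tool), and tabulates the error of the Taylor polynomial for orders 0 through 3.

```mathematica
ClearAll[x, cubic, x0]
(* Hypothetical cubic and expansion point, chosen only for illustration *)
cubic = 2 x^3 - x^2 + 4 x - 1;
x0 = 1;
(* Error of the Taylor polynomial of order k around x0, evaluated at x = 2 *)
Table[{k, Expand[cubic - Normal[Series[cubic, {x, x0, k}]]] /. x -> 2}, {k, 0, 3}]
```

At order 3 the error is exactly zero, since the fourth and higher derivatives of a cubic vanish and the Taylor polynomial reproduces the cubic itself.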

Applications and Examples

Maclaurin Series

The following are the Maclaurin series for some basic infinitely differentiable functions:

![Rendered by QuickLaTeX.com \[\begin{split} e^x&=1+x+\frac{x^2}{2!}+\frac{x^3}{3!}+\cdots = \sum_{n=0}^\infty \frac{x^n}{n!}\\ \sin{x}&=x-\frac{x^3}{3!}+\frac{x^5}{5!}-\cdots = \sum_{n=0}^\infty \frac{(-1)^{n}x^{2n+1}}{(2n+1)!}\\ \cos{x}&=1-\frac{x^2}{2!}+\frac{x^4}{4!}-\cdots = \sum_{n=0}^\infty \frac{(-1)^{n}x^{2n}}{(2n)!} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-6422a3e6e7ea2c1164307e3d60432022_l3.png)

Numerical Differentiation

Taylor’s Theorem provides a means for approximating the derivatives of a function. The first-order Taylor approximation of a function $f$ is given by:

\[ f(x+h)=f(x)+f'(x)h+\mathcal{O}(h^2) \]

where $h$ is the step size. Then:

\[ f'(x)=\frac{f(x+h)-f(x)}{h}+\mathcal{O}(h) \]

Forward finite-difference

If we use the Taylor series approximation to estimate the value of the function $f$ at a point $x_{i+1}$ knowing the values at point $x_i$, then we have:

\[ f(x_{i+1})=f(x_i)+f'(x_i)h+\mathcal{O}(h^2) \tag{1} \]

where $h=x_{i+1}-x_i$. In this case, the forward finite-difference can be used to approximate $f'(x_i)$ as follows:

\[ f'(x_i)=\frac{f(x_{i+1})-f(x_i)}{h}+\mathcal{O}(h) \]

Backward finite-difference

If we use the Taylor series approximation to estimate the value of the function $f$ at a point $x_{i-1}$ knowing the values at point $x_i$, then we have:

\[ f(x_{i-1})=f(x_i)-f'(x_i)h+\mathcal{O}(h^2) \tag{2} \]

where $h=x_i-x_{i-1}$. In this case, the backward finite-difference can be used to approximate $f'(x_i)$ as follows:

\[ f'(x_i)=\frac{f(x_i)-f(x_{i-1})}{h}+\mathcal{O}(h) \]

Centred Finite Difference

The centred finite difference can provide a better estimate for the derivative of a function at a particular point. If the values of a function $f$ are known at the points $x_{i-1}<x_i<x_{i+1}$ and $x_{i+1}-x_i=x_i-x_{i-1}=h$, then we can use the Taylor series to find a good approximation for the derivative as follows:

![Rendered by QuickLaTeX.com \[ \begin{split} f(x_{i+1})&=f(x_i)+f'(x_i) h+\frac{f''(x_i)}{2!}h^2+\mathcal{O}(h^3)\\ f(x_{i-1})&=f(x_i)+f'(x_i)(-h)+\frac{f''(x_i)}{2!}h^2+\mathcal{O}(h^3) \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-00e8cc7cdc58c1bc30c47e0912a8d31d_l3.png)

Subtracting the above two equations and dividing by $2h$ gives the following:

\[ f'(x_i)=\frac{f(x_{i+1})-f(x_{i-1})}{2h}+\mathcal{O}(h^2) \]
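The three finite-difference formulas can be compared numerically. The following is a minimal sketch, assuming the test function $\sin{x}$ and the point $x_i=1$ (both illustrative choices that are not in the original text); the names `fun`, `xi0`, `forward`, `backward`, and `centred` are hypothetical.

```mathematica
ClearAll[fun, xi0, exact, forward, backward, centred]
(* Test function and evaluation point are illustrative choices *)
fun[t_] := Sin[t]
xi0 = 1.;
exact = Cos[xi0];
forward[h_] := (fun[xi0 + h] - fun[xi0])/h
backward[h_] := (fun[xi0] - fun[xi0 - h])/h
centred[h_] := (fun[xi0 + h] - fun[xi0 - h])/(2 h)
(* Absolute errors for a sequence of halved step sizes *)
TableForm[
 Table[{h, Abs[forward[h] - exact], Abs[backward[h] - exact],
   Abs[centred[h] - exact]}, {h, {0.1, 0.05, 0.025}}],
 TableHeadings -> {None, {"h", "forward", "backward", "centred"}}]
```

Each time $h$ is halved, the forward and backward errors should shrink by a factor of about 2, consistent with $\mathcal{O}(h)$, while the centred error should shrink by a factor of about 4, consistent with $\mathcal{O}(h^2)$.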

Building Differential Equations

See momentum balance and beam approximation for examples where the Taylor’s approximation is used to build a differential equation.

Approximating Continuous Functions

Taylor’s theorem essentially discusses approximating differentiable functions using polynomials. The approximation can be as close as needed by adding more polynomial terms and/or by ensuring that the step size $h$ is small enough. It is important to realize that polynomial approximations are valid for continuous functions that are not necessarily differentiable at every point. Let $f:[a,b]\rightarrow\mathbb{R}$ be continuous, then the Stone-Weierstrass Theorem states that for any small number $\varepsilon>0$, there exists a polynomial function $P(x)$ such that the biggest difference between $f$ and $P$ is less than $\varepsilon$, i.e., $\max_{x\in[a,b]}|f(x)-P(x)|<\varepsilon$. In other words, we can always find a polynomial function that approximates any continuous function on the interval $[a,b]$ within any degree of accuracy sought! This fact is extremely important in all engineering applications. Essentially, it allows modelling any continuous functions using polynomials. In other words, if a problem wishes to find the distribution of a variable (stress, strain, velocity, density, etc.) as a function of position, and if this variable is continuous, then, in the majority of applications we can assume differentiability and a Taylor approximation for the unknown function.
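One constructive way to see this kind of approximation at work is through Bernstein polynomials, which converge uniformly to any continuous function on $[0,1]$. The sketch below is an illustration under stated assumptions and not the method of the original text: the function $|t-1/2|$ (continuous but not differentiable at $t=1/2$), the interval $[0,1]$, and the names `kink`, `bernstein`, and `maxErr` are all chosen here for the example.

```mathematica
ClearAll[x, kink, bernstein, maxErr]
(* Continuous on [0, 1] but not differentiable at t = 1/2 *)
kink[t_] := Abs[t - 1/2]
(* Bernstein polynomial of degree n for kink on [0, 1] *)
bernstein[n_] := Sum[kink[k/n] Binomial[n, k] x^k (1 - x)^(n - k), {k, 0, n}]
(* Largest difference on a uniform sample grid of 201 points *)
maxErr[n_] := Module[{b = bernstein[n]},
  Max[Table[Abs[kink[t] - (b /. x -> t)], {t, 0, 1, 1/200}]]]
N[maxErr /@ {4, 16, 64}]
```

The sampled maximum error should decrease as the degree grows, although slowly; Bernstein polynomials are used here because they are simple to write down, not because they are the most efficient approximants.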

Examples

Example 1

Apply Taylor’s Theorem to the function $f:[0,\infty)\rightarrow\mathbb{R}$ defined as $f(x)=\sqrt{x}$ to estimate the value of $f(5)$. Use $a=4$. Estimate an upper bound for the error.

Solution

The Taylor approximation of the function $f$ around the point $a=4$ is given as follows:

\[ f(5)\approx f(4)+f'(4)(5-4)+\frac{f''(4)}{2!}(5-4)^2+\frac{f'''(4)}{3!}(5-4)^3+\cdots \]

If $n+1$ terms are used (including $f(4)$), then the upper bound for the error is:

\[ |E|\leq \max_{\xi\in[4,5]}\frac{|f^{(n+1)}(\xi)|}{(n+1)!}(5-4)^{n+1} \]

With $a=4$, an upper bound for the error can be calculated by assuming $\xi=4$. That’s because the absolute values of the derivatives of $f$ attain their maximum value in the interval $[4,5]$ at 4. The derivatives of $f$ have the following form:

![Rendered by QuickLaTeX.com \[\begin{split} f'(x)&=\frac{1}{2\sqrt{x}}\\ f''(x)&=\frac{-1}{4x^{\frac{3}{2}}}=\frac{-1}{4x\sqrt{x}}\\ f'''(x)&=\frac{3}{8x^{\frac{5}{2}}}=\frac{3}{8x^2\sqrt{x}}\\ f''''(x)&=\frac{-15}{16x^{\frac{7}{2}}}=\frac{-15}{16x^3\sqrt{x}} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-9a8fdd6b5e8ef72e4ebdcf3a27418764_l3.png)

The derivatives of the function evaluated at the point $a=4$ can be calculated as:

![Rendered by QuickLaTeX.com \[\begin{split} f'(4)&=\frac{1}{4}\\ f''(4)&=\frac{-1}{32}\\ f'''(4)&=\frac{3}{256}\\ f''''(4)&=\frac{-15}{2048} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-f8b84ec6483460ea038f8902879991f4_l3.png)

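If two terms are used, then:

\[ f(5)\approx f(4)+f'(4)(5-4)=2+\frac{1}{4}=2.25 \]

In this case, the upper bound for the error is:

\[ |E|\leq \frac{|f''(4)|}{2!}(5-4)^2=\frac{1}{64}=0.015625 \]

Using Mathematica, the square root of $5$ approximated to 4 decimal places is $2.2361$. Therefore, the error when two terms are used is:

\[ E=2.2361-2.25=-0.0139 \]

which satisfies that the actual error is less than the upper bound:

\[ |E|=0.0139<0.015625 \]

If three terms are used, then:

\[ f(5)\approx f(4)+f'(4)(5-4)+\frac{f''(4)}{2!}(5-4)^2=2+\frac{1}{4}-\frac{1}{64}=2.234375 \]

In this case, the upper bound for the error is:

\[ |E|\leq \frac{|f'''(4)|}{3!}(5-4)^3=\frac{3}{256\times 6}=\frac{1}{512}\approx 0.00195 \]

The actual error in this case is indeed less than the upper bound:

\[ |E|=|2.2361-2.234375|\approx 0.0017<0.00195 \]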

The following code provides a user-defined function for the Taylor series having the following inputs: a function $f$, the value of $x$, the value of $a$, and the number of terms $n$ including the constant term.

View Mathematica Code

Clear[x]

Taylor[f_, xi_, a_, n_] := Sum[(D[f, {x, i}] /. x -> a)/i!*(xi - a)^i, {i, 0, n - 1}]

f = (x)^(0.5);

a = Table[{i, Taylor[f, 5, 4, i]}, {i, 2, 21}];

a // MatrixForm
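The comparison between the actual error and the upper bound in this example can be tabulated for several numbers of terms. The sketch below is an illustration only; the names `sq`, `taylorSum`, and `bound` are hypothetical, and the bound uses $\xi=4$ as argued in the solution above.

```mathematica
ClearAll[x, sq, taylorSum, bound]
sq = Sqrt[x];
(* Taylor sum around a = 4 with the given number of terms, evaluated at x = 5 *)
taylorSum[terms_] := Sum[(D[sq, {x, i}] /. x -> 4)/i! (5 - 4)^i, {i, 0, terms - 1}]
(* Error bound: the next derivative is largest in magnitude at xi = 4 on [4, 5] *)
bound[terms_] := Abs[D[sq, {x, terms}] /. x -> 4]/terms! (5 - 4)^terms
(* Columns: number of terms, approximation, actual |error|, upper bound *)
Table[N[{terms, taylorSum[terms], Abs[Sqrt[5] - taylorSum[terms]], bound[terms]}],
 {terms, 2, 5}]
```

In each row the actual error in the third column should be smaller than the bound in the fourth column.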

Example 2

Apply Taylor’s Theorem to the function $f:(-\infty,1]\rightarrow\mathbb{R}$ defined as $f(x)=\sqrt{1-x}$ to estimate the value of $f(0.1)$ and $f(-2)$. Use $a=0$. Estimate an upper bound for the error.

Solution

First, we will calculate the numerical solution for $f(0.1)$ and $f(-2)$:

\[ f(0.1)=\sqrt{0.9}\approx 0.948683 \qquad f(-2)=\sqrt{3}\approx 1.73205 \]

The Taylor approximation around $a=0$ is given as:

\[ f(x)\approx f(0)+f'(0)x+\frac{f''(0)}{2!}x^2+\frac{f'''(0)}{3!}x^3+\cdots \]

If $n+1$ terms are used (including $f(0)$) and if $x>0$, then the upper bound of the error is:

\[ |E|\leq \max_{\xi\in[0,x]}\frac{|f^{(n+1)}(\xi)|}{(n+1)!}x^{n+1} \]

If $x<0$, then the upper bound of the error is:

\[ |E|\leq \max_{\xi\in[x,0]}\frac{|f^{(n+1)}(\xi)|}{(n+1)!}(-x)^{n+1} \]

The derivatives of the function are given by:

![Rendered by QuickLaTeX.com \[ \begin{split} f(x)&=(1-x)^{0.5}\\ f'(x)&=-\frac{0.5}{(1-x)^{0.5}}\\ f''(x)&=-\frac{0.25}{(1-x)^{1.5}}\\ f'''(x)&=-\frac{0.375}{(1-x)^{2.5}}\\ f''''(x)&=-\frac{0.9375}{(1-x)^{3.5}}\\ f^{(5)}(x)&=-\frac{3.28125}{(1-x)^{4.5}}\\ f^{(6)}(x)&=-\frac{14.7656}{(1-x)^{5.5}}\\ f^{(7)}(x)&=-\frac{81.2109}{(1-x)^{6.5}}\\ f^{(8)}(x)&=-\frac{527.871}{(1-x)^{7.5}} \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-190593249346463c20dfff83f8444274_l3.png)

When evaluated at $a=0$, these have the values:

![Rendered by QuickLaTeX.com \[\begin{split} f(0)&=1\\ f'(0)&=-0.5\\ f''(0)&=-0.25\\ f'''(0)&=-0.375\\ f''''(0)&=-0.9375\\ f^{(5)}(0)&=-3.28125\\ f^{(6)}(0)&=-14.7656\\ f^{(7)}(0)&=-81.2109\\ f^{(8)}(0)&=-527.871 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-c0ee12ba22152bd151f097e4b85885e4_l3.png)

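For $x=0.1$, and using two terms:

\[ f(0.1)\approx f(0)+f'(0)(0.1)=1-0.5\times 0.1=0.95 \]

Using three terms:

\[ f(0.1)\approx f(0)+f'(0)(0.1)+\frac{f''(0)}{2!}(0.1)^2=1-0.5\times 0.1-\frac{0.25}{2}0.1^2=0.94875 \]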

Using four terms:

![Rendered by QuickLaTeX.com \[ \begin{split} f(0.1)&\approx f(0)+f'(0)(0.1)+\frac{f''(0)}{2!}(0.1)^2+\frac{f'''(0)}{3!}(0.1)^3\\ &=1-0.5\times 0.1-\frac{0.25}{2}0.1^2-\frac{0.375}{3\times 2}0.1^3 = 0.9486875 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-47eba30c9945bc0e80fb8568bc52b531_l3.png)

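The terms are getting closer to each other and four terms provide a good approximation. The error term in the theorem gives an upper bound for $|E|$ as follows:

\[ |E|\leq \max_{\xi\in[0,0.1]}\frac{|f''''(\xi)|}{4!}(0.1)^4 \]

The maximum value would be obtained for $\xi=0.1$. Therefore:

\[ |E|\leq \frac{0.9375}{4!\,(1-0.1)^{3.5}}(0.1)^4\approx 5.65\times 10^{-6} \]

Indeed, the actual value of the error is less than the upper bound:

\[ |E|=|0.9486833-0.9486875|\approx 4.2\times 10^{-6}<5.65\times 10^{-6} \]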

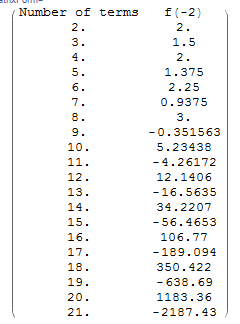

For $x=-2$, the Taylor series for this function around $a=0$ doesn’t give a very good approximation as will be shown here but rather keeps oscillating. First, using two terms:

\[ f(-2)\approx f(0)+f'(0)(-2)=1-0.5\times (-2)=2 \]

Using three terms:

\[ f(-2)\approx f(0)+f'(0)(-2)+\frac{f''(0)}{2!}(-2)^2=1-0.5\times (-2)-\frac{0.25}{2}(-2)^2=1.5 \]

Using four terms:

![Rendered by QuickLaTeX.com \[\begin{split} f(-2)&\approx f(0)+f'(0)(-2)+\frac{f''(0)}{2!}(-2)^2+\frac{f'''(0)}{3!}(-2)^3\\ &=1-0.5\times (-2)-\frac{0.25}{2}(-2)^2-\frac{0.375}{3\times 2}(-2)^3 \\ &= 2 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-2aa35475bf33994fc195580a12b50fa1_l3.png)

Using five terms:

![Rendered by QuickLaTeX.com \[\begin{split} f(-2)&\approx f(0)+f'(0)(-2)+\frac{f''(0)}{2!}(-2)^2+\frac{f'''(0)}{3!}(-2)^3+\frac{f''''(0)}{4!}(-2)^4\\ &=1-0.5\times (-2)-\frac{0.25}{2}(-2)^2-\frac{0.375}{3\times 2}(-2)^3 -\frac{0.9375}{4\times 3\times 2}(-2)^4\\ &= 1.375 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-81ea35a827bebb3575f0d9f774307ea7_l3.png)

Using six terms:

\[\begin{split} f(-2)&\approx f(0)+f'(0)(-2)+\frac{f''(0)}{2!}(-2)^2+\frac{f'''(0)}{3!}(-2)^3+\frac{f''''(0)}{4!}(-2)^4+\frac{f^{(5)}(0)}{5!}(-2)^5\\ &=1-0.5\times (-2)-\frac{0.25}{2}(-2)^2-\frac{0.375}{3\times 2}(-2)^3 -\frac{0.9375}{4\times 3\times 2}(-2)^4-\frac{3.28125}{5\times 4\times 3\times 2}(-2)^5\\ &=2.25 \end{split} \]

In fact, the following table gives the values up to 21 terms. It is clear that the Taylor series is diverging.

The error term in the theorem gives an upper bound for ![]() when six terms are used as follows:

when six terms are used as follows:

![]()

The maximum value will be obtained when ![]() :

:

![]()

This is a large upper bound and indicates that using six terms is not giving a good approximation. In general, the Taylor series works best if the distance between $x$ and $a$ is as small as possible. For some functions, like $e^x$, $\sin x$, and $\cos x$, the Taylor series always converges. However, for functions with square roots, the Taylor series converges only when $x$ is relatively close to $a$. There are some analytical conditions that would indicate the radius of convergence of a Taylor series, that is, the largest distance $|x-a|$ for which the series converges; however, this is beyond the scope of this course!
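To make the effect of the distance $|x-a|$ concrete, the following Python sketch compares the six-term error bound with the actual error at $x=0.1$ and $x=-2$. It assumes $f(x)=\sqrt{1-x}$ with $a=0$ and the remainder form of Taylor's theorem, with $|f^{(6)}(\xi)| = 14.765625\,(1-\xi)^{-5.5}$. The function names are illustrative and not part of the course code.

```python
import math


def six_term_bound(x):
    """Upper bound on |E| for the six-term Maclaurin sum of sqrt(1 - x), x < 1."""
    # |f^(6)(xi)| = 14.765625 * (1 - xi)**(-5.5) is largest at the largest
    # xi between 0 and x.
    xi_worst = max(0.0, x)
    max_sixth_derivative = 14.765625 * (1.0 - xi_worst) ** (-5.5)
    return max_sixth_derivative / math.factorial(6) * abs(x) ** 6


def six_term_sum(x):
    """Sum of the first six Maclaurin terms of sqrt(1 - x)."""
    # Derivatives of sqrt(1 - x) at 0, orders 0 to 5
    derivs = [1.0, -0.5, -0.25, -0.375, -0.9375, -3.28125]
    return sum(d / math.factorial(k) * x ** k for k, d in enumerate(derivs))


for x in (0.1, -2.0):
    actual = abs(math.sqrt(1.0 - x) - six_term_sum(x))
    print(f"x = {x}: bound = {six_term_bound(x):.3e}, actual error = {actual:.3e}")
```

In both cases the actual error stays below the bound, but the bound at $x=-2$ is many orders of magnitude larger than the one at $x=0.1$.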

The following code provides a user-defined function for the Taylor series having the following inputs: a function $f$, the value of $x$ at which the series is evaluated (`xi` in the code), the value of $a$, and the number of terms $n$ including the constant term.

View Mathematica Code

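Clear[x]

(* Sum of the first n terms (constant term included) of the Taylor series of f about a, evaluated at xi *)
Taylor[f_, xi_, a_, n_] := Sum[(D[f, {x, i}] /. x -> a)/i!*(xi - a)^i, {i, 0, n - 1}]

f = (1 - x)^(0.5);

(* Partial sums at x = -2 using 2 to 21 terms: these diverge *)
t1 = Table[{i, Taylor[f, -2, 0, i]}, {i, 2, 21}];

t1 // MatrixForm

(* Partial sums at x = 0.1 using 2 to 21 terms: these converge *)
t2 = Table[{i, Taylor[f, 0.1, 0, i]}, {i, 2, 21}];

t2 // MatrixForm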

Example 3

Use zero through fourth order Taylor series expansions to approximate the value of the function $f$ defined as $f(x)=-0.1x^4-0.15x^3-0.5x^2-0.25x+1.2$ at $x=1$. Use $a=0$. Calculate the error associated with each expansion.

Solution

The true value of the function at $x=1$ is given by:

\[ f(1)=-0.1(1)^4-0.15(1)^3-0.5(1)^2-0.25(1)+1.2=0.2 \]

The zero order Taylor series expansion around $a=0$ has the following form:

\[ f(1)\approx f(0)=1.2 \]

The error in this case is given by:

\[ E=f(1)-1.2=0.2-1.2=-1 \]

The first order Taylor series expansion around $a=0$ has the following form:

\[ f(1)\approx f(0)+(1-0)f'(0)=1.2+(1-0)(-0.25)=0.95 \]

The error in this case is given by:

\[ E=f(1)-0.95=0.2-0.95=-0.75 \]

The second order Taylor series expansion around $a=0$ has the following form:

\[ f(1)\approx f(0)+(1-0)f'(0)+\frac{(1-0)^2}{2!}f''(0)=1.2+(1-0)(-0.25)+\frac{1}{2}(-1)=0.45 \]

The error in this case is given by:

\[ E=f(1)-0.45=0.2-0.45=-0.25 \]

The third order Taylor series expansion around $a=0$ has the following form:

![Rendered by QuickLaTeX.com \[\begin{split} f(1)& \approx f(0)+(1-0)f'(0)+\frac{(1-0)^2}{2!}f''(0)+\frac{(1-0)^3}{3!}f'''(0)\\ & = 1.2+(1-0)(-0.25)+\frac{1}{2}(-1)+\frac{1}{6}(-0.9)\\ &=0.3 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-a6abb7413f69594e5072184e1223ad25_l3.png)

The error in this case is given by:

\[ E=f(1)-0.3=0.2-0.3=-0.1 \]

The fourth order Taylor series expansion around $a=0$ has the following form:

![Rendered by QuickLaTeX.com \[\begin{split} f(1)&\approx f(0)+(1-0)f'(0)+\frac{(1-0)^2}{2!}f''(0)+\frac{(1-0)^3}{3!}f'''(0)+\frac{(1-0)^4}{4!}f''''(0)\\ & = 1.2+(1-0)(-0.25)+\frac{1}{2}(-1)+\frac{1}{6}(-0.9)+\frac{1}{24}(-2.4)\\ & =0.2 \end{split} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-7c26a136b68adec2c4635c8a6d848aa5_l3.png)

The error in this case is given by:

\[ E=f(1)-0.2=0.2-0.2=0 \]

It is important to note that the error reduces to zero when using the fourth order Taylor series approximation. That is because the fourth order Taylor series approximation of a fourth order polynomial function is identical to the function itself. You can think of this as follows: the zero order Taylor approximation provides a “constant” function approximation, the first order provides a linear approximation, the second order provides a parabolic approximation, and the third order provides a cubic approximation. The nth order Taylor series approximation of a polynomial of degree n is identical to the function being approximated!
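The calculations of this example can be reproduced with a short Python sketch. It takes the derivative values at $a=0$ from the expansions above and assumes the error is defined as the true value minus the approximation. The helper name `taylor_approximations` is hypothetical.

```python
import math


def taylor_approximations(derivs_at_a, x, a):
    """Return the order-0, order-1, ... Taylor approximations of f(x) about a.

    derivs_at_a[k] is the k-th derivative of f evaluated at a.
    """
    approximations = []
    total = 0.0
    for k, d in enumerate(derivs_at_a):
        total += d / math.factorial(k) * (x - a) ** k
        approximations.append(total)
    return approximations


# f(0), f'(0), f''(0), f'''(0), f''''(0) as used in the expansions above
derivs = [1.2, -0.25, -1.0, -0.9, -2.4]
approx = taylor_approximations(derivs, x=1.0, a=0.0)

# The fourth order expansion of a fourth order polynomial is exact,
# so its value is used as the true value of f(1).
true_value = approx[-1]
for order, value in enumerate(approx):
    print(f"order {order}: f(1) ~ {value:.4f}, error = {true_value - value:+.4f}")
```

Passing a longer or shorter list of derivative values changes the highest order computed, so the same helper can be reused for other polynomials.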

Problems

- Use the Taylor series for the function $f$ defined as ![]() to estimate the value of $f(10)$. Use $a=4$ once and ![]() for another time. Estimate an upper bound for the error for each approximation. Comment on the behaviour of the Taylor series of this function. (Hint: for this particular function, a Taylor expansion around $a=4$ should not give a proper approximation for $f(10)$ because 10 and 4 are far from each other.)

- Using the Taylor series and setting $a=0$, derive the polynomial forms of the functions listed in the MacLaurin series section.

- Use Taylor’s Theorem to find an estimate for ![]() at ![]() with ![]() . Employ the zero-, first-, second-, and third-order versions and compute the truncation error for each case.

- Using the MacLaurin series expansion for ![]() , find an approximation for ![]() as a function of the number of terms up to 5 terms. For each case, find the relative error ![]() and the relative approximate error ![]() .

- Use zero- through third-order Taylor series expansions to predict ![]() for

![Rendered by QuickLaTeX.com \[ f(x) = 25x^3-6x^2+7x-88 \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-e0e7f2317b87a88441b09ebd97a20210_l3.png)

assuming that ![]() . Compute ![]() for each approximation.

- Derive the MacLaurin series for the following functions:

![Rendered by QuickLaTeX.com \[ f_1(x)=\frac{1}{1+x}\quad f_2(x)=\frac{1}{1-x}\quad f_3(x)=\frac{1}{1+x^2} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-442dc2657391635b9800270f31f1253a_l3.png)

Note: The resulting series are only convergent for $|x|<1$.

- Derive the MacLaurin series for the following functions:

![Rendered by QuickLaTeX.com \[ f_1(x)=\ln{(1+x)}\quad \quad f_2(x)=\tan^{-1}{x} \]](https://engcourses-uofa.ca/wp-content/ql-cache/quicklatex.com-d86201ed55f9cca5ae501ff687de9e10_l3.png)

Note: The resulting series are only convergent for