Python Tutorial with YOLO Implementation: Machine Learning: Identifying Objects

Overview

Please note that this is one way of identifying objects using machine learning in Python. For the example below, I will be using the Python module ‘OpenCV’ with the “YOLOv3-320” model and the “COCO” class names.

Link to YOLO website and coco.names file.

Link to my Google Colab code.

Modules used:

- cv2

- numpy

- os

- glob

Accessing Files

Since Google Colab runs in the cloud, it does not have access to your Google Drive by default. The program below lets you access your drive. Run the code, click the link, and then paste the authorization code you get into the input box. The drive will be mounted at “/content/gdrive/”.

from google.colab import drive

# Mount Google Drive at the path mentioned above
drive.mount("/content/gdrive")

Converting Video File to Images

In Google Colab, live capture from a camera is disabled, so we will process the video as a series of images instead. First, we call ‘cv.VideoCapture()’ with the path to the video file. Then we read the video one frame at a time with ‘capture.read()’ and save each frame to the current directory (in this case “/content/”).

import cv2 as cv

capture = cv.VideoCapture("example.mp4")
count = 0

while True:
    # read() returns a success flag and the next frame
    isTrue, frame = capture.read()
    if not isTrue:
        break  # no more frames, or the file could not be opened
    # Save the frame as frame000.jpg, frame001.jpg, ...
    cv.imwrite("frame%03d.jpg" % count, frame)
    count += 1

capture.release()

Import our Class IDs

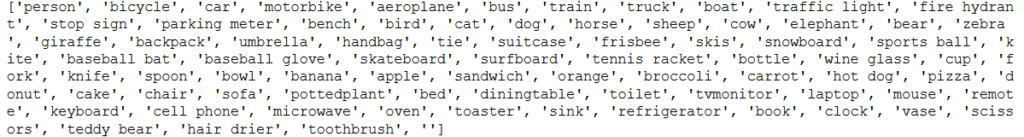

Next, we import the files that hold our weights, configuration, and class names. Since we are using YOLOv3, we will use the COCO set, which consists of 80 common objects like “person”, “cup”, “cell phone”, etc. The network's outputs are indexed and have no name associated with each index, so we will build that mapping below.

We first open the text file named ‘coco.names’ using ‘with open() as f’, with the first parameter being the file name and the second parameter being the mode (“r” for reading). The variable ‘classNames’ will store a list of the names in index order. ‘f.read()’ reads the whole file as a string, then ‘.split("\n")’ splits that string into a list at each newline.

importClassNames = "coco.names"
classNames = []

with open(importClassNames, "r") as f:
    # strip() drops the trailing newline so the list has no empty last entry
    classNames = f.read().strip().split("\n")

If I were to print ‘classNames’ after running this code, I get an output looking like this:

This is what we want since the list is in order and indexed.
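To make the index-to-name lookup concrete, here is a minimal sketch. The string `sample_text` is a hypothetical three-line stand-in for the contents of ‘coco.names’ (the real file lists 80 names), and `class_id` is an arbitrary example value. It also shows why a trailing newline in the file can leave an empty entry at the end of the list.

```python
# Hypothetical stand-in for the text of coco.names (the real file has 80 lines).
sample_text = "person\nbicycle\ncar\n"

# Splitting on "\n" alone keeps an empty string after the final newline.
raw_names = sample_text.split("\n")

# Stripping surrounding whitespace first avoids that empty last entry.
class_names = sample_text.strip().split("\n")

# A class ID predicted by the network is simply an index into this list.
class_id = 2
print(raw_names)
print(class_names)
print(class_names[class_id])
```

Because the list order matches the order the network was trained with, the file should be used exactly as downloaded, without re-sorting or removing lines.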

Implementing Machine Learning Algorithm

Next, we will load the pretrained network using the two files found on this website. The model is a deep neural network, which OpenCV handles through its ‘dnn’ module. We will be using the variable ‘net’ later.

import cv2 as cv

modelConfiguration = "yolov3.cfg"
modelWeights = "yolov3.weights"

# Load the Darknet configuration and weights into an OpenCV network
net = cv.dnn.readNet(modelConfiguration, modelWeights)

Converting ‘frame’ to be Used in the Algorithm

Right now the picture cannot be processed by the network, so we will convert the frame into something called a ‘blob’. ‘cv.dnn.blobFromImage()’ takes in a few parameters: image, scale factor, size, mean, swap RB, and crop.
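As a rough illustration of what the blob conversion does to the pixel data, here is a pure-Python sketch. The helper `tiny_blob` and the 1x2 test image are hypothetical and only mimic the scaling and channel reordering; the resize to the network input size is left out and the mean is assumed to be zero, so this is not a replacement for ‘cv.dnn.blobFromImage()’.

```python
def tiny_blob(image_bgr, scale=1 / 255, swap_rb=True):
    """Mimic the scaling and channel reordering of a blob, without resizing.

    image_bgr is a nested list shaped [height][width][3] in OpenCV's BGR order.
    The result is shaped [1][3][height][width] (batch, channel, row, column).
    """
    # Swapping R and B turns BGR into RGB, which is what the network expects.
    channel_order = [2, 1, 0] if swap_rb else [0, 1, 2]
    planes = []
    for c in channel_order:
        # One 2-D plane per channel, with every value scaled to the 0-1 range.
        plane = [[pixel[c] * scale for pixel in row] for row in image_bgr]
        planes.append(plane)
    return [planes]  # a batch containing a single image


# Hypothetical 1x2 image: one pure-blue pixel and one pure-red pixel (BGR order).
image = [[[255, 0, 0], [0, 0, 255]]]
blob = tiny_blob(image)

# Batch size, channels, height, width
print(len(blob), len(blob[0]), len(blob[0][0]), len(blob[0][0][0]))
# First plane after the swap holds the red values
print(blob[0][0])
```

The point of the sketch is the change of layout: the image goes from rows of pixels to one plane per color channel, wrapped in a batch of one.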

We will then set the blob as the input of the network. The ‘layerNames’ variable holds the names of all the layers in the network.

Next, we have to find the output layers using the function ‘net.getUnconnectedOutLayers()’. Note that this only returns the indices of the output layers, so we will use each index to look up the name in ‘layerNames’. Since the network numbers its layers starting at ‘1’ and our list of names starts at ‘0’, we will shift the index by ‘-1’.

Next, we will make a variable called ‘outputs’ by passing ‘outputNames’ to the network using the function ‘net.forward()’.
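The index shift can be sketched without OpenCV. The layer names and index values below are hypothetical (a real YOLOv3 network has far more layers), and the helper `to_names` is only for illustration. Depending on the OpenCV version, the indices may arrive as a flat list or as a list of one-element lists, so the sketch accepts both.

```python
# Hypothetical layer list; a real YOLOv3 network has far more layers.
layer_names = ["conv_0", "conv_1", "yolo_2", "conv_3", "yolo_4"]

# Output-layer indices are 1-based, so 3 means the third layer.
flat_indices = [3, 5]          # flat format
nested_indices = [[3], [5]]    # nested format


def to_names(indices):
    names = []
    for i in indices:
        # Unwrap a one-element list if the index is nested.
        position = i[0] if isinstance(i, (list, tuple)) else i
        # Shift from the 1-based layer number to the 0-based list index.
        names.append(layer_names[position - 1])
    return names


print(to_names(flat_indices))
print(to_names(nested_indices))
```

Both calls give the same names, which is why handling the two formats in one place keeps the rest of the code independent of the installed version.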

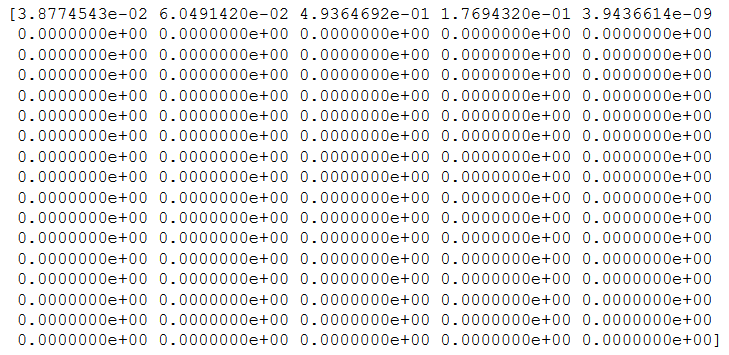

Now the network has returned a bunch of values, including the bounding boxes and their confidence percentages, so the next part of the program filters those results to be displayed later.

‘outputs’ holds the results we will need for the display step.

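import numpy as np

def getOutputs(frame):
    # Scale pixels to 0-1, resize to the network input size, and swap BGR to RGB
    blob = cv.dnn.blobFromImage(frame, 1/255, (widthheight, widthheight),
                                [0, 0, 0], swapRB=True, crop=False)
    net.setInput(blob)
    layerNames = net.getLayerNames()
    # Output layer indices are 1-based; flatten() handles both the nested
    # and the flat format that different OpenCV versions return
    outputIndices = np.array(net.getUnconnectedOutLayers()).flatten()
    outputNames = [layerNames[i - 1] for i in outputIndices]
    outputs = net.forward(outputNames)
    return outputs

An example of the output looks something like this: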

Each detection in the output has 85 values.

Creating What to be Displayed

Now that we have the results, we will write the code that labels each bounding box with its most likely object. First, note that each output consists of 85 values instead of the 80 in our name list. The first 5 values are as follows: center x, center y, width, height, and the confidence that an object is present. The remaining 80 values are the probabilities of each class. We will make a new variable ‘scores’ consisting of only those class probabilities. We will then get the ‘classID’, which is the index of the highest probability, and store that probability in ‘confidence’.

The next thing is to check if that probability is higher than our threshold. If it is, we will make a bounding box for it and append the box, confidence value, and class ID to our lists. To make a bounding box, we need 4 values: the x and y coordinates, the width, and the height. The network reports these as fractions of the frame size, with x and y marking the center of the box, so the code below scales them to pixels and shifts the center to the top-left corner.
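Here is a small worked example of decoding a single detection, written in plain Python. The helper `decode_detection`, the frame size, and the detection values are hypothetical, and only 3 class scores are used instead of 80 to keep it short.

```python
def decode_detection(values, frame_width, frame_height, threshold=0.5):
    """Turn one detection vector into (class_id, confidence, [x, y, w, h])."""
    # Everything after the first 5 values is a per-class probability.
    scores = values[5:]
    class_id = max(range(len(scores)), key=lambda i: scores[i])
    confidence = scores[class_id]
    if confidence <= threshold:
        return None  # too uncertain, skip this detection

    # Width and height are fractions of the frame size.
    w = int(values[2] * frame_width)
    h = int(values[3] * frame_height)
    # The first two values are the box center; shift to the top-left corner.
    x = int(values[0] * frame_width - w / 2)
    y = int(values[1] * frame_height - h / 2)
    return class_id, confidence, [x, y, w, h]


# center x, center y, width, height, objectness, then 3 class scores
detection = [0.5, 0.5, 0.25, 0.5, 0.9, 0.1, 0.8, 0.05]
result = decode_detection(detection, frame_width=640, frame_height=480)
print(result)  # (1, 0.8, [240, 120, 160, 240])
```

A box centered in a 640x480 frame that is 160 pixels wide and 240 pixels tall has its top-left corner at (240, 120), which is what the subtraction of half the width and half the height produces.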

‘cv.dnn.NMSBoxes()’ removes overlapping boxes for the same object (non-maximum suppression) and returns the indices of the boxes to keep, which we store in ‘outputBox’; how much overlap is tolerated is controlled by ‘nmsThreshold’. We will finally draw the boxes on the frame using ‘cv.rectangle()’ and ‘cv.putText()’, iterating through ‘outputBox’ as shown below. ‘cv.rectangle()’ takes in the image, first corner, second corner, color, and thickness. ‘cv.putText()’ takes the image, displayed text, coordinate, font, font scale, color, and thickness. The coordinates come from the bounding boxes, and the displayed text from the other lists.
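To show what the overlap threshold controls, here is a simplified greedy non-maximum suppression in plain Python. It is a sketch of the general idea, not OpenCV's implementation, and the helpers `iou` and `simple_nms` as well as the boxes and scores are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x, y, w, h] boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def simple_nms(boxes, scores, score_threshold, nms_threshold):
    """Return the indices of the boxes to keep, best score first."""
    candidates = [i for i, s in enumerate(scores) if s > score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in kept):
            kept.append(i)
    return kept


# Two nearly identical boxes around one object, plus one separate box.
boxes = [[100, 100, 50, 50], [102, 102, 50, 50], [300, 300, 40, 40]]
scores = [0.9, 0.8, 0.7]
print(simple_nms(boxes, scores, score_threshold=0.5, nms_threshold=0.6))  # [0, 2]
```

The first two boxes overlap by about 85 percent, which is above the 0.6 threshold, so only the higher-scoring one survives. Raising the threshold keeps more overlapping boxes, and lowering it removes more.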

import numpy as np

def displayBox(outputs):
    # Draws on the global 'frame' that is set in the main section
    height, width, channels = frame.shape
    boundingBox = []
    classIDs = []
    confidenceValues = []

    for output in outputs:
        for values in output:
            scores = values[5:]
            classID = np.argmax(scores)
            confidence = scores[classID]
            if confidence > confidenceThreshold:
                # Scale the relative size to pixels, then move from center to top-left
                w, h = int(values[2] * width), int(values[3] * height)
                x = int(values[0] * width - w/2)
                y = int(values[1] * height - h/2)
                boundingBox.append([x, y, w, h])
                classIDs.append(classID)
                confidenceValues.append(float(confidence))

    # Non-maximum suppression returns the indices of the boxes to keep
    outputBox = cv.dnn.NMSBoxes(boundingBox, confidenceValues,
                                confidenceThreshold, nmsThreshold)

    # flatten() handles both the nested and the flat format of the result
    for index in np.array(outputBox).flatten():
        index = int(index)
        color = colors[classIDs[index]]
        x, y, w, h = boundingBox[index][:4]
        cv.rectangle(frame, (x, y), (x + w, y + h), color, 2)
        label = (str(classNames[classIDs[index]].capitalize())
                 + " " + str(int(confidenceValues[index] * 100)) + " %")
        cv.putText(frame, label, (x + 5, y + 16), font, 0.5, color, 2)

Main Function

We will first list all of our constants here so that we can easily change them later. We then collect the saved frames using the path ‘/content/’ followed by the pattern ‘frame*.jpg’. A pattern of ‘*.*’ would also match the video and model files in that directory, which ‘cv.imread()’ cannot read. We sort the files in frame order and then display the file name and the annotated picture for every frame.

The function ‘glob.glob()’ returns an unsorted list of the files matching that path pattern.

‘sorted()’ with ‘os.path.getmtime’ as the key sorts the list by the time each file was last modified, which here is the same as ordering the frames by number. ‘frameSize’ is the factor by which ‘frame’ is scaled before it is passed to the network and displayed. A smaller value makes each frame quicker to handle, at the cost of detail and therefore accuracy. The network input size itself is set by ‘widthheight’. Inside the for loop, ‘os.path.basename()’ removes the directory (“/content/”) and outputs just the file name. (‘.strip()’ is not suited to this, because it removes any of the given characters from both ends of the string rather than a prefix.) ‘cv.imread()’ loads the image from the file. ‘cv.resize()’ resizes the frame. ‘displayBox()’ and ‘getOutputs()’ are the two functions we have implemented above. ‘cv2_imshow()’ displays the image in the output cell. This is the replacement for ‘cv.imshow()’, since that function is disabled in Google Colab.

import numpy as np
import cv2 as cv
from google.colab.patches import cv2_imshow
import glob
import os

widthheight = 320  # network input size (YOLOv3-320)
confidenceThreshold = 0.5
nmsThreshold = 0.6
font = cv.FONT_HERSHEY_SIMPLEX
# One random color per class
colors = np.random.uniform(0, 255, size = (len(classNames), 3))

path = "/content/"
pathFile = path + "frame*.jpg"
files = sorted(glob.glob(pathFile), key = os.path.getmtime)
frameSize = 0.3

for f in files:
    print(os.path.basename(f))
    frame = cv.imread(f)
    frame = cv.resize(frame, None, fx = frameSize, fy = frameSize)
    displayBox(getOutputs(frame))
    cv2_imshow(frame)

If you want to only input a single image, the code below will do that. Change the file path in the variable ‘path’.

import numpy as np
import cv2 as cv
from google.colab.patches import cv2_imshow

widthheight = 320
confidenceThreshold = 0.5
nmsThreshold = 0.6
font = cv.FONT_HERSHEY_SIMPLEX
colors = np.random.uniform(0, 255, size = (len(classNames), 3))

path = "image.jpg"
frame = cv.imread(path)
outputs = getOutputs(frame)
displayBox(outputs)
cv2_imshow(frame)

References

Pysource: Yolo3 Tutorial, 37 min.